Chebyshev inequality conditional expectation

… given the value of the other random variable. Let $(X, \mathcal{F}, \mu)$ be a …

Conditional expectation inequality

As noted by microhaus in the comments, the formula you wrote for the variance, $\frac{1}{N}\sum_{i=1}^{N}(x_i - \bar{x})^2$, is the second central moment, i.e., the variance. Chebyshev's inequality states that the deviation of $X$ from $EX$ is controlled by $\operatorname{Var}(X)$. We can, however, use Chebyshev's inequality to compute an upper bound on it.
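The sketch below checks this on a small, made-up data list. It relies on one fact: the empirical distribution of a finite data set is itself a probability distribution, and its variance is exactly the $\frac{1}{N}$ formula above. So Chebyshev's inequality holds for the data directly, and the fraction of points at least $k$ standard deviations from the mean never exceeds $1/k^2$. The helper names are illustrative, not from the source.

```python
import math

def population_variance(xs):
    """Second central moment: (1/N) * sum((x - mean)^2)."""
    n = len(xs)
    mean = sum(xs) / n
    return sum((x - mean) ** 2 for x in xs) / n

def tail_fraction(xs, k):
    """Fraction of points with |x - mean| >= k * sigma (population sigma)."""
    n = len(xs)
    mean = sum(xs) / n
    sigma = math.sqrt(population_variance(xs))
    return sum(1 for x in xs if abs(x - mean) >= k * sigma) / n

data = [2, 4, 4, 4, 5, 5, 7, 9]  # hypothetical sample: mean 5, variance 4
for k in (1.5, 2, 3):
    # Chebyshev: the empirical tail fraction is at most 1/k^2
    print(k, tail_fraction(data, k), 1 / k ** 2)
```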

STAT 394

Since the Choquet-like integral is not linear, this type of inequality does not hold in general.

We start from Markov's inequality for nonnegative $X$, $\Pr[X \ge n] \le E[X]/n$, which we used previously. Applied to a nonnegative $Y$ with threshold $a^2$, it reads $P(Y \ge a^2) \le E[Y]/a^2$.

Jensen's inequality for conditional expectations: we start with a few general results on convex functions $f: \mathbb{R}^n \to \mathbb{R}$.

Note: It has been brought to my attention that there is an existing question on here almost identical to mine.
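The sketch below checks both statements exactly on a small nonnegative distribution:

- Markov's inequality, $P(X \ge t) \le E[X]/t$.
- The Chebyshev step, $P(Y \ge a^2) \le E[Y]/a^2$ with $Y = (X - \mu)^2$.

The pmf is hypothetical. `fractions.Fraction` keeps every comparison exact, so no rounding can mask a violation.

```python
from fractions import Fraction as F

# Hypothetical pmf of a nonnegative random variable X: value -> probability
pmf = {0: F(1, 2), 1: F(1, 4), 4: F(1, 8), 10: F(1, 8)}

def expect(pmf, g=lambda x: x):
    """E[g(X)] for a discrete pmf."""
    return sum(p * g(x) for x, p in pmf.items())

def prob(pmf, event):
    """P(event(X)) for a discrete pmf."""
    return sum(p for x, p in pmf.items() if event(x))

mu = expect(pmf)
# Markov: P(X >= t) <= E[X] / t for every t > 0
for t in (1, 2, 4, 10):
    assert prob(pmf, lambda x: x >= t) <= mu / t

# Chebyshev via Markov applied to Y = (X - mu)^2 with threshold a^2
var = expect(pmf, lambda x: (x - mu) ** 2)
for a in (1, 2, 3, 5):
    assert prob(pmf, lambda x: (x - mu) ** 2 >= a ** 2) <= var / a ** 2
print(mu, var)  # 2 43/4
```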

Markov and Chebyshev inequalities: Haruhiko Ogasawara, 2021.

Index excerpt: Chebyshev's inequality; compound Poisson distribution; conditional expectation (Jensen's inequality); conditional probability (regular version); conditioning; continuity theorem; control; convergence (almost everywhere, in distribution, in law, in probability); convolution; countable …

Conditional expectation (CIS160 Lec 11T): Chebyshev's inequality, $\Pr(|X - \mu| \ge a) \le \operatorname{Var}(X)/a^2$, applied to coin tosses, where $X_i = 1$ if toss $i$ is heads and $0$ otherwise (a failure); the trials are identically distributed, so the number of heads is binomial.

… where $\nu(A) = P(Z_1 \in A)$ and $\nu'_n(A) = \frac{1}{n}\sum_{i=1}^{n} \mathbb{1}(Z'_i \in A)$ (the so-called empirical measure).
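Here is a sketch of the empirical measure $\nu'_n(A) = \frac{1}{n}\sum_{i=1}^{n}\mathbb{1}(Z'_i \in A)$. It represents the set $A$ as a Python predicate, which is an illustrative choice and not something the source specifies. The sample values are hypothetical.

```python
from fractions import Fraction

def empirical_measure(sample, in_A):
    """nu'_n(A) = (1/n) * #{i : Z'_i in A}, with the set A given as a predicate."""
    n = len(sample)
    return Fraction(sum(1 for z in sample if in_A(z)), n)

z_prime = [0.3, 1.7, -0.4, 2.2, 0.9]  # hypothetical draws Z'_1, ..., Z'_5
# A = [0, 1]: two of the five draws fall in A
print(empirical_measure(z_prime, lambda z: 0 <= z <= 1))  # 2/5
```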

Expectation

A Conditional Version of Chebyshev’s Other Inequality

Chebyshev's inequality for conditional expectation. On the requirements of the Jensen inequality for conditional expectation.

- Conditional probability $p(X \mid Y = y)$ or $p(Y \mid X = x)$: like taking a slice of $p(X, Y)$
  - For a discrete distribution: …
  - For a continuous distribution: …

Picture courtesy: *Computer Vision: Models, Learning, and Inference* (Simon Prince).
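The "slice" picture can be made concrete for a discrete joint distribution:

1. Fix $Y = y$.
2. Keep that slice of $p(X, Y)$.
3. Divide by its total mass $p(Y = y)$.

The sketch below does this for a hypothetical joint pmf stored as a dict keyed by `(x, y)`. In the continuous case, the sum over the slice becomes an integral of the joint density.

```python
from fractions import Fraction as F

# Hypothetical joint pmf p(X = x, Y = y)
joint = {
    (0, 0): F(1, 4), (1, 0): F(1, 4),
    (0, 1): F(1, 8), (1, 1): F(3, 8),
}

def conditional_x_given_y(joint, y):
    """p(X = x | Y = y): take the slice Y = y and divide by its mass p(Y = y)."""
    slice_ = {x: p for (x, yy), p in joint.items() if yy == y}
    p_y = sum(slice_.values())
    return {x: p / p_y for x, p in slice_.items()}

print(conditional_x_given_y(joint, 1))
```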

Probability terms and conditions Flashcards

Here we revisit Chebyshev's inequality (Proposition 14).

Probability inequalities

Let $(X_n)_{n \ge 0}$ be an adapted sequence of integrable real-valued random variables, that is, a sequence in which each $X_n$ is $\mathcal{F}_n$-measurable. The conditional expectation $E(Y \mid X)$ is a function of the conditioning random variable $X$, i.e., it is itself a random variable. Expectation with respect to a conditional distribution is known as conditional expectation.

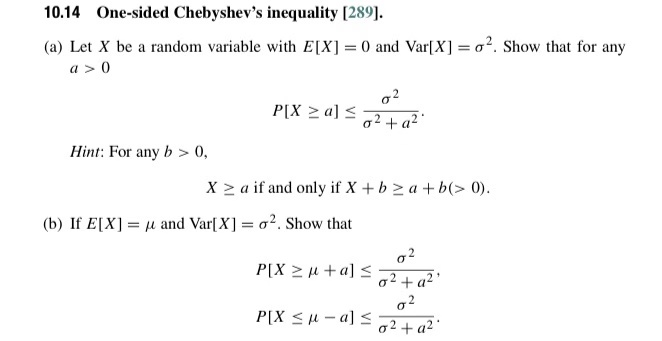

The proof of Chebyshev's inequality relies on Markov's inequality. For $c > 0$ we obtain the classical form of the Markov inequality:

$$P(|X| \ge c) \le \frac{E(|X|)}{c}.$$

Integral inequalities play important roles in classical probability and measure theory.

Mixtures of Gompertz random variables (Gompertz, 1825) $X_1$ and $X_2$ are identified in terms of relations between the conditional expectation of $[\exp(\alpha X_{2:2}) - \exp(\alpha X_{1:2})]^k$ given $X_{1:2}$ and the …

It does not, however, have such a complete answer, so I leave it to the moderators to remove this post.

Clearly, $W$ is a nonnegative random variable for any value of $\alpha \in \mathbb{R}$. Chebyshev's inequality: let $X$ be any random variable.

Concentration inequality for conditional probability?

If $a > 0$, then …

Conditional probability distribution: the probability distribution of one random variable given the value of the other.

Discrete-time martingales. Since we defined the conditional distribution for 4 separate cases, the conditional expectation has to be evaluated accordingly in these cases.

Fact 3 (Markov's inequality). Let $\{\mathcal{F}_n\}_{n \ge 0}$ be an increasing sequence of $\sigma$-algebras in a probability space $(\Omega, \mathcal{F}, P)$. We use these results to …

Instructor: John Tsitsiklis.

The formula used in the probabilistic proof of the Chebyshev inequality …

Lecture 14: Markov and Chebyshev's Inequalities

Moreover, since concave functions are the negatives of convex functions, JI also implies that if $h$ is concave, then $h(E[X]) \ge E[h(X)]$. Chebyshev's inequality also has an extended version for a nondecreasing nonnegative function $\varphi$, as follows: …

I tried using Chebyshev's inequality, but that quite clearly doesn't give me what I want.

If $X$ denotes income, then $X$ is less than \$10,000 or greater than \$70,000 if and only if $|X - \mu| > c$, where $\mu = 40{,}000$ and $c = 30{,}000$.
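The statement of the extended version for a nondecreasing nonnegative $\varphi$ is cut off in the source. One standard form, given here as an assumption about what is meant, follows directly from Markov's inequality. If $\varphi$ is nondecreasing and nonnegative on $[0, \infty)$ with $\varphi(a) > 0$, then $\{|X| \ge a\} \subseteq \{\varphi(|X|) \ge \varphi(a)\}$, so

$$
P(|X| \ge a) \;\le\; P\bigl(\varphi(|X|) \ge \varphi(a)\bigr) \;\le\; \frac{E[\varphi(|X|)]}{\varphi(a)}.
$$

Taking $\varphi(t) = t^2$ and replacing $X$ by $X - \mu$ recovers Chebyshev's inequality, $P(|X - \mu| \ge a) \le \operatorname{Var}(X)/a^2$.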

Proving Chebyshev's Inequality

Such a sequence is called a filtration. The conditional Markov inequality states that, for $a > 0$, $P(|X| \ge a \mid \mathcal{F}) \le \frac{1}{a}E(|X| \mid \mathcal{F})$ almost surely.

Chebyshev's inequality was proven by the Russian mathematician Pafnuty Chebyshev in 1867, who developed it to prove a general form of the Law of Large Numbers (see Exercise [exer 8. …]). After Chebyshev proved it, one of his students, Andrey Markov, provided another … The inequality itself appeared much earlier in a work by Bienaymé, and in discussing its history Maistrov remarks that it was referred to as the Bienaymé–Chebyshev inequality for a long time.

Definition of a martingale.

In total, we have $2n$ i.i.d. random variables: $Z_1, \dots, Z_n, Z'_1, \dots, Z'_n$. This is intuitively expected, as the variance shows how far, on average, …

This section investigates the Chebyshev inequality for the Choquet-like integral.
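The sketch below checks the conditional Markov inequality $P(|X| \ge a \mid \mathcal{F}) \le \frac{1}{a}E(|X| \mid \mathcal{F})$ in the simplest setting. There, $\mathcal{F}$ is generated by a discrete variable $W$, so conditioning on $\mathcal{F}$ means conditioning on each value $W = w$. The joint pmf and the helper name are hypothetical. The inequality is tight for $w_1$ at $a = 3$.

```python
from fractions import Fraction as F

# Hypothetical joint pmf of (W, X); sigma(W) plays the role of F
joint = {
    ("w1", -3): F(1, 10), ("w1", 0): F(3, 10),
    ("w2", 1): F(2, 10), ("w2", 5): F(1, 10), ("w2", -2): F(3, 10),
}

def conditional_markov_holds(joint, a):
    """Check P(|X| >= a | W = w) <= E(|X| | W = w) / a for every w (a > 0)."""
    for w in {w for w, _ in joint}:
        cell = {x: p for (ww, x), p in joint.items() if ww == w}
        p_w = sum(cell.values())
        tail = sum(p for x, p in cell.items() if abs(x) >= a) / p_w
        mean_abs = sum(p * abs(x) for x, p in cell.items()) / p_w
        if tail > mean_abs / a:
            return False
    return True

print(all(conditional_markov_holds(joint, a) for a in (F(1, 2), 1, 2, 3, 5)))  # True
```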

Markov's inequality and Chebyshev's inequality (Ghosh 2002; Steele 2004; Haccou et al. 2005) give upper bounds on the probability that a nonnegative random variable takes large values. The IC is out of spec if $X$ is more than, say, $3\sigma_X$ away from its mean $\mu_X$. This is based on Durrett's 5.…
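As a worked example of the $3\sigma$ rule (not taken from the source), Chebyshev's inequality with $a = 3\sigma_X$ gives

$$P(|X - \mu_X| \ge 3\sigma_X) \le \frac{\sigma_X^2}{(3\sigma_X)^2} = \frac{1}{9} \approx 0.111$$

for any distribution with finite variance. A minimal sketch of the general distribution-free bound $1/k^2$ (capped at 1) follows. The function name is illustrative.

```python
def chebyshev_bound(k):
    """Upper bound on P(|X - mu| >= k * sigma) for any X with finite variance."""
    if k <= 0:
        raise ValueError("k must be positive")
    return min(1.0, 1.0 / k ** 2)

for k in (1, 2, 3):
    print(f"k = {k}: P(out of spec) <= {chebyshev_bound(k):.3f}")
```

For $k = 3$ this prints a bound of 0.111. Because it uses nothing about the shape of the distribution, it is usually far from tight for a specific model.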

Spring 2017 Course Notes Note 18 Chebyshev’s Inequality

- Problem: Estimating the Bias of a Coin
- Markov's Inequality
- Chebyshev's Inequality
- Example: Estimating the Bias of a Coin
- Estimating a General Expectation
- The Law of Large Numbers

Multiplying both sides of inequality (2.3) by the nonnegative random variable $\mathbb{1}_{\{X>\nu\}}$, and bearing in mind that $\mathbb{1}_{\{X>\nu\}}^2 = \mathbb{1}_{\{X>\nu\}}$, the following inequality follows:

$$\nu\,\mathbb{1}_{\{X>\nu\}} \le X\,\mathbb{1}_{\{X>\nu\}}. \tag{2.5}$$

Jensen's inequality (JI) states that, for a convex function $g$ and random variable $X$, $E[g(X)] \ge g(E[X])$. This inequality is exceptionally general: it holds for any convex function.

Before going to Chebyshev's inequality, we first state the following simpler bound, which applies only to nonnegative random variables (i.e., r.v.'s which take only values $\ge 0$). In particular, for any positive real number $b$, we have …

Our results concern a real scalar or vector valued random variable $X$ and its associated probability measure $P$. Here we provide another proof.

Suppose we have a biased coin, but we don't know what the bias is.

The conditions of the strict inequalities between the improved and …

How do concentration inequalities for conditional probability work? The conditional probability I am considering is …
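For the coin-bias problem, let $\hat p = S_n/n$ be the fraction of heads in $n$ independent tosses of a coin with bias $p$.

- Its variance is $\operatorname{Var}(\hat p) = p(1-p)/n \le 1/(4n)$.
- Chebyshev's inequality then gives $P(|\hat p - p| \ge \varepsilon) \le \frac{1}{4n\varepsilon^2}$.
- Requiring this to be at most $\delta$ gives $n \ge \frac{1}{4\varepsilon^2\delta}$.

The sketch below computes that sample size. The function name is hypothetical. Exact fractions avoid a float such as `0.1 ** 2` pushing the ceiling up by one.

```python
import math
from fractions import Fraction

def tosses_needed(eps, delta):
    """Smallest n with 1 / (4 n eps^2) <= delta, i.e. n >= 1 / (4 eps^2 delta)."""
    eps, delta = Fraction(eps), Fraction(delta)
    return math.ceil(1 / (4 * eps ** 2 * delta))

print(tosses_needed("0.1", "0.05"))  # 500
```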

CIS160 Lec 11T

It was stated earlier, without proof, by the French statistician Irénée-Jules Bienaymé in 1853.

If we take $g(x) = x^2$, … Then we complain about the $n$ in the denominator.

Although Mallows and Richter [39] provided some bounds for conditional expectations for traditional power moments, these apply only to the expectation of a single random variable and are not necessarily tight.

Let $Y = (X - \mu)^2$. Note that $|X - \mu| \ge a$ is equivalent to $(X - \mu)^2 \ge a^2$.

For generality, we will denote the conditional distribution that we introduced in the last section by …

Here we derive Markov's inequality in simple ways, sharpen it by using information about conditional expectations, and interpret it geometrically.

"… Conditional Expectations", by C. …

Using expectation, we can define the moments and other special functions of a random variable.

Chebyshev's inequality for the Sugeno–Murofushi integral.

We can use conditional expectation to express

$$E[X] = P(X \ge a)\,E[X \mid X \ge a] + P(X < a)\,E[X \mid X < a].$$
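The decomposition $E[X] = P(X \ge a)\,E[X \mid X \ge a] + P(X < a)\,E[X \mid X < a]$ yields Markov's inequality for nonnegative $X$:

- The second term is nonnegative.
- $E[X \mid X \ge a] \ge a$.
- Hence $E[X] \ge a\,P(X \ge a)$.

The sketch below verifies both the identity and the bound on a hypothetical pmf. It uses the convention, chosen here for convenience, that a conditional mean on a zero-probability event is reported as 0.

```python
from fractions import Fraction as F

pmf = {0: F(1, 3), 2: F(1, 3), 6: F(1, 6), 9: F(1, 6)}  # hypothetical nonnegative X

def split_expectation(pmf, a):
    """Return (P(X>=a), E[X|X>=a], P(X<a), E[X|X<a]); a mean on an empty event is 0."""
    hi = {x: p for x, p in pmf.items() if x >= a}
    lo = {x: p for x, p in pmf.items() if x < a}
    p_hi, p_lo = sum(hi.values()), sum(lo.values())
    e_hi = sum(x * p for x, p in hi.items()) / p_hi if p_hi else F(0)
    e_lo = sum(x * p for x, p in lo.items()) / p_lo if p_lo else F(0)
    return p_hi, e_hi, p_lo, e_lo

mean = sum(x * p for x, p in pmf.items())
for a in (1, 2, 6, 9, 10):
    p_hi, e_hi, p_lo, e_lo = split_expectation(pmf, a)
    assert mean == p_hi * e_hi + p_lo * e_lo  # law of total expectation
    assert a * p_hi <= mean                   # Markov: a P(X >= a) <= E[X]
print(mean)  # 19/6
```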

The Chebyshev Inequality. The equations are not equal and are not meant to be.


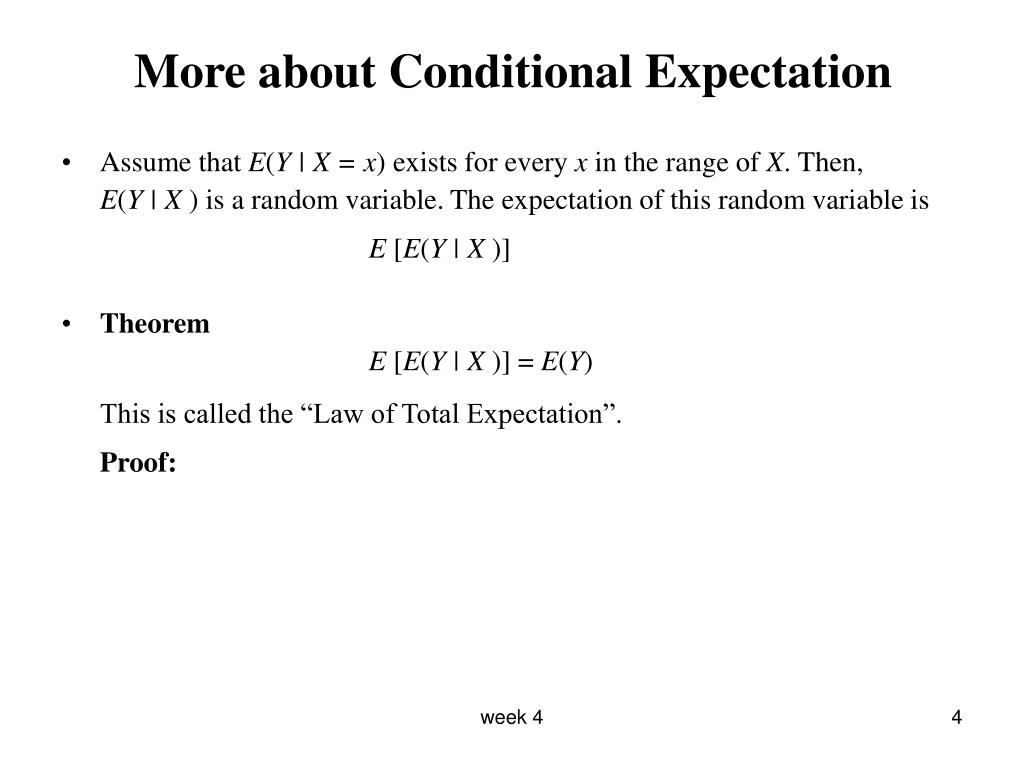

Law of Iterated Expectation. Theorem: The conditional expectation $E(Y \mid X)$ satisfies $E[E(Y \mid X)] = E(Y)$. Example A …

The multivariate Markov and multiple Chebyshev inequalities are improved using the partial expectation.

How to Prove Markov's Inequality and Chebyshev's Inequality

Mallows and Donald Richter, Bell Telephone Laboratories and New York University.

Definition 2. Let $X$ and $Y$ be random variables with expectations $\mu_X = E(X)$ and $\mu_Y = E(Y)$, and let $k$ be a positive integer.

If you define $Y = (X - EX)^2$, then $Y$ is a nonnegative random variable, so we can apply Markov's inequality to $Y$. Thus, we obtain

$$P(|X - EX| \ge a) = P(Y \ge a^2) \le \frac{E[Y]}{a^2} = \frac{\operatorname{Var}(X)}{a^2}.$$

Kruglov (2016): Taking unconditional expectation shows that $E'(\mathbb{1}\{U \le X\}) \ge E'(X)$, which, since $\mathbb{1}\{U \le X\}$ is Bernoulli-$p$, is equivalent to the assertion of the lemma.

Example: For the following joint probability mass function, calculate $E(Y)$ and $E(Y \mid X)$.

| Y\X | $x_1$ | $x_2$ | $x_3$ |
|---|---|---|---|
| $y_1$ | 1/2 | 0 | 0 |
| $y_2$ | 0 | 1/8 | 1/8 |
| $y_3$ | 0 | 1/8 | 1/8 |

A conditional version of Chebyshev's other inequality is proved for …

Chebyshev inequality: the Chebyshev inequality is a simple inequality which allows you to extract information about the values that $X$ can take if you know only the mean and the variance.

Yes, that's the conditional Markov inequality, and your proof is fine (at least for $a > 0$; for $a = 0$ the expression $\frac{1}{a}$ doesn't make sense).
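The table labels the outcomes but does not give numeric values for $y_1, y_2, y_3$. Assuming, purely for illustration, that $y_j = j$ (and treating $x_1, x_2, x_3$ as labels), the sketch below does three things:

- computes $E(Y)$;
- computes $E(Y \mid X = x_i)$ for each $x_i$;
- checks the law of iterated expectation $E[E(Y \mid X)] = E(Y)$.

```python
from fractions import Fraction as F

# Joint pmf from the table (zero cells omitted); y-values y_j = j are hypothetical
joint = {
    ("x1", 1): F(1, 2),
    ("x2", 2): F(1, 8), ("x3", 2): F(1, 8),
    ("x2", 3): F(1, 8), ("x3", 3): F(1, 8),
}

# Marginal p(X = x)
p_x = {}
for (x, _), p in joint.items():
    p_x[x] = p_x.get(x, 0) + p

e_y = sum(y * p for (_, y), p in joint.items())
e_y_given_x = {
    x: sum(y * p for (xx, y), p in joint.items() if xx == x) / p_x[x] for x in p_x
}
iterated = sum(p_x[x] * e_y_given_x[x] for x in p_x)

print("E(Y) =", e_y)            # E(Y) = 7/4
print("E(Y|X) =", e_y_given_x)
print("E[E(Y|X)] =", iterated)  # E[E(Y|X)] = 7/4
```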

Chebyshev's Inequality

1 Markov’s Inequality

Conditional variance: the variance of one random variable given one or more other random variables.

Chebyshev's Inequality for conditional expectation

Chebyshev's original other inequality ([1], pp. 128–131, 157–160),

$$\frac{1}{b-a}\int_a^b f(x)\,dx \cdot \frac{1}{b-a}\int_a^b g(x)\,dx \le \frac{1}{b-a}\int_a^b f(x)g(x)\,dx,$$

follows from …
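Chebyshev's other (integral) inequality holds when $f$ and $g$ are both nondecreasing, or both nonincreasing, on $[a, b]$. That hypothesis is not visible in the fragment above. The rough numerical sketch below assumes the example pair $f(x) = x$, $g(x) = x^2$ on $[0, 1]$ and a midpoint-rule approximation of the averages. Both are illustrative choices.

```python
def average(h, a, b, n=10_000):
    """Midpoint-rule approximation of (1/(b-a)) * integral_a^b h(x) dx."""
    width = (b - a) / n
    return sum(h(a + (i + 0.5) * width) for i in range(n)) / n

def f(x):
    return x       # nondecreasing on [0, 1]

def g(x):
    return x ** 2  # nondecreasing on [0, 1]

lhs = average(f, 0, 1) * average(g, 0, 1)      # exact value 1/2 * 1/3 = 1/6
rhs = average(lambda x: f(x) * g(x), 0, 1)     # exact value 1/4
print(lhs, rhs, lhs <= rhs)
```

With oppositely monotone functions, such as $x$ and $1 - x$, the inequality reverses.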

CONDITIONAL EXPECTATION AND MARTINGALES

… the events of random variables influence the outcomes of other random events.

Moreover, Mallows and Richter [39] primarily used conditional information to sharpen Chebyshev-type tail bounds …

Proof of Chebyshev's Inequality. Can't we do better …

Transformations of a single random variable.

By an inequality of Chebyshev type we mean a bound on $P(A) = P(X \in A)$ (for some given … In these inequalities …

The $k$th moment of $X$ is defined as $E(X^k)$.
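For a discrete random variable, the $k$th moment $E(X^k)$ is a probability-weighted sum over the support. The minimal sketch below uses a hypothetical fair-die pmf. For $k = 1$ the moment is the expectation, and $E(X^2) - (EX)^2$ gives the variance that Chebyshev's inequality uses.

```python
from fractions import Fraction as F

die = {x: F(1, 6) for x in range(1, 7)}  # hypothetical example: fair six-sided die

def moment(pmf, k):
    """k-th raw moment E(X^k) of a discrete random variable."""
    return sum(p * x ** k for x, p in pmf.items())

mean = moment(die, 1)                  # 7/2
variance = moment(die, 2) - mean ** 2  # E(X^2) - (EX)^2 = 35/12
print(mean, variance)                  # 7/2 35/12
```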

Chebyshev's inequality

Any convex function $f: \mathbb{R}^n \to \mathbb{R}$ is continuous, and …

Taking the expectation of both sides of (2.5), $\nu\,\mathbb{1}_{\{X>\nu\}} \le X\,\mathbb{1}_{\{X>\nu\}}$, gives $\nu\,P(X > \nu) \le E[X\,\mathbb{1}_{\{X>\nu\}}]$, the main part of the result.

If $k = 1$, the $k$th moment equals the expectation.

You can prove the Cauchy–Schwarz inequality with the same methods that we used to prove $|\rho(X, Y)| \le 1$ in Section 5.

To get rid of the condition $X \ge 0$, we take the random variable $Z = |X|$.

Mallows and Richter (1969): Among all probability distributions on the interval $[-A, B]$ with mean zero, the most spread out is the two-point distribution concentrated on $-A$ and $B$.

Applying Markov's inequality with $Y$ and constant $a^2$ gives $P(Y \ge a^2) \le E[Y]/a^2$. Prove Chebyshev's inequality.
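A mean of zero on $\{-A, B\}$ forces the two-point distribution to put mass $B/(A+B)$ on $-A$ and mass $A/(A+B)$ on $B$. Its variance is $AB$. No mean-zero distribution on $[-A, B]$ can do better: since $(X + A)(B - X) \ge 0$ there, taking expectations gives $E[X^2] \le AB$.

The sketch below checks this under those assumptions. The endpoints $A = 1$, $B = 3$ and the comparison distribution are hypothetical.

```python
from fractions import Fraction as F

def two_point(A, B):
    """Mean-zero distribution on {-A, B}: P(-A) = B/(A+B), P(B) = A/(A+B)."""
    A, B = F(A), F(B)
    return {-A: B / (A + B), B: A / (A + B)}

def mean_var(pmf):
    """Return (mean, variance) of a discrete pmf."""
    m = sum(x * p for x, p in pmf.items())
    return m, sum(p * (x - m) ** 2 for x, p in pmf.items())

A, B = 1, 3
m, v = mean_var(two_point(A, B))
print(m, v)  # 0 3  (the variance equals A * B)

# Another mean-zero distribution on [-1, 3], for comparison (hypothetical)
other = {F(-1): F(1, 2), F(0): F(1, 4), F(2): F(1, 4)}
print(mean_var(other))  # variance 3/2, smaller than A * B
```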