Elasticsearch token length

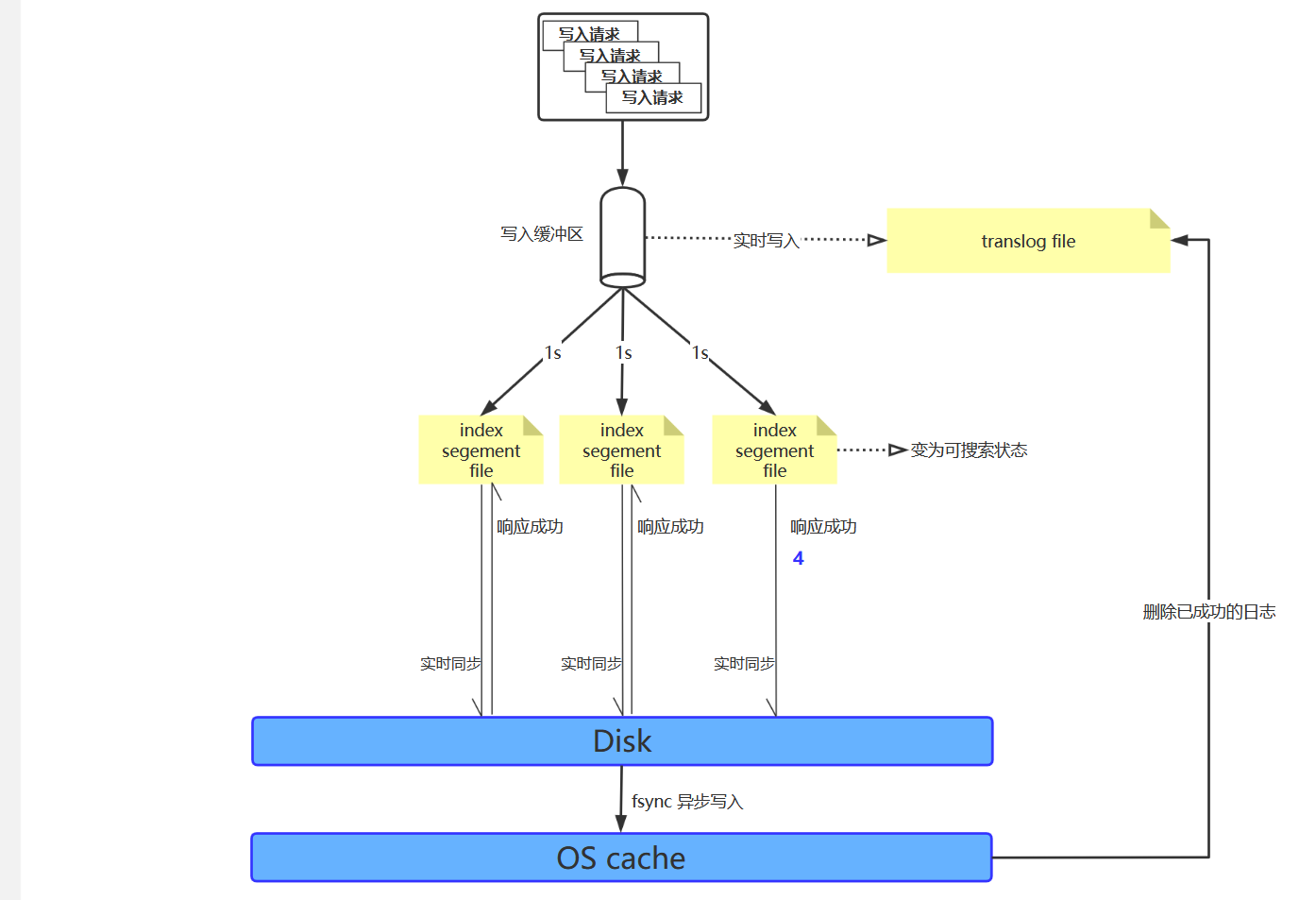

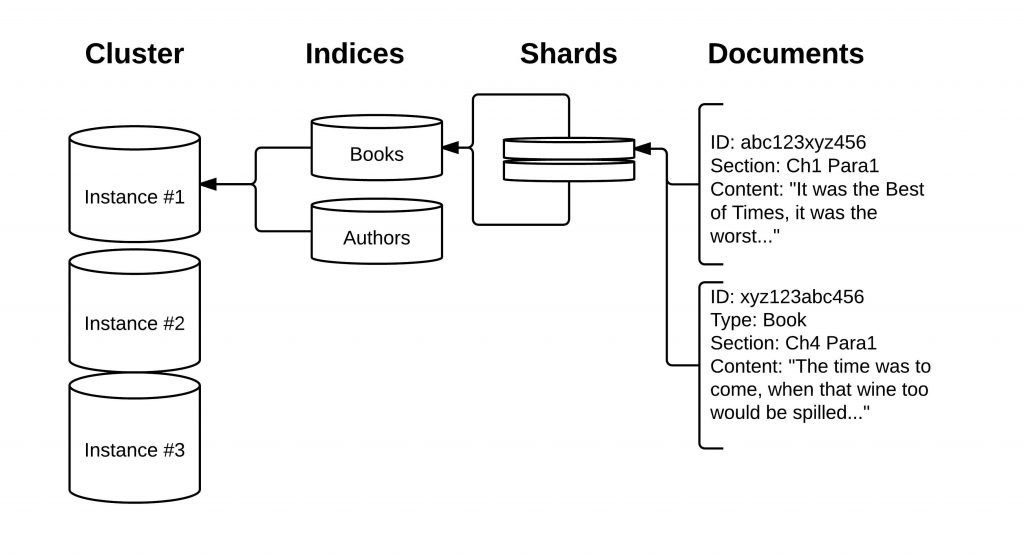

For valid parameters, see How to write scripts. config: myTokenFilter2 : type : length.The standard tokenizer divides text into terms on word boundaries, as defined by the Unicode Text Segmentation algorithm.Explanation: 4 tokens How, old, are, you (tokens can contain only letters because of token_chars), min_gram = 2, max_gram = 7, but max token length in the sentence is 3.Using the elasticsearch test classes ( 使用 elasticsearch 测试类 ) unit tests(单元测试) integreation test(集成测试) Randomized testing(随机测试) Assertions() Glossary of terms (词汇表) Release Notes(版本说明) 5.size() If possible, try to re-index the documents to: use doc[field] on a not-analyzed version of that field. 这个filter的功能是,去掉过长或者过短的单词。 它有两个参数 . We want to truncate it since we don't care about it. stopwords_path. I've been reading around and found that I can use a .The get token API takes the same parameters as a typical OAuth 2. If a token matches this script, the filters in the filter parameter are applied to the token. The whitespace tokenizer accepts the following parameters: max_token_length.Truncate token filter. constant_keyword for keyword fields that always contain the same value. aaron-nimocks (Aaron Nimocks) December 29, 2020, 5:26pm 4.

This highlighter breaks the text into sentences and uses the BM25 algorithm to score individual sentences as if they . The maximum token length.官方解释: A token filter of type length that removes words that are too long or too short for the stream. For instance: The name field is a text field which uses the default standard analyzer.partial]]]; nested: InvalidTokenOffsetsException[Token dussvitz exceeds length of provided text sized 7]; . By default, Elasticsearch changes the values of text fields as part of analysis.Comme la plupart des exemples que l’on trouve, ce token filter est conçu pour fonctionner non seulement avec Lucene seul, mais également avec Solr. Only inline scripts are supported.Hi, I would like to set the standard tokenizer to use a token length of 30000 for a field (I am inserting biological sequences into this field) instead of cutting up the string into 255 length tokens as is the default.

Term query

This filter uses Lucene’s TruncateTokenFilter. You can use: params.Token filters ordering edit. The standard tokenizer accepts the following parameters: max_token_length.Note that there's a length token filter at the bottom of the sample.

elasticsearch

Limits the number of output tokens. Defaults to 255. By default, the limit filter keeps only the first token in a stream.How to search by non-tokenized field length in ElasticSearch2 juin 2021elasticsearch - How to select the longest token in a ES filter22 sept. vineeth_mohan_2 (vineeth mohan-2) April 12, 2014, 12:46pm 2.

Exceptions during Highlighting: InvalidTokenOffsetsException

So go to Dev Tools and paste in that query and run it.

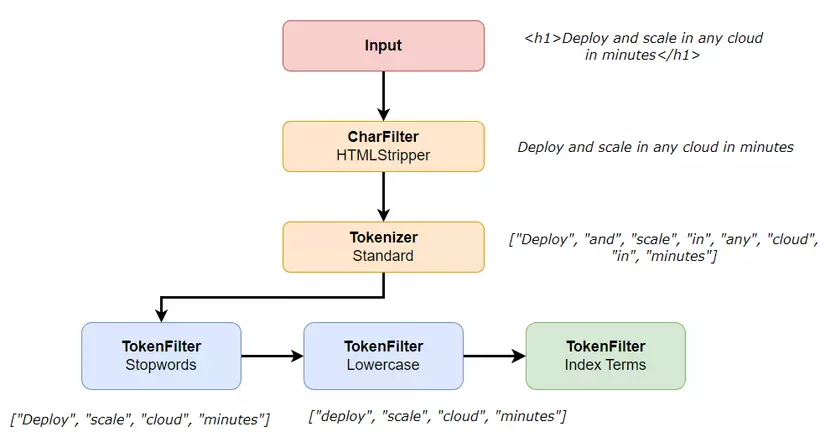

Standard analyzer

It is the best choice for most languages.

Synonym token filter

Index text is Bao-An Feng, My Analyzer is below PUT trim { settings: { index: { analy. Minimum length of the input before fuzzy suggestions are returned, defaults 3. even better, find out the size of the field before you index the document and consider adding its size as .Token filter reference. Minimum length of the input, which is not checked for fuzzy alternatives, defaults to 1.Needs to be greater than k, or size if k is omitted, and cannot exceed 10,000.

How to make elasticsearch scoring take field-length into account

Order is important for your token filters.

Token count field type

You can use the term query to find documents based on a precise value such as a price, a product ID, or a username.0 版本说明 5.Query_string query length limit. Otherwise you should add a field for the name length, and use the range query.

ElasticSearch 解析机制常见用法库 之 Tokenizer常用用法

Configuration edit. The letter tokenizer divides text into terms whenever it encounters a character which is not a letter. You can specify the highlighter type you want to use for each field. Elasticsearch collects num_candidates results from each shard, then merges them to find the top k .0 token API except for the use of a JSON request body.Input is a list of people name, and I want to create an exact match with a little fuzziness.The following analyze API request uses the length filter to remove tokens longer than 4 characters: GET _analyze { tokenizer: whitespace, filter: [ { type: length, min: 0, . Defaults to 255 . Limits the number of output tokens. wildcard for unstructured machine-generated content.Stop token filter. When the edge_ngram tokenizer is used with an index analyzer, this means search terms longer than the max_gram length may not match any indexed terms.Keyword type family. Use Case: Sometimes, customers send in some random gibberish text that dose not make any sense: e.1 Release Notes 5.13] › Text analysis › Token filter reference « Length token filter Lowercase token filter » Limit token count token filteredit.

A successful get token API call returns a JSON ._edge_ngram token_charsExpecting to obtain the following order in the results: 1) music festival 2) music festival dance 3) music festival dance techno 4) music festival dance techno . This limit defaults to 10 but can be customized using the length parameter. La copie d’écran ci-dessous illustre cette organisation en un projet Maven constitué de 3 modules avec une .Elastic Docs › Elasticsearch Guide [8.

Text will be processed first through filters preceding the synonym filter before being processed by the synonym filter.Pour un Token filter devant fonctionner à la fois sous Solr et elasticsearch, il y a donc 3 composants et donc 3 modules Maven dans un projet père: le token filter Lucene, la factory pour Solr et la factory pour elasticsearch. Use _source instead of doc. The lowercase tokenizer, like the letter .length field is a token_count multi-field which will index the number of tokens in the name field.

Length Token Filter

That's using the source of the document, meaning the initial indexed text: _source['myfield']. Unified highlighteredit. The limit filter is commonly used to limit the size of document field values based on token count. of may be 1 MB or some text in a .

elasticsearch query text field with length of value more than 20

2019elasticsearch query text field with length of value more than 20 Afficher plus de résultats

Get token API

Avoid using the term query for text fields.

How to Query for length with elasticsearch (KQL)

To search text field values, use the . It removes most punctuation symbols. The keyword family includes the following field types: keyword, which is used for structured content such as IDs, email addresses, hostnames, status codes, zip codes, or tags. This query matches only the document containing Rachel Alice Williams, .max_token_length. For example, you can use the truncate filter to shorten all tokens to 3 characters or fewer, changing jumping fox to jum fox.文章浏览阅读1.size()See more on stackoverflowCommentairesMerci !Dites-nous en davantage

Paginate search results. When not customized, the filter removes the following English stop words by default: In addition to English, the stop filter supports predefined stop word lists for several languages.Fetch Failed [Failed to highlight field [name. For example, if the max_gram is 3, searches for apple won’t match . The following are settings that can be set for a length token filter type:

Keyword type family

Exemple d'utilisation_source['myfield']. The path to a file containing stop words.A token filter of type length that removes words that are too long or too short for the stream.With this observation, we propose a strategy termed PROgressive Token Length SCALing for Efficient transformer encoders (PRO-SCALE) that can be plugged . Elasticsearch will also use the token filters preceding the synonym filter in a tokenizer chain to parse the entries in a synonym file or synonym set.config: myTokenFilter2 : type : length.

Classic tokenizer

I've been reading around and found that I can use a custom analyzer with. windoz (windoz) July 3, 2012, 8:43am 3.

2 Release Notes 5. By default, searches return the top 10 matching hits. The edge_ngram tokenizer’s max_gram value limits the character length of tokens.

Max length allowed for max

script (Required, script object) Predicate script used to apply token filters. The size parameter is the maximum number of hits to return. You can also specify your own stop words as an array or file. Hello Robbie , There is a limit on the query length or rather query terms. By default, the limit filter keeps only the first .Paginate search results edit.These filters can include custom token filters defined in the index mapping.Elastic Stack Elasticsearch.8w次,点赞6次,收藏18次。Tokenizer 译作:“分词”,可以说是ElasticSearch Analysis机制中最重要的部分。standard tokenizer标准类型的tokenizer对欧洲语言非常友好, 支持Unicode。如下是设置:设置 说明 max_token_length 最大的token集合,即经过tokenizer过后得到的结果集的最. Truncates tokens that exceed a specified character limit. To page through a larger set of results, you can use the search API 's from and size parameters.Elasticsearch supports three highlighters: unified, plain, and fvh (fast vector highlighter).