Grid search vs. random search

Most people claim that random search is better than grid search. Grid search and randomized search are the two most popular methods for hyperparameter optimization of any model. If each of m hyperparameters is given n candidate values, grid search creates a total of $n^m$ possible grid points to check.

Random Search vs Grid Search for hyperparameter optimization

In randomized search, the number of parameter settings that are sampled is given by n_iter. In grid search, the grid point that maximizes the average cross-validation score is selected. In Python, grid search can be implemented with scikit-learn's GridSearchCV class. Note, however, that when the total number of function evaluations is fixed in advance, grid search gives a good coverage of the search space that is no worse than random search with the same budget; the difference between the two, if any, is negligible. Grid search is also a reasonable choice when you have a prior belief about what the hyperparameters should be.

Blackbox HPO (1): Grid search and random search


Hyperparameter Tuning the Random Forest in Python

For the remainder of this article, we will implement cross-validation on the random forest model created in my prior article. One of the tools available in the search for the best model is scikit-learn's GridSearchCV class. Here, we discuss how grid search is performed and how it is combined with cross-validation in GridSearchCV. Machine learning models have hyperparameters: parameters that must be tuned by hand.
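GridSearchCV performs this loop with real estimators. The following minimal, dependency-free sketch shows the mechanics. It assumes a hypothetical toy k-nearest-neighbour classifier on synthetic data in place of the random forest. The sketch loops over every combination in a grid_values dict, scores each one with 3-fold cross-validation (as with cv=3), and keeps the combination with the highest mean accuracy. This is not GridSearchCV's implementation, and helper names such as make_data and cv_accuracy are illustrative only.

```python
import random
from itertools import product

def make_data(n=150, seed=0):
    """Hypothetical 1-D binary data: label is 1 when x > 0, with 10% label noise."""
    rng = random.Random(seed)
    data = []
    for _ in range(n):
        x = rng.uniform(-1.0, 1.0)
        y = int(x > 0)
        if rng.random() < 0.1:  # flip about 10% of labels to add noise
            y = 1 - y
        data.append((x, y))
    return data

def knn_predict(train, x, k):
    """Majority vote of the k nearest training points (ties go to class 0)."""
    nearest = sorted(train, key=lambda point: abs(point[0] - x))[:k]
    votes = sum(label for _, label in nearest)
    return int(2 * votes > k)

def cv_accuracy(data, k, n_folds=3):
    """Mean accuracy over n_folds contiguous folds, analogous to cv=3."""
    fold_size = len(data) // n_folds
    scores = []
    for f in range(n_folds):
        start, stop = f * fold_size, (f + 1) * fold_size
        val = data[start:stop]                 # held-out fold
        train = data[:start] + data[stop:]     # remaining folds
        correct = sum(knn_predict(train, x, k) == y for x, y in val)
        scores.append(correct / len(val))
    return sum(scores) / len(scores)

def grid_search_cv(data, grid_values, n_folds=3):
    """Try every combination in grid_values and keep the best mean CV score."""
    names = list(grid_values)
    best_params, best_score = None, float("-inf")
    for combo in product(*(grid_values[name] for name in names)):
        params = dict(zip(names, combo))
        score = cv_accuracy(data, n_folds=n_folds, **params)
        if score > best_score:
            best_params, best_score = params, score
    return best_params, best_score

data = make_data()
best_params, best_score = grid_search_cv(data, {"k": [1, 3, 5, 9, 15]})
print(best_params, round(best_score, 3))
```

In practice, GridSearchCV would take the real estimator and a scoring name such as "accuracy" instead of the hand-written cv_accuracy helper.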

Code implementation and results

Also, compared with other methods, it does not get bogged down in local optima.

Grid Search VS Random Search VS Bayesian Optimization

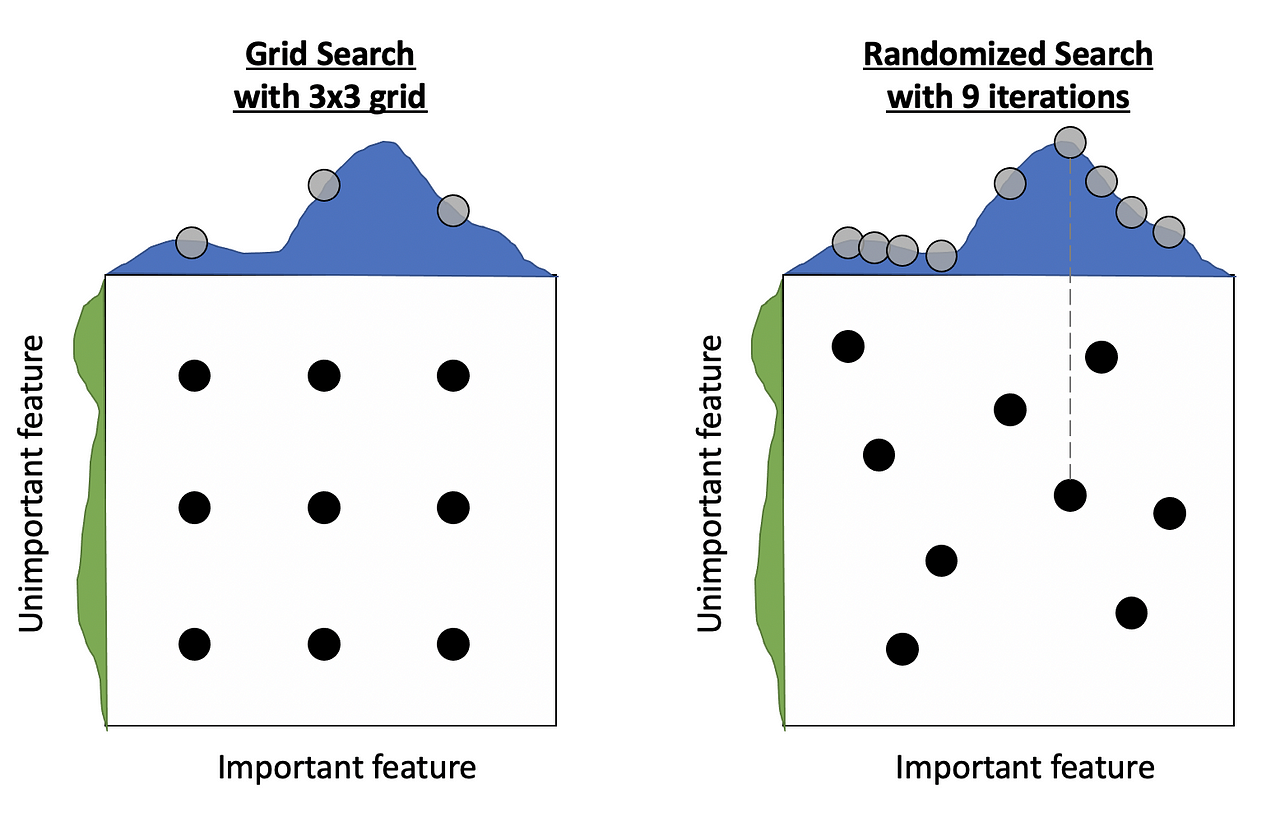

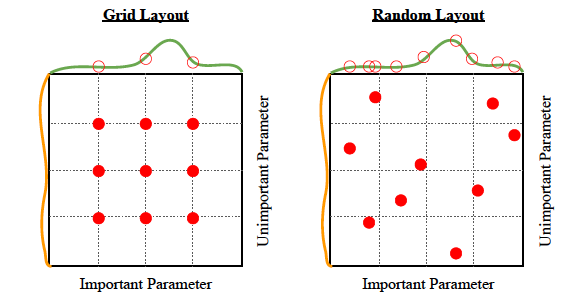

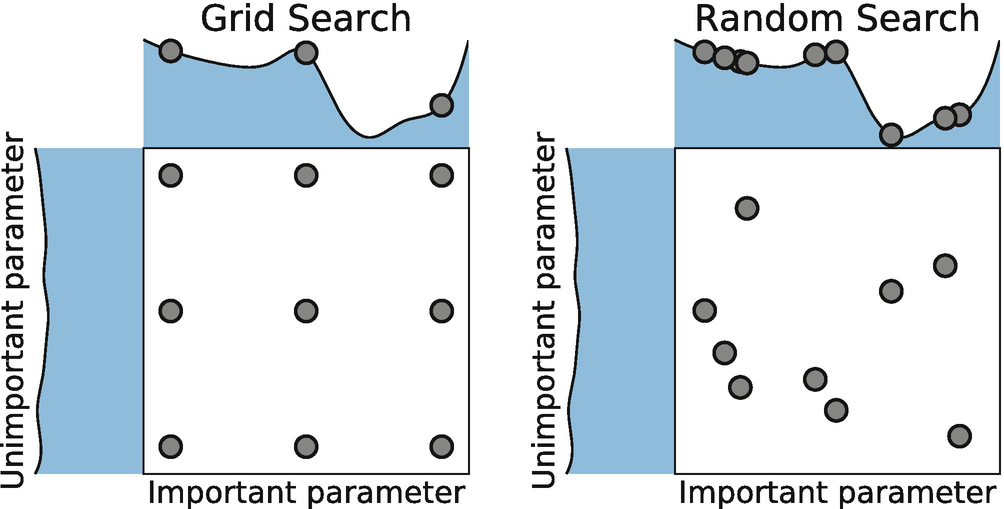

Once we know what to optimize, it's time to address how to optimize the parameters. In this post, we set up and ran experiments comparing grid search and random search, two search strategies for optimizing hyperparameters. For a decision tree, we would typically set the … These algorithms are referred to as "search" algorithms because, at base, optimization can be framed as a search problem.

The traditional way of performing hyperparameter optimization has been grid search, or a parameter sweep, which is simply an exhaustive search through a manually specified subset of the hyperparameter space. What is grid search? Its goal is to find the hyperparameters best suited to the model. Grid search feeds each candidate value of the model's hyperparameters in turn and keeps the combination that gives the highest performance. Because grid search tries all combinations of hyperparameters, it increases the time complexity of the computation and could result in an …

Scikit-learn, the Python machine learning library, provides two special classes for hyperparameter optimization: GridSearchCV for grid search and RandomizedSearchCV for random search. Randomized search searches through the given hyperparameter distributions to find the best values. Tune hyperparameters on a validation set or with cross-validation, never on the test set. Otherwise, you will be overfitting to the test set and will get falsely high performance estimates.
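The exhaustive enumeration described above can be sketched in plain Python with itertools.product. This is a minimal sketch under stated assumptions. The function toy_score is a hypothetical stand-in for a real validation score. The grid values for max_depth and min_samples_split (two random forest hyperparameters mentioned in this article) are chosen only for illustration.

```python
from itertools import product

def toy_score(max_depth, min_samples_split):
    """Hypothetical validation score; peaks at max_depth=6, min_samples_split=4."""
    return -((max_depth - 6) ** 2) - 0.5 * (min_samples_split - 4) ** 2

def grid_search(score_fn, grid):
    """Evaluate score_fn on every combination in grid (a dict of lists)."""
    names = list(grid)
    results = []
    for combo in product(*(grid[name] for name in names)):
        params = dict(zip(names, combo))
        results.append((score_fn(**params), params))
    best_score, best_params = max(results, key=lambda r: r[0])
    return best_params, best_score, len(results)

grid = {"max_depth": [2, 4, 6, 8], "min_samples_split": [2, 4, 8]}
best_params, best_score, n_evals = grid_search(toy_score, grid)
print(best_params, best_score, n_evals)
# {'max_depth': 6, 'min_samples_split': 4} 0.0 12
```

The number of evaluations is the product of the list lengths (4 × 3 = 12 here). This is why the cost of grid search grows so quickly as more hyperparameters are added.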

The paper Grid Search, Random Search, Genetic Algorithm: A Big Comparison for NAS compares the three most popular algorithms for hyperparameter optimization (grid search, random search, and a genetic algorithm). It applies them to neural architecture search (NAS) and uses them to build a convolutional neural network (the search architecture). In random grid search, the user also specifies a stopping criterion, which controls when the random grid search is completed. The 'grid_values' variable is then passed to GridSearchCV together with the random forest object (created earlier) and the name of the scoring function (in our case, 'accuracy'). This is the most basic hyperparameter tuning method.
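The stopping criterion for random grid search can be illustrated with a minimal sketch. It assumes two hypothetical criteria: a maximum number of evaluations (max_evals) and a "patience" limit on consecutive trials without improvement. These names are illustrative and are not H2O's API. The objective toy_score is also hypothetical.

```python
import random

def toy_score(x, y):
    """Hypothetical objective to maximize; best value 0 at (0.3, 0.7)."""
    return -((x - 0.3) ** 2) - (y - 0.7) ** 2

def random_search(score_fn, space, max_evals=200, patience=30, seed=0):
    """Random search that stops after max_evals trials, or earlier when
    `patience` consecutive trials fail to improve the best score."""
    rng = random.Random(seed)
    best_params, best_score = None, float("-inf")
    since_improvement = 0
    n_evals = 0
    while n_evals < max_evals and since_improvement < patience:
        # Draw each hyperparameter uniformly from its (low, high) range.
        params = {name: rng.uniform(low, high) for name, (low, high) in space.items()}
        score = score_fn(**params)
        n_evals += 1
        if score > best_score:
            best_params, best_score = params, score
            since_improvement = 0
        else:
            since_improvement += 1
    return best_params, best_score, n_evals

space = {"x": (0.0, 1.0), "y": (0.0, 1.0)}
best_params, best_score, n_evals = random_search(toy_score, space)
print(n_evals, best_params, best_score)
```

A budget cap bounds the worst-case cost. The patience rule stops the search early once new random draws have stopped paying off.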

Learn how to use grid search for parameter tuning

We will also use a 3-fold cross-validation scheme (cv = 3). Random search has a 95% probability of finding a combination of parameters in the top 5% of all combinations with only 60 iterations. If we knew the relative importance of the two hyperparameters, we could improve grid search by putting more grid points along the more important axis, but often we do not know this in advance. Grid search exhaustively goes through every single combination of hyperparameter values. Random search, by contrast, selects only a random subset of those combinations, replacing the exhaustive enumeration with random selection. We are tuning five hyperparameters of the random forest classifier here: max_depth, max_features, min_samples_split, bootstrap, and criterion.

There are many different methods for performing hyperparameter optimization, but two of the most commonly used are grid search and random search. Grid search is an exhaustive search method: we define a grid of hyperparameter values, train the model on all possible combinations, and keep the optimal hyperparameters. One comparison of randomized search and grid search optimizes the hyperparameters of a linear SVM trained with SGD. In this example, grid search only tested three unique values for each hyperparameter, whereas the random … (The NAS comparison paper is by Petro Liashchynskyi and Pavlo Liashchynskyi.)

Note: for demonstration, the random search example uses the test split for tuning, but in real problems, please use a separate validation set for tuning purposes.
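The 95% / 60-iteration figure follows from one assumption: each random trial independently lands in the top 5% of the search space with probability 0.05. Under that assumption, the chance that at least one of n trials does so is 1 − 0.95^n. The helper names below are illustrative.

```python
import math

def prob_hit_top_fraction(n_trials, top_fraction=0.05):
    """P(at least one of n independent uniform trials lands in the top fraction)."""
    return 1.0 - (1.0 - top_fraction) ** n_trials

def trials_needed(confidence=0.95, top_fraction=0.05):
    """Smallest n with prob_hit_top_fraction(n, top_fraction) >= confidence."""
    return math.ceil(math.log(1.0 - confidence) / math.log(1.0 - top_fraction))

print(round(prob_hit_top_fraction(60), 4))  # 0.9539
print(trials_needed())                      # 59
```

So 60 iterations give about 95.4%, and 59 is the smallest budget that reaches 95%. The figure does not depend on how many hyperparameters are being tuned, which is the usual argument for random search.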

Hyperparameter optimization

Additionally, we will implement what is known as grid search, which allows us to run the model over a grid of hyperparameters in order to identify the optimal result.

The tuning algorithm exhaustively searches this space. In this tutorial, you'll learn how to use GridSearchCV for hyperparameter tuning in machine learning. In general terms, optimization means finding the inputs that minimize or maximize an objective function. For random search, scikit-learn provides RandomizedSearchCV(estimator, param_distributions, *, n_iter=10, scoring=None, n_jobs=None, refit=True, cv=None, verbose=0, ...).

Hyperparameter tuning: Grid search and random search

Grid Search, Random Search, Genetic Algorithm: A Big Comparison for NAS. Last but not least, we fit it all by calling the fit method.

Hyperparameter tuning by randomized search. Suddenly, grid search doesn't look that great anymore, and random search is actually better at "uniformly" filling out the hyperparameter space under this new geometry. In the previous notebook, we showed how to use a grid-search approach to search for the hyperparameters that maximize the generalization performance of a predictive model. When all parameters are presented as lists, sampling without replacement is performed. This uses a random set of hyperparameters. Depending on the application, though, this could be a significant benefit.
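Sampling without replacement from parameter lists can be sketched in plain Python. This minimal sketch enumerates every combination and draws n_iter distinct ones with random.sample. It only imitates the behaviour described above and is not scikit-learn's implementation. The function name and the example lists are hypothetical.

```python
import random
from itertools import product

def sample_from_lists(param_lists, n_iter, seed=0):
    """Draw n_iter distinct combinations (without replacement) from a dict of lists."""
    names = list(param_lists)
    all_combos = list(product(*(param_lists[name] for name in names)))
    if n_iter > len(all_combos):
        raise ValueError(f"n_iter={n_iter} exceeds {len(all_combos)} combinations")
    rng = random.Random(seed)
    return [dict(zip(names, combo)) for combo in rng.sample(all_combos, n_iter)]

param_lists = {"max_depth": [3, 5, 10, None], "criterion": ["gini", "entropy"]}
for params in sample_from_lists(param_lists, n_iter=5):
    print(params)
```

Materializing the full grid is fine for small lists. When n_iter exceeds the number of combinations, this sketch simply raises an error.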

Hyperparameter Tuning Using Grid Search and Random Search

Random search, as the name suggests, is the process of randomly sampling hyperparameters from a defined search space. In random grid search, the user specifies the hyperparameter space in exactly the same way, except that H2O samples uniformly from the set of all possible hyperparameter value combinations.
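A search space for random sampling does not have to be a finite list. This minimal sketch assumes a hypothetical mixed space: a log-uniform learning_rate, a uniform integer max_depth, and a categorical criterion. It evaluates a hypothetical toy_score on n_iter random draws and keeps the best. All names and ranges are illustrative.

```python
import math
import random

def sample_params(rng):
    """One draw from a hypothetical mixed search space."""
    return {
        "learning_rate": 10 ** rng.uniform(-4, -1),  # log-uniform in [1e-4, 1e-1]
        "max_depth": rng.randint(2, 12),             # integer, both ends inclusive
        "criterion": rng.choice(["gini", "entropy"]),
    }

def toy_score(learning_rate, max_depth, criterion):
    """Hypothetical validation score, best near learning_rate=1e-2, max_depth=6."""
    penalty = (math.log10(learning_rate) + 2) ** 2 + 0.1 * (max_depth - 6) ** 2
    return -penalty + (0.05 if criterion == "entropy" else 0.0)

def random_search(n_iter=50, seed=0):
    """Sample n_iter configurations and return the highest-scoring one."""
    rng = random.Random(seed)
    trials = [sample_params(rng) for _ in range(n_iter)]
    return max(trials, key=lambda p: toy_score(**p))

print(random_search())
```

Sampling the exponent uniformly spreads trials evenly across orders of magnitude. A plain uniform draw on [1e-4, 1e-1] would rarely produce values near 1e-4.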

A random forest is a bagging-based ensemble learning algorithm that combines multiple decision-tree classifiers. A notable feature is that it can be used for both classification and regression. Ensemble learning refers to combining multiple machine learning models.

13 Grid Search

Grid search is the most common hyperparameter tuning approach, given its simple and straightforward procedure. Then we define the parameters, and the values to try for each parameter, in the grid_values variable. Random search can outperform grid search, especially when only a small number of hyperparameters affects the final performance of the machine learning algorithm. Random search is the best parameter search technique when there are fewer dimensions. Grid search employs an exhaustive search strategy, systematically exploring combinations of the specified hyperparameters and their values. It does not scale well as the number of parameters to tune increases.

Scikit-learn provides two generic approaches to parameter search. For given values, GridSearchCV exhaustively considers all parameter combinations. RandomizedSearchCV instead samples a given number of candidates from a parameter space with a specified distribution. Grid search creates a grid over the search space and evaluates the model for every possible combination of hyperparameters in that space.
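A small experiment can illustrate the claim that random search can win when only a few hyperparameters matter. It assumes a hypothetical objective in which only the first of two hyperparameters affects the score, and a budget of 9 trials for both methods. A 3 × 3 grid tests only 3 distinct values of the important hyperparameter. Nine random draws test 9 distinct values.

```python
import random
from itertools import product

def score(important, unimportant):
    """Hypothetical objective: only `important` matters; peak at 0.37."""
    return -abs(important - 0.37)

budget = 9

# Grid search: a 3 x 3 grid gives only 3 distinct values of `important`.
axis = [1 / 6, 1 / 2, 5 / 6]
grid_trials = list(product(axis, axis))

# Random search: 9 independent uniform draws give 9 distinct values of `important`.
rng = random.Random(0)
random_trials = [(rng.random(), rng.random()) for _ in range(budget)]

for name, trials in [("grid", grid_trials), ("random", random_trials)]:
    distinct = len({x for x, _ in trials})
    best = max(score(x, y) for x, y in trials)
    print(name, "distinct important values:", distinct, "best score:", round(best, 3))
```

With the same budget, grid search spends two-thirds of its trials repeating values of the important axis. Random search never does. Which method wins on a particular run still depends on the random draws.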

Grid search is an uninformed search method. It involves taking n equally spaced points in each interval of the form $[a_i, b_i]$, including $a_i$ and $b_i$. In scikit-learn, the corresponding class has the signature GridSearchCV(estimator, param_grid, *, scoring=None, ...).
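This grid construction can be written out directly. The minimal sketch below takes n equally spaced points on each interval $[a_i, b_i]$ (endpoints included) and forms the Cartesian product. For m intervals, that gives $n^m$ grid points. The example intervals are hypothetical.

```python
from itertools import product

def linspace(a, b, n):
    """n equally spaced points from a to b, including both endpoints (needs n >= 2)."""
    step = (b - a) / (n - 1)
    # Compute the first n - 1 points, then append b exactly to avoid rounding drift.
    return [a + i * step for i in range(n - 1)] + [b]

def build_grid(intervals, n):
    """Cartesian product of n equally spaced points on each interval [a_i, b_i]."""
    axes = [linspace(a, b, n) for a, b in intervals]
    return list(product(*axes))

intervals = [(0.0, 1.0), (1.0, 5.0), (-2.0, 2.0)]  # m = 3 hyperparameters
grid = build_grid(intervals, n=4)
print(len(grid))  # 64, i.e. 4 ** 3
```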

Random Search in Machine Learning

Scikit-learn provides GridSearchCV for grid search and RandomizedSearchCV for random search. If you're new to data science and machine learning, you may not be familiar with these terms. As the name implies, random search selects hyperparameters at random and produces a result for each selection. The hyperparameters that yielded the best value are taken as the optimal solution (see the paper Random Search for Hyper-Parameter Optimization). Think of parameters as the "settings" of a machine learning model.

Grid search is probably the simplest and most intuitive method of hyperparameter optimization. It involves exhaustively searching for the best combination of hyperparameter values in a defined search space. We can see here that random search does better because of the way the values are picked. Random search can be applied directly to the discrete setting described above, and it also generalizes to continuous and mixed spaces.

This article covers two very popular hyperparameter tuning techniques, grid search and random search, and shows how to combine them with coarse-to-fine tuning. We can further improve our results by using grid search to focus on the most promising region of the search space. However, a grid-search approach has limitations. You define a grid of hyperparameter values. Setting these hyperparameters to values better suited to the problem at hand can improve accuracy.
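Coarse-to-fine tuning, as described above, can be sketched in two stages. Stage 1 runs a random search over the whole space to find a promising point. Stage 2 runs a fine grid search in a small neighbourhood around it. This is a minimal sketch assuming a hypothetical two-parameter objective on the unit square. The radius and grid sizes are arbitrary illustrative choices.

```python
import random
from itertools import product

def toy_score(x, y):
    """Hypothetical objective to maximize; best value 0 at (0.62, 0.18)."""
    return -((x - 0.62) ** 2) - (y - 0.18) ** 2

def coarse_random(score_fn, n_iter, seed=0):
    """Stage 1: random search over the unit square [0, 1] x [0, 1]."""
    rng = random.Random(seed)
    trials = [(rng.random(), rng.random()) for _ in range(n_iter)]
    return max(trials, key=lambda p: score_fn(*p))

def fine_grid(score_fn, center, radius, n):
    """Stage 2: n x n grid around `center`, clipped to [0, 1]; the centre itself
    is kept as a candidate so the result is never worse than stage 1."""
    def axis(c):
        lo, hi = max(0.0, c - radius), min(1.0, c + radius)
        return [lo + i * (hi - lo) / (n - 1) for i in range(n)]
    candidates = list(product(axis(center[0]), axis(center[1]))) + [center]
    return max(candidates, key=lambda p: score_fn(*p))

coarse_best = coarse_random(toy_score, n_iter=20)
fine_best = fine_grid(toy_score, coarse_best, radius=0.1, n=11)
print("coarse:", coarse_best, toy_score(*coarse_best))
print("fine:  ", fine_best, toy_score(*fine_best))
```

The coarse stage spends a small budget locating the right region. The fine grid then spends its evaluations densely where they are most likely to matter.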

Grid Search vs Random Search

Basically, we divide the domain of the hyperparameters into a discrete grid. Unlike grid search, randomized search tries out only some of the parameter values. This chapter describes grid search methods that specify the possible values of the parameters in advance. Grid search looks at every possible combination of hyperparameters to find the best model, whereas random search only selects and tests random combinations of hyperparameters. For this reason, methods such as random search and grid search were introduced.