Hinge loss keras

Squared Hinge Loss.

Losses

Hinge loss is used for classification problems as an alternative to cross-entropy. It was developed primarily for support vector machines (SVMs); the difference between the hinge loss and the cross-entropy loss is that the former arises from trying to maximize the margin.

The loss is defined as loss = max(1 - actual * predicted, 0), where the actual values are generally -1 or 1. Written with the usual symbols, this is max(0, 1 - y * f(x)), where y is the true class label (either -1 or 1) and f(x) is the raw model output for input x.

Other loss functions, such as cross-entropy and mean squared error, are designed to learn to predict a label directly, to regress a value, or to predict a set of values given an input, which is what traditional classification and regression tasks require.

A typical question from someone porting code: "I've converted most of the code already, however I'm having trouble with sklearn. I couldn't find the analog of the SVC classifier in Keras, and I am confused as to which one to use."

Keras provides hinge losses for maximum-margin classification. To use hinge loss with Keras and TensorFlow, construct the loss from the tf namespace (loss = tf.…). A matching metric exists as well: Hinge(name="hinge", dtype=None) computes the hinge metric between y_true and y_pred. Integer targets can be converted into categorical targets with a Keras utility. Loss functions applied to the output of a model aren't the only way to create losses.
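The definition above can be checked by hand with a few numbers. The following is a minimal sketch in plain Python, not the Keras implementation; the function name `hinge` is chosen here for illustration only.

```python
def hinge(y_true, score):
    """Hinge loss for one example: max(0, 1 - y * f(x)).

    y_true is the class label (-1 or +1); score is the raw model output f(x).
    """
    return max(0.0, 1.0 - y_true * score)


# Correct side of the boundary and outside the margin: no loss.
print(hinge(+1, 2.5))   # 0.0
# Correct side but inside the margin: a small loss remains.
print(hinge(+1, 0.5))   # 0.5
# Wrong side of the boundary: the loss grows linearly with the score.
print(hinge(-1, 0.5))   # 1.5
```

The second case is what separates hinge loss from a plain misclassification count: a correct prediction is still penalized until it clears the margin of 1.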

In machine learning, the hinge loss is a loss function used for training classifiers. Loss functions are an essential part of training a neural network: selecting the right loss function helps the network know how far off it is, so that it can properly utilize its optimizer.

Using loss functions. If y_true and y_pred are missing, then a callable is returned that will compute the loss function and, by default, reduce the loss to a scalar tensor; see the reduction parameter for details. Args: reduction: the type of reduction to apply to the loss.

1. Linearly separable. We first consider the linearly separable case, in which a hyperplane can be found in the space that perfectly separates the positive and negative samples.

A question from a forum: "My examples are to be classified into a binary class. I have googled and searched on Stack Exchange, and it seems that this is easily implemented in Keras using the loss function hinge or categorical_hinge." One reply: "I think the hinge loss in Keras is multiclass."

"Also, wouldn't it be good to mention in the docs that the binary hinge loss function should be used with labels being {-1,+1} instead of {0,1}?" "Yes, I agree with you. You have to change the 0 values of y_true to -1."

With PyTorch, the loss object is HingeEmbeddingLoss(), called as loss(y_pred, y_true). And here is the mathematical formula, written as a NumPy function: def hinge_loss(y_pred, y_true): return np.maximum(0, 1 - y_true * y_pred).

scikit-learn provides hinge_loss(y_true, pred_decision, *, labels=None, sample_weight=None), which computes the average hinge loss (non-regularized).
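The advice to change the 0 values of y_true to -1 can be illustrated with a small sketch. It is written in plain Python under the assumption of one raw score per example; `to_signed_labels` and `mean_hinge` are hypothetical helper names, not Keras or scikit-learn functions.

```python
def to_signed_labels(labels):
    """Change the 0 values to -1; labels that are already -1 or +1 pass through."""
    return [-1 if y == 0 else y for y in labels]


def mean_hinge(y_true, y_pred):
    """Average of max(0, 1 - y * p) after mapping {0, 1} labels to {-1, +1}."""
    signed = to_signed_labels(y_true)
    terms = [max(0.0, 1.0 - y * p) for y, p in zip(signed, y_pred)]
    return sum(terms) / len(terms)


y_true = [0, 1, 1, 0]
y_pred = [-2.0, 0.5, 3.0, 1.0]

print(to_signed_labels(y_true))    # [-1, 1, 1, -1]
print(mean_hinge(y_true, y_pred))  # 0.625
```

Without the conversion, every example labeled 0 would contribute max(0, 1 - 0 * p) = 1 regardless of the prediction, so the loss would carry no signal for that class.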

machine learning

Measures the loss given an input tensor x and a labels tensor y (containing 1 or -1).

For the reduction argument, the default value is AUTO. AUTO indicates that the reduction option will be determined by the usage context. In almost all cases, this defaults to SUM_OVER_BATCH_SIZE.

With scikit-learn, fitting a linear SVM takes two lines: model = SVC(kernel='linear', probability=True), then model.fit(X, Y_labels). Super easy, right?

Hinge loss in Keras. The hinge loss is used for maximum-margin classification, most notably for support vector machines. The categorical variant computes the categorical hinge loss between y_true and y_pred. On the label format, one commenter writes: "In the link you shared, it is mentioned what to do if your y_true is originally {0,1}. The documentation should specify that."
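For the multi-class case, a rough sketch of the categorical hinge may help. It follows the formulation Keras uses as far as I understand it (the score of the true class against the largest score among the other classes, with a margin of 1); treat it as an illustration under that assumption rather than the library code. The function name is reused here only for readability.

```python
def categorical_hinge(y_true, y_pred):
    """Categorical hinge for one sample.

    y_true is a one-hot list; y_pred holds one raw score per class.
    """
    # Score assigned to the true class.
    pos = sum(t * p for t, p in zip(y_true, y_pred))
    # Largest score among the masked entries (the true class is masked to 0).
    neg = max((1.0 - t) * p for t, p in zip(y_true, y_pred))
    return max(0.0, neg - pos + 1.0)


# True class (index 1) wins by more than the margin: no loss.
print(categorical_hinge([0, 1, 0], [0.5, 3.0, -1.0]))  # 0.0
# A wrong class scores higher than the true class: positive loss.
print(categorical_hinge([0, 1, 0], [2.0, 1.5, -1.0]))  # 1.5
```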

How to Choose the Loss Function

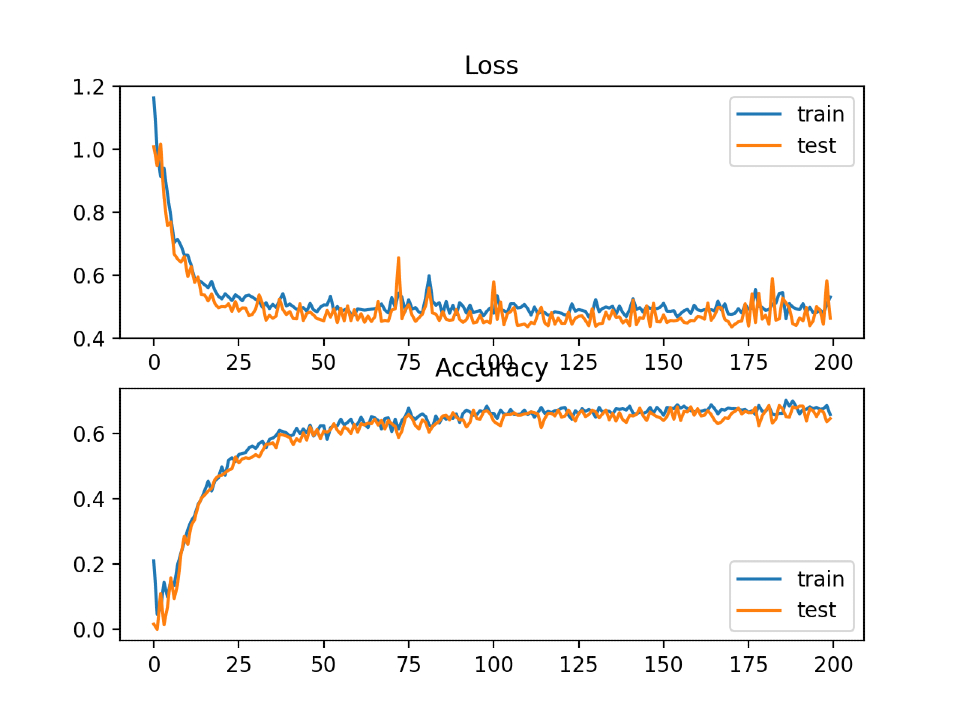

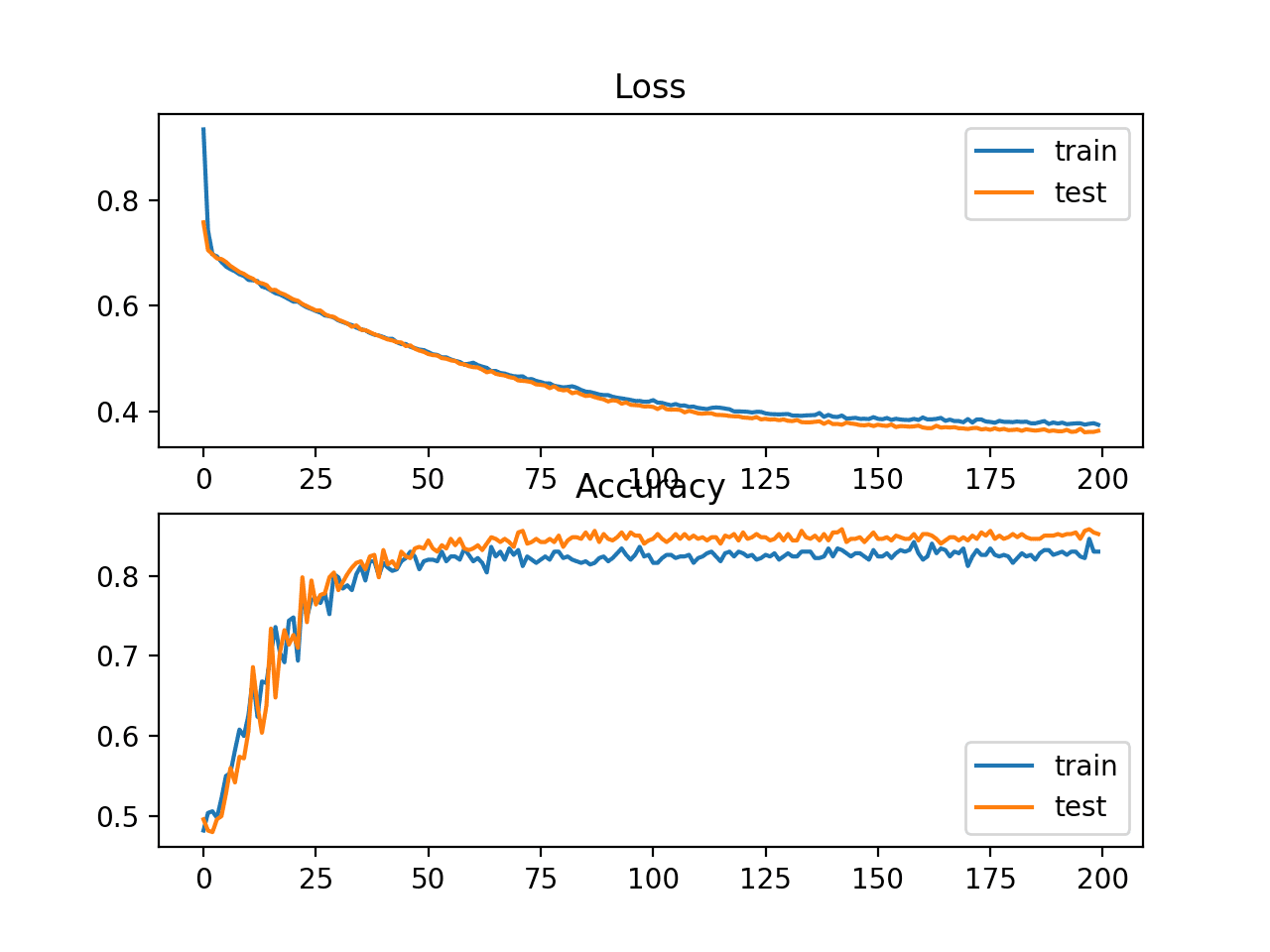

Line plots of the training and testing loss are shown for squared hinge loss, and for hinge loss and classification accuracy.

Args: y_pred: the predicted values. sample_weight: optional; sample_weight acts as a coefficient for the loss.
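To make "acts as a coefficient for the loss" concrete, here is a small sketch in plain Python. It assumes one raw score per example, labels already in {-1, +1}, and a reduction that divides the weighted sum by the batch size; the function name and the scalar handling are illustrative assumptions, not the Keras code.

```python
def weighted_mean_hinge(y_true, y_pred, sample_weight=None):
    """Hinge loss where each example's loss is multiplied by its weight."""
    losses = [max(0.0, 1.0 - y * p) for y, p in zip(y_true, y_pred)]
    if sample_weight is None:
        sample_weight = [1.0] * len(losses)
    elif isinstance(sample_weight, (int, float)):
        # Assumption: a scalar weight simply scales every example equally.
        sample_weight = [float(sample_weight)] * len(losses)
    weighted = [w * l for w, l in zip(sample_weight, losses)]
    # Assumption: divide by the batch size, not by the sum of the weights.
    return sum(weighted) / len(weighted)


y_true = [1, -1, 1, -1]
y_pred = [0.5, 0.5, -1.0, -2.0]   # per-example losses: 0.5, 1.5, 2.0, 0.0

print(weighted_mean_hinge(y_true, y_pred))                # 1.0
print(weighted_mean_hinge(y_true, y_pred, [1, 0, 2, 1]))  # 1.125
print(weighted_mean_hinge(y_true, y_pred, 2))             # 2.0
```

A weight of 0 removes an example from the loss entirely, which is a common way to mask padded or unreliable samples.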

Regression losses

Metrics are also available as subclasses of Metric (stateful).

Computes the hinge loss between y_true and y_pred

Args: y_true: ground truth values. If called with y_true and y_pred, then the corresponding loss is evaluated and the result returned (as a tensor).

A related question: "I'm trying to implement a pairwise hinge loss for two tensors which are both 200-dimensional."

Recently, I've been covering many of the deep learning loss functions that can be used, by converting them into actual Python code with the Keras deep learning framework. The hinge loss function has many extended versions, which are commonly used in SVM models.
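One possible reading of the pairwise hinge question is sketched below in plain Python. The setup is an assumption, since the question does not spell it out: an anchor vector is scored against a positive and a negative vector with cosine similarity, and the loss asks the positive score to beat the negative score by a margin. The names `cosine_similarity`, `pairwise_hinge`, and `margin` are illustrative.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length, non-zero vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def pairwise_hinge(anchor, positive, negative, margin=1.0):
    """max(0, margin - s(anchor, positive) + s(anchor, negative))."""
    s_pos = cosine_similarity(anchor, positive)
    s_neg = cosine_similarity(anchor, negative)
    return max(0.0, margin - s_pos + s_neg)


anchor = [1.0, 0.0]
# Positive matches the anchor, negative is orthogonal: margin satisfied.
print(pairwise_hinge(anchor, [1.0, 0.0], [0.0, 1.0]))  # 0.0
# Roles swapped: the negative scores higher, so the loss is large.
print(pairwise_hinge(anchor, [0.0, 1.0], [1.0, 0.0]))  # 2.0
```

Because cosine similarity lies in [-1, 1], a margin of 1.0 is demanding; a smaller margin is often a more practical choice in this setup. The same functions work unchanged for 200-dimensional lists.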

TensorFlow for R

With Keras, the loss object is created with Hinge() and applied as loss(y_true, y_pred). With PyTorch, the loss comes from the nn module (loss = nn.…).

Huber loss behaves like MAE when the loss is large and like MSE when the loss is small, so it combines the strengths of both. For that reason it is also called Smooth Absolute Loss. The switch between MSE and MAE is set by δ, which makes the loss tolerant of outliers.
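The switch controlled by δ is easy to see in code. This is a minimal sketch of the standard Huber formula for a single prediction, in plain Python; the function name and the default `delta=1.0` are choices made for the example.

```python
def huber(y_true, y_pred, delta=1.0):
    """Quadratic (MSE-like) for small errors, linear (MAE-like) for large ones."""
    error = abs(y_true - y_pred)
    if error <= delta:
        return 0.5 * error ** 2
    return delta * (error - 0.5 * delta)


print(huber(0.0, 0.5))             # 0.125  (quadratic region)
print(huber(0.0, 3.0))             # 2.5    (linear region)
print(huber(0.0, 3.0, delta=2.0))  # 4.0    (larger delta, later switch)
```

The two branches agree at error = delta, so the loss stays continuous at the switch point.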

Hinge Loss and Square Hinge loss

Note: when using the categorical_crossentropy loss, your targets should be in categorical format. A loss can be passed to compile by name, as in compile(loss='mean_squared_error', optimizer='sgd'), or taken from the losses module after from keras import losses.

Machine Learning Methods, Loss Functions (Part 3): Hinge Loss

The goal is to use the cosine similarity of the two tensors as a scoring function and train the model with the pairwise hinge loss.

Hinge loss and SVM. In scikit-learn, the classifier is imported with from sklearn.svm import SVC, and hinge_loss(y_true, pred_decision, *, labels=None, sample_weight=None) is available for evaluation. Elementwise, the hinge term is maximum(0, 1 - y_true * y_pred). Keras also computes the squared hinge loss between y_true and y_pred. For the categorical hinge, the output of your model should have no activation function, so that it produces logits that the categorical hinge can use.

y_true has shape = [batch_size, d0, .. dN], except for sparse loss functions such as sparse categorical crossentropy, where shape = [batch_size, d0, .. dN-1].

Cross-entropy is provided in Keras by setting the parameter loss='binary_crossentropy' when compiling the model. Besides the loss argument, Keras offers the add_loss() API. Ranking loss functions are used for metric learning.

Hinge losses for maximum-margin classification

Note that the categorical hinge was added recently (2-3 weeks ago), so it's quite new and probably not many people have tested it.

Formula: loss <- mean(maximum(1 - y_true * y_pred, 0), axis=-1). y_true values are expected to be -1 or 1.
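The formula above averages over the last axis, so each sample (row) gets one loss value. The sketch below spells that out in plain Python and places the squared variant next to it for comparison; it assumes labels already in {-1, +1} and uses nested lists in place of tensors. Both function names are illustrative.

```python
def hinge_per_sample(y_true, y_pred):
    """mean(maximum(1 - y_true * y_pred, 0), axis=-1), one value per row."""
    return [
        sum(max(1.0 - t * p, 0.0) for t, p in zip(row_t, row_p)) / len(row_t)
        for row_t, row_p in zip(y_true, y_pred)
    ]


def squared_hinge_per_sample(y_true, y_pred):
    """Same as above, but each hinge term is squared before averaging."""
    return [
        sum(max(1.0 - t * p, 0.0) ** 2 for t, p in zip(row_t, row_p)) / len(row_t)
        for row_t, row_p in zip(y_true, y_pred)
    ]


y_true = [[-1.0, 1.0], [-1.0, -1.0]]
y_pred = [[0.5, 0.5], [-2.0, 1.0]]

print(hinge_per_sample(y_true, y_pred))          # [1.0, 1.0]
print(squared_hinge_per_sample(y_true, y_pred))  # [1.25, 2.0]
```

Both rows have the same hinge loss, but the second row contains one large violation, which the squared variant penalizes more heavily.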

Another question: "I have a neural net with several layers that does some stuff, and I want an SVM at the end."

The loss function (also called the objective function or optimization score function) is one of the two parameters required to compile a model, for example compile(loss=losses.mean_squared_error, optimizer='sgd'). You can pass the name of an existing loss function, or a TensorFlow/Theano symbolic function.

Output: the code first prints the training and testing loss, then draws line plots of hinge loss and classification accuracy over training epochs on the two-circles binary classification problem.

For example, if you have 10 classes, the target for each sample should be a 10-dimensional vector that is all zeros except for a 1 at the index corresponding to the class of the sample.
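The 10-class example can be reproduced with a few lines of plain Python. Keras ships its own utility for this conversion (to_categorical, to my knowledge); the helper below, `to_one_hot`, is a hypothetical stand-in written only to show what the categorical format looks like.

```python
def to_one_hot(labels, num_classes):
    """Turn integer class labels into one-hot target vectors."""
    vectors = []
    for label in labels:
        row = [0.0] * num_classes
        row[label] = 1.0   # a single 1 at the index of the sample's class
        vectors.append(row)
    return vectors


targets = to_one_hot([3, 0], num_classes=10)
print(targets[0])  # [0.0, 0.0, 0.0, 1.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0]
print(targets[1])  # [1.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0]
```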

Hinge loss, also known as max-margin loss, is a loss function that is particularly useful for training models in binary classification problems. If binary (0 or 1) labels are provided, they are converted to -1 or 1. Hinge metrics are likewise provided for maximum-margin classification. Loss functions are an important part of any neural network training process because they help the network minimize the error.
