Kubernetes nginx load balancer

Prerequisites. In traditional cloud environments, where network load balancers are available on demand, a single Kubernetes manifest suffices to provide a single point of contact to the Ingress-Nginx Controller for external clients and, indirectly, for any application running inside the cluster. Make sure you have enough memory and storage to create a new virtual machine and have virtual ...

Kubernetes provides three types of external load balancing: NodePort, LoadBalancer, and Ingress. Let’s take a look at what that looks like. Ingress controllers come in many types, including Google Cloud Load Balancer, Nginx, Contour, Istio, and so on. There are also various plugins, such as cert-manager[5], which can, for your ...

When creating a Service, you have the option of automatically creating a cloud load balancer. This page shows how to create an external load balancer. There needs to be some external load balancer functionality in ... To address this, Kubernetes v1.29 introduces a new (alpha) ... For load balancer IP, select an unused IP within the AKS Service CIDR (to be ... MetalLB is a handy tool for bare-metal or on-premise clusters thanks to its failover mechanism.

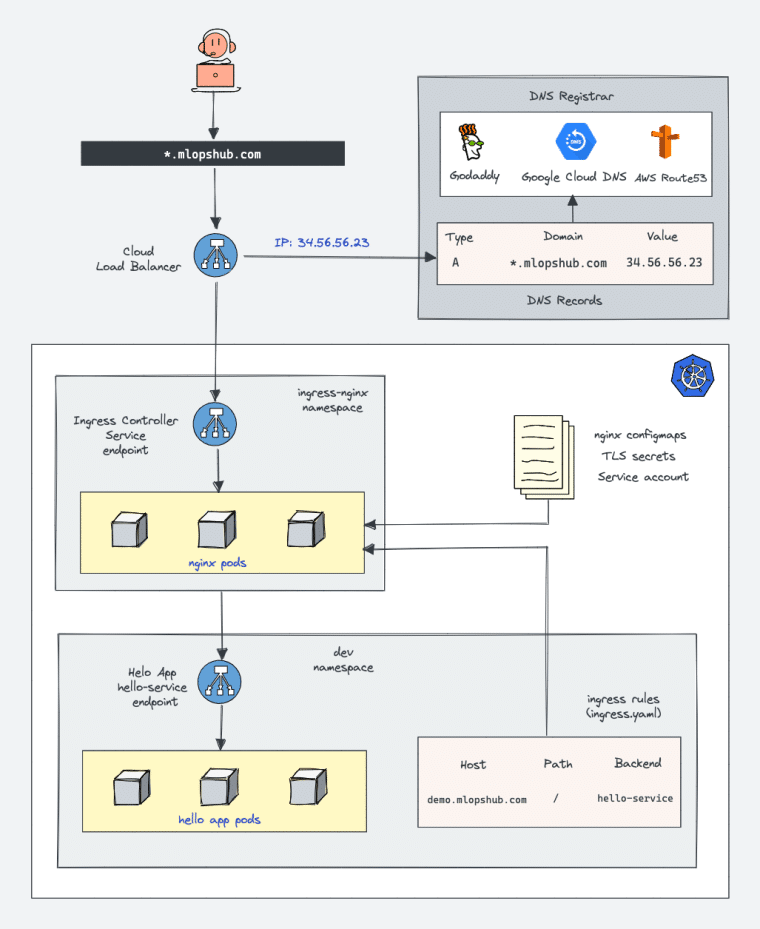

Deploy a Simple Nginx App with Kubernetes Cluster

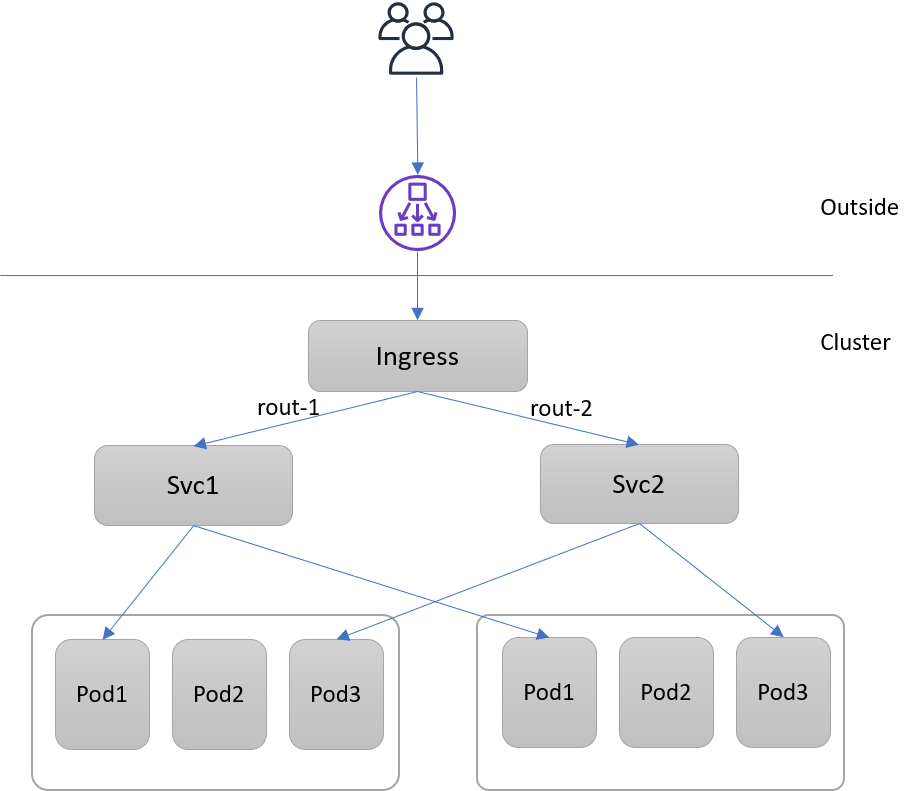

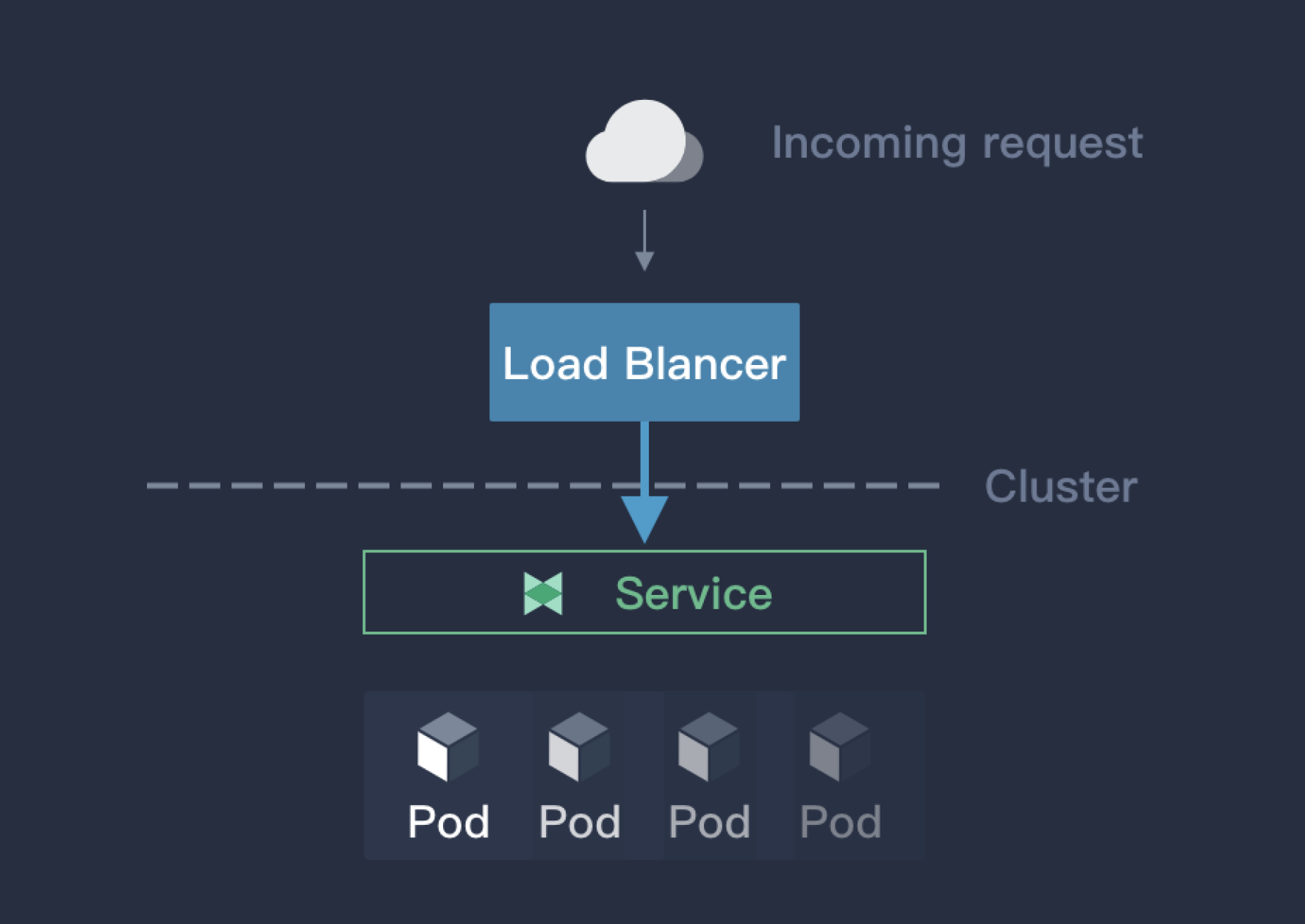

In this tutorial, we will look at two of these mechanisms: ingresses and load balancers. Load balancing traffic across your Kubernetes nodes. You can turn the “hard option” into the “easy option” with ... The PROXY protocol enables an L4 load balancer to communicate the original client IP to the backend. You will create an environment where AKS egress traffic goes through an HTTP proxy server.

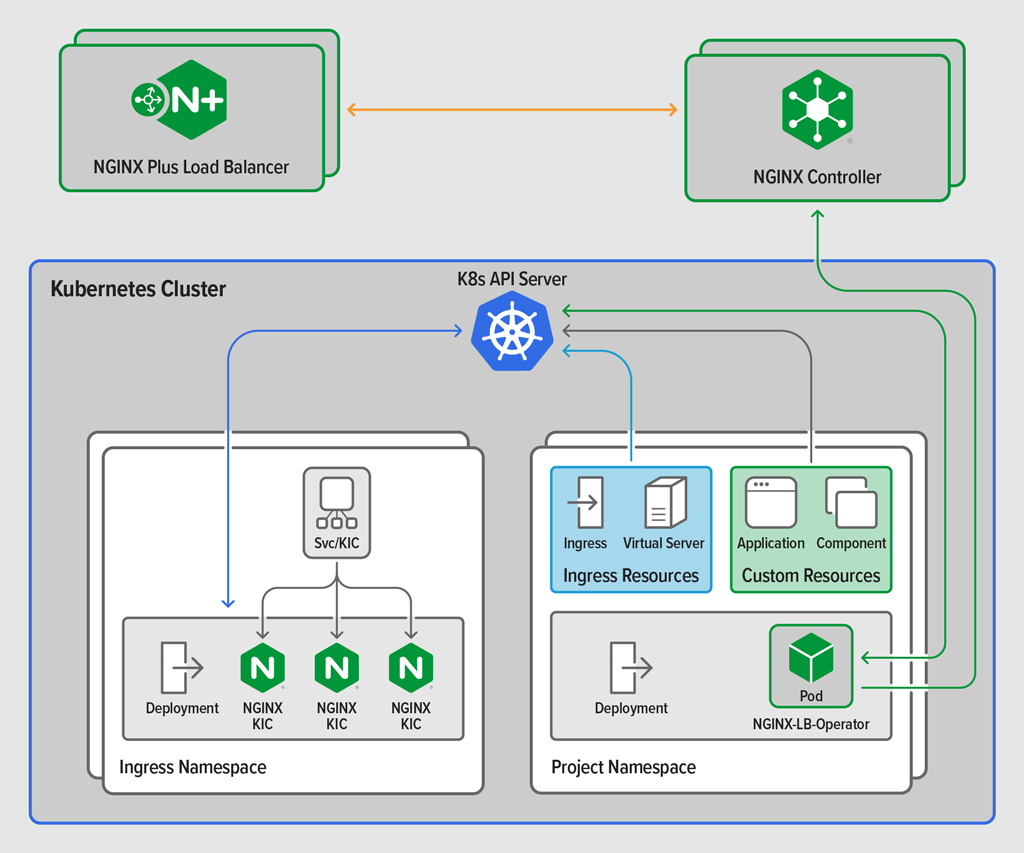

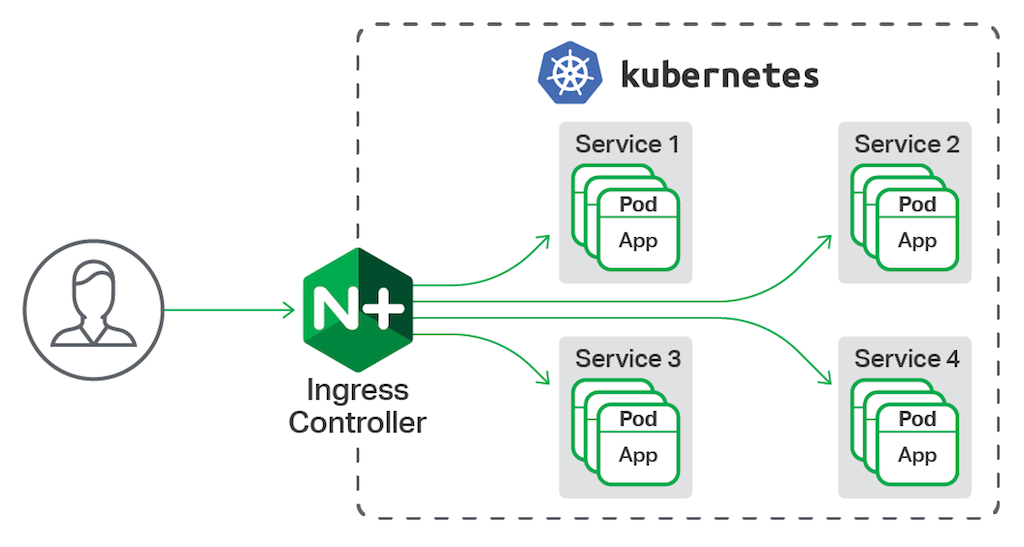

Deploy demo using Terraform. Install AKS Arc, and provide a range of virtual IP addresses for the load balancer during the network configuration step. The backends must be secured by restricting ... If you have multiple Kubernetes clusters, it’s ideal to route traffic to both clusters at the same time. AWS provides the documentation on how to use Network load balancing on ...

They redistribute the traffic load of the cluster, providing an ... Load balancers are in charge of redirecting incoming network traffic to the node hosting the pods. Nginx can be configured as a load balancer to distribute incoming traffic across several backend servers. You can now use a load balancer such as MetalLB in tandem with nginx-ingress to create a single point of access to the services in your cluster.

The Kubernetes network model. When the configuration is applied to your cluster, Linode NodeBalancers will be created and added to your Kubernetes cluster. I have a question about the nginx ingress controller. The previous section covered how to equally distribute load across several virtual servers. The provided templates illustrate the setup for the legacy in-tree service load balancer for AWS NLB. hostname - Hostname is set for load-balancer ingress points that are DNS based (typically AWS load-balancers).

NGINX-LB-Operator relies on a number of Kubernetes and NGINX technologies, so I’m providing a quick review to get us all on the same page. After deploying the Backend Services, you need to configure the nginx Kubernetes Service to use the proxy protocol and tls-passthrough. For this to work, you need to configure both the DigitalOcean Load Balancer and Nginx.
What we’re going to do in our demo, coming right up, is build an NGINX load balancer for Kubernetes services. Noticed in your controller... As there are different ingress controllers that can do this job, it’s important to choose the right one for the type of traffic and load ...

Load Balancing TCP and UDP Traffic in Kubernetes with NGINX

Bare-metal considerations

To run all the examples below, make sure to have ...

Dynamic A/B Kubernetes Multi-Cluster Load Balancing and

According to the documentation, this setup creates two load balancers, one external and one internal, in case you want to expose some applications to the internet and others only inside your VPC in the same k8s cluster. You need high-performance load balancing for applications that are based on TCP or UDP.
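As a rough sketch of the external-plus-internal setup described above, the following Helm values assume the community ingress-nginx chart and an AWS cluster; neither is confirmed by the text, and the file name is illustrative. The annotation is provider-specific, so other clouds would need a different one.

```yaml
# values.yaml (illustrative name) for the ingress-nginx Helm chart.
# Assumption: the controller is installed from the community ingress-nginx chart.
controller:
  service:
    # Keep the default, internet-facing LoadBalancer Service.
    external:
      enabled: true
    # Additionally create a second, internal-only LoadBalancer Service.
    internal:
      enabled: true
      annotations:
        # Assumption: AWS. This annotation asks for an internal load balancer;
        # other providers use their own annotation here.
        service.beta.kubernetes.io/aws-load-balancer-internal: "true"
```

Setting external.enabled to false in the same values would leave only the internal load balancer.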

Your manifest might then look like: apiVersion: v1 kind: . In Part 1, we explored Service and Ingress resource types that define two ways to control the inbound traffic in a Kubernetes cluster. As you open network ports to pods, Azure automatically configures the necessary network security group rules. This means you do .

Concepts

In support of frequent app releases, NGINX custom .

To create an external load balancer, add the following line to your Service manifest: type: LoadBalancer. This field specifies how the load balancer IP behaves and can be specified only when the .
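A minimal sketch of a Service manifest with that line in place might look as follows. The Service name, the app label, and the port numbers are hypothetical and would need to match your own workload.

```yaml
apiVersion: v1
kind: Service
metadata:
  name: nginx-lb            # hypothetical name
spec:
  type: LoadBalancer        # asks the cloud provider for an external load balancer
  selector:
    app: nginx              # assumed label on the Pods to expose
  ports:
    - name: http
      protocol: TCP
      port: 80              # port exposed by the load balancer
      targetPort: 80        # container port on the selected Pods
```

On a cluster without a load balancer implementation (for example bare metal), the external IP of such a Service typically stays pending.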

Exposing Kubernetes Applications, Part 3: NGINX Ingress Controller

This is especially common when organizations need to scale ... The Exposing Kubernetes Applications series focuses on ways to expose applications running in a Kubernetes cluster for external access. Feature state: Kubernetes v1.19 [stable]. An API object that manages external access to the ... Restart nginx: sudo service nginx restart. These annotations are made ... We also recommend that you enable the PROXY Protocol for both the NGINX Plus Ingress Controller and your NLB target ... Two values are possible for ... By default, Amazon EKS uses Classic Load Balancer for Kubernetes services of type LoadBalancer.
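To request a Network Load Balancer instead of the default Classic Load Balancer, one commonly used approach is an annotation on the Service. The sketch below assumes the legacy in-tree AWS integration; the Service name and selector are hypothetical. PROXY protocol still has to be enabled separately on both the load balancer and the ingress controller, which is not shown here.

```yaml
apiVersion: v1
kind: Service
metadata:
  name: nginx-ingress       # hypothetical name
  annotations:
    # Assumption: legacy in-tree AWS cloud provider; requests an NLB
    # instead of the default Classic Load Balancer.
    service.beta.kubernetes.io/aws-load-balancer-type: "nlb"
spec:
  type: LoadBalancer
  selector:
    app: nginx-ingress      # assumed label on the ingress controller Pods
  ports:
    - name: http
      port: 80
      targetPort: 80
    - name: https
      port: 443
      targetPort: 443
```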

How To Set Up Nginx Load Balancing

There is a difference between an ingress rule (Ingress) and an ingress controller. To enable active health checks: in the location that passes requests (proxy_pass) to an upstream group, include the health_check directive. Internally, you can use a ClusterIP service to direct traffic to pods, ensuring smooth operation even if pods are replaced in a Deployment. ... .ip field is also specified.
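The internal ClusterIP case can be sketched with a minimal Service manifest. The name, label, and ports below are hypothetical; the point is that clients inside the cluster address the stable Service rather than individual Pods, so replaced Pods are picked up through the label selector.

```yaml
apiVersion: v1
kind: Service
metadata:
  name: backend             # hypothetical name
spec:
  type: ClusterIP           # the default type; reachable only inside the cluster
  selector:
    app: backend            # assumed label set by the Deployment's Pod template
  ports:
    - protocol: TCP
      port: 80              # port clients inside the cluster connect to
      targetPort: 8080      # assumed container port
```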

Installation Guide

Before you begin. If you rename or otherwise modify these resources in the control panel, you may render them unusable to the cluster or cause the reconciler to . You want to minimize the amount of change when migrating an existing .

GitHub

Every Pod in a cluster gets its own unique cluster-wide IP address. You can now use a load balancer such as MetalLB in tandem with nginx-ingress to create a single point ... So, technically, nginx ingress controller and LoadBalancer type ... Add the ingress-nginx repository and use Helm to deploy an NGINX ingress controller. Hi, I’m relatively new to Kubernetes.
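For the MetalLB option, a minimal layer 2 configuration might look like the sketch below. It assumes a MetalLB release that is configured through custom resources (metallb.io/v1beta1) and installed in the metallb-system namespace; the pool name and the address range are hypothetical and must be replaced with unused addresses on your own network.

```yaml
apiVersion: metallb.io/v1beta1
kind: IPAddressPool
metadata:
  name: example-pool              # hypothetical name
  namespace: metallb-system       # assumed install namespace
spec:
  addresses:
    - 192.168.1.240-192.168.1.250 # hypothetical range of free LAN addresses
---
apiVersion: metallb.io/v1beta1
kind: L2Advertisement
metadata:
  name: example-l2                # hypothetical name
  namespace: metallb-system
spec:
  ipAddressPools:
    - example-pool                # announce addresses from the pool above
```

With this in place, the LoadBalancer Service created for the NGINX ingress controller would be assigned an address from the pool, giving the cluster one external entry point.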

Adding Linode NodeBalancers to your Kubernetes Cluster. The Ingress resource supports the following features: Content-based routing: ... As we discuss in Deploying BIG-IP and NGINX Ingress Controller in the Same Architecture, many organizations have use cases that benefit from deploying an external load balancer with an Ingress controller (or in most cases, multiple Ingress controller instances). You create an ingress resource, it creates the HTTP/S load balancer.

Kubernetes Controllers and Operators. The Ingress is a Kubernetes resource that lets you configure an HTTP load balancer for applications running on Kubernetes, represented by one or more Services.
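Content-based routing with an Ingress resource can be illustrated with a short manifest. This is only a sketch: the host name, Service names, and the nginx ingress class are assumptions, and an ingress controller must already be running for the rules to take effect.

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: example-ingress           # hypothetical name
spec:
  ingressClassName: nginx         # assumes an NGINX-based controller with this class
  rules:
    - host: app.example.com       # hypothetical host
      http:
        paths:
          - path: /api            # requests under /api go to the API Service
            pathType: Prefix
            backend:
              service:
                name: api-service # hypothetical Service
                port:
                  number: 80
          - path: /               # everything else goes to the web Service
            pathType: Prefix
            backend:
              service:
                name: web-service # hypothetical Service
                port:
                  number: 80
```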

Create an External Load Balancer

Azure can also manage external DNS configurations for HTTP application routing as new Ingress routes ... We plan to build an on-premise K8s cluster. The issue with the health probe path being set incorrectly to C:/Program Files/Git/healthz instead of /healthz is related to the environment in which the helm ...

Google Kubernetes Engine (GKE) offers integrated support for two types of Cloud Load Balancing for a publicly accessible application: Ingress ... An NGINX Load Balancer for Kubernetes Services. However, load balancers have different characteristics from ingresses. I have quite some knowledge after reading 5 or 6 books on Kubernetes, but I have never built a cluster before. NGINX Plus enables you to easily automate load balancing traffic to multiple Kubernetes active-active clusters. Expanding your Kubernetes cluster for external traffic needs seamless integration with a load balancer. AKS load balances requests to the Kubernetes API server, and manages traffic to application services. Configuration per ingress resource through annotations.

Why Nginx for Kubernetes Load Balancer? You may wonder, why use Nginx for a Kubernetes bare metal Load Balancer? Well, it is free, open-source, and easy.

Kubernetes Ingress with Nginx Example

kubectl apply -f nginx-deployment.
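The manifest applied by that command is not shown in the text. A minimal sketch of what such a Deployment might contain is given below; the resource name, labels, replica count, and image tag are all assumptions made for illustration.

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-deployment          # hypothetical name
spec:
  replicas: 2                     # assumed replica count
  selector:
    matchLabels:
      app: nginx                  # must match the Pod template labels below
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
        - name: nginx
          image: nginx:stable     # assumed image tag
          ports:
            - containerPort: 80   # port the NGINX container listens on
```

A Service or Ingress that selects the app: nginx label would then route traffic to these Pods.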

NGINX Ingress Controller + front F5 Load Balancer

Install and configure Kubernetes cluster master nodes using kubeadm - Part 2. Load balancers in Kubernetes have quite a bit of overlap with ingresses. This is because they are primarily used to expose services to the internet, which, as we saw above, is also a feature of ingresses. Ingress NGINX Controller. Workloads and Services. The cert-manager tool creates a Transport Layer Security (TLS) certificate from the Let’s Encrypt certificate authority (CA) providing ... To add an external load balancer to your Kubernetes cluster you can add the example lines to a new configuration file, or more commonly, to a Service file. To solve this problem, organizations usually choose an external hardware or virtual load balancer or a ...

The NGINX Plus Split Clients module, key-value store, rate limits, and other security controls give Kubernetes platform and DevOps engineers enterprise-class traffic management controls, providing real-time dynamic ratio load ... In addition to the cluster’s Resources tab, cluster resources (worker nodes, load balancers, and volumes) are also listed outside the Kubernetes page in the DigitalOcean Control Panel. It provides a great option for ... Then it checks ingress rules and distributes the load. ... ipMode field for a Service. But when all of your pods are equally healthy, the load will be distributed equally.

The application routing add-on supports two ways to configure ingress controllers and ingress objects: configuration of the NGINX ingress controller, such as creating multiple controllers, configuring private load balancers, and setting static IP addresses. Run this command to apply nginx-deployment... This post shows how to use NGINX Plus as an advanced Layer 7 load-balancing solution for exposing Kubernetes services to the Internet, whether you are running Kubernetes ... We discussed handling of these resource types via Service and ... If you’d like to see more detail about your deployment, you ...
You will use MITM-Proxy as an HTTP ... This provides an externally-accessible IP address that sends traffic to the correct port on your cluster nodes, provided your cluster runs in a ...

Services, Load Balancing, and Networking

... yaml that you enabled internal setup. Kubernetes facilitates load balancing in two primary ways: internally within the cluster, and externally for accessing applications from outside the cluster. ingress-nginx is an Ingress controller for ...

NGINX Plus can periodically check the health of upstream servers by sending special health-check requests to each server and verifying the correct response. Such a load balancer is necessary to deliver those applications to clients outside of the Kubernetes cluster. Nginx Ingress Controller. A Better Solution for On-Premises TCP Load Balancing: NGINX Loadbalancer for Kubernetes.

As long as you have all of the virtual private servers in place, you should now find that the load balancer begins to distribute visitors to the linked servers equally. Bare-metal environments lack this commodity, ...

Creating a Kubernetes load balancer on Azure simultaneously sets up the corresponding Azure load balancer resource. ip - IP is set for load-balancer ingress points that are IP based (typically GCE or OpenStack load-balancers). Ingress can be imported using its namespace and name: ... The NGINX Ingress Controller uses Linode NodeBalancers, which are Linode’s load balancing service, to route a Kubernetes Service’s traffic to the appropriate backend Pods over HTTP and HTTPS. If you want just one internal load balancer, try to set up your controller. Externally, you would typically use services of ... However, there are many ...