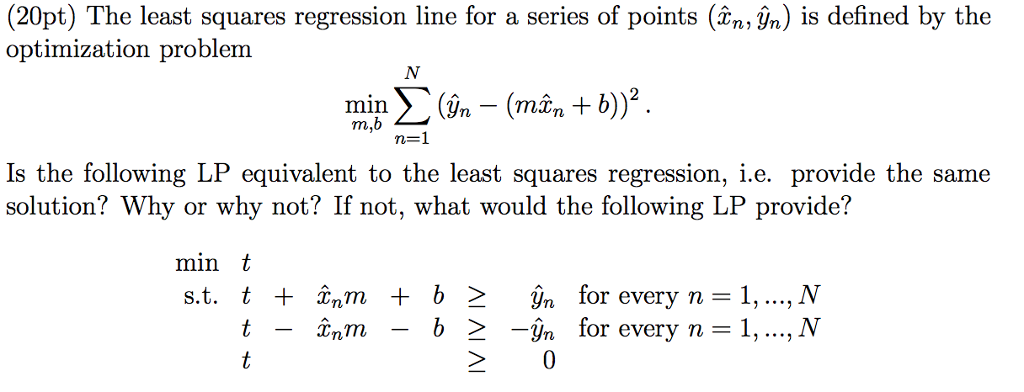

Least squares optimization with L1 regularization

Corpus ID: 182318735. The authors first review linear … Our work is inspired by the work in [4] and by the continuation idea; the paper introduces a continuation technique to increase the convergence rate.

Version Beta (Apr 2008): Kwangmoo Koh, Seung-Jean Kim, and Stephen Boyd.

SciPy's `scipy.optimize.leastsq` minimizes the sum of squares of a set of equations, `x = arg min(sum(func(y)**2, axis=0))`, where the minimum is taken over `y`.

In this numerical simulation study, we consider the task of optimizing stimulation currents in the multi-channel version of Transcranial Electrical Stimulation …

"Least Squares Optimization with L1-Norm Regularization", by Mark Schmidt. L1 regularization is effective for feature selection, but the resulting optimization is challenging because the 1-norm is not differentiable. Subgradient descent methods are also quite easy to implement.

NumPy's `numpy.linalg.lstsq` returns the least-squares solution to a linear matrix equation.

"Solving L1 Regularized Joint Least Squares and Logistic Regression."
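The remark that subgradient descent methods are easy to implement can be illustrated with a minimal NumPy sketch. It assumes the objective $\tfrac12\|Ax-b\|_2^2 + \lambda\|x\|_1$ and diminishing steps $t_k = t_0/\sqrt{k+1}$; the function name `lasso_subgradient` and the default $t_0 = 1/\|A\|_2^2$ are illustrative choices, not taken from any of the works cited here. Because a subgradient step can increase the objective, the sketch returns the best iterate seen rather than the last one.

```python
import numpy as np

def lasso_subgradient(A, b, lam, n_iter=5000, t0=None):
    """Subgradient method for 0.5*||Ax - b||_2^2 + lam*||x||_1 (illustrative sketch)."""
    A = np.asarray(A, dtype=float)
    b = np.asarray(b, dtype=float)
    if t0 is None:
        # Scale steps by 1 / ||A||_2^2, the largest eigenvalue of A^T A.
        t0 = 1.0 / np.linalg.norm(A, 2) ** 2

    def objective(x):
        r = A @ x - b
        return 0.5 * r @ r + lam * np.abs(x).sum()

    x = np.zeros(A.shape[1])
    best_x, best_f = x.copy(), objective(x)
    for k in range(n_iter):
        # np.sign(0) == 0 is a valid subgradient of |x_j| at x_j = 0.
        g = A.T @ (A @ x - b) + lam * np.sign(x)
        x = x - (t0 / np.sqrt(k + 1)) * g  # diminishing step t_k = t0 / sqrt(k + 1)
        f = objective(x)
        if f < best_f:  # steps are not monotone, so keep the best iterate
            best_x, best_f = x.copy(), f
    return best_x, best_f

x, f = lasso_subgradient(np.eye(3), [3.0, 0.5, -2.0], lam=1.0)
```

With $A = I$ the minimizer is the soft-thresholded vector $\mathrm{sign}(b_j)\max(|b_j| - \lambda, 0)$, here `[2, 0, -1]`, so the result is easy to check. Convergence of the subgradient method is slow in general, which is the usual trade-off for its simplicity.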

Convex optimization

In this paper we compare … "L1-Norm Penalized Least Squares with SALSA", Ivan Selesnick. Sparse recursive least squares (RLS) adaptive filter algorithms achieve faster convergence and better performance than the standard RLS algorithm under … The proposed problem is a convex, box-constrained, smooth minimization, which allows fast optimization methods to be applied to find its solution.

In a least-squares, or linear regression, problem, we have measurements $A \in \mathbb{R}^{m \times n}$ and $b \in \mathbb{R}^{m}$ and seek a vector $x \in \mathbb{R}^{n}$ such that $Ax$ is close to $b$. … 1 can handle this, although with a caveat, because it has to jump through some hoops to get the underlying solvers to … The proposed structure enables us to effectively recover the proximal point.
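A standard way to obtain a convex, smooth, bound-constrained problem from the $\ell_1$-regularized one is to split $x = u - v$ with $u, v \ge 0$. At the optimum $\min(u_j, v_j) = 0$, so the penalty $\|x\|_1$ can be replaced by the linear term $\mathbf{1}^\top(u+v)$. Gradient projection methods use this split, but it is not necessarily the reformulation proposed in the paper quoted above. The sketch below, with the hypothetical name `lasso_split_pg`, runs plain projected gradient descent on the split problem with step $1/L$, $L = 2\|A\|_2^2$.

```python
import numpy as np

def lasso_split_pg(A, b, lam, n_iter=5000):
    """Projected gradient on the split x = u - v, u >= 0, v >= 0 (illustrative sketch).

    Smooth, bound-constrained problem:
        min 0.5*||A(u - v) - b||^2 + lam*sum(u + v)  s.t.  u >= 0, v >= 0
    """
    A = np.asarray(A, dtype=float)
    b = np.asarray(b, dtype=float)
    n = A.shape[1]
    u, v = np.zeros(n), np.zeros(n)
    # The gradient in (u, v) is Lipschitz with constant 2*||A||_2^2; use step = 1/L.
    step = 1.0 / (2.0 * np.linalg.norm(A, 2) ** 2)
    for _ in range(n_iter):
        g = A.T @ (A @ (u - v) - b)                 # gradient of the quadratic term w.r.t. x
        u = np.maximum(u - step * (g + lam), 0.0)   # d/du = g + lam, then project onto u >= 0
        v = np.maximum(v - step * (-g + lam), 0.0)  # d/dv = -g + lam, then project onto v >= 0
    return u - v

x = lasso_split_pg(np.diag([2.0, 1.0, 0.5]), [3.0, 0.5, -4.0], lam=1.0)
```

For a diagonal $A = \operatorname{diag}(d)$ the problem separates, so the answer can be checked by hand: each coordinate is $\mathrm{sign}(d_j b_j)\max(|d_j b_j| - \lambda, 0)/d_j^2$, which gives `[1.25, 0, -4]` here.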

Iteratively reweighted least squares

In Section 2 we introduce the reader to the … Solve least-squares (curve-fitting) problems. Some quick and dirty approaches: my Matlab toolbox CVX 2… Models for such data sets are nonlinear in their coefficients.

Iteratively reweighted least squares (IRLS) is particularly easy to implement if you already have a least-squares solver such as LSQR. Related titles: "A gradient-based optimization algorithm for LASSO"; "An interior point method for large-scale l1 …".

Many optimization problems involve minimizing a sum of squared residuals. RLS is used for two main reasons … where the variable is … and the problem data are … Parameters: `func` (callable). We will take a look at finding the derivatives for least-squares minimization. Closeness is defined as the sum of the squared differences, also known as the squared $\ell_2$-norm, $\|Ax - b\|_2^2$.
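The derivative mentioned above follows from expanding $\|Ax-b\|_2^2 = x^\top A^\top A x - 2 b^\top A x + b^\top b$, which gives the gradient $2A^\top(Ax - b)$. Setting it to zero yields the normal equations $A^\top A x = A^\top b$. The short NumPy check below compares the analytic gradient with central finite differences, and the normal-equations solution with `numpy.linalg.lstsq`, on random data. The helper names are hypothetical.

```python
import numpy as np

# Illustrative helpers (hypothetical names) for f(x) = ||Ax - b||_2^2.
def ls_objective(A, b, x):
    r = A @ x - b
    return r @ r

def ls_gradient(A, b, x):
    # d/dx ||Ax - b||^2 = 2 A^T (Ax - b)
    return 2.0 * A.T @ (A @ x - b)

rng = np.random.default_rng(0)
A = rng.standard_normal((20, 4))
b = rng.standard_normal(20)
x = rng.standard_normal(4)

# Central finite differences as an independent check of the analytic gradient.
eps = 1e-6
fd_grad = np.array([
    (ls_objective(A, b, x + eps * e) - ls_objective(A, b, x - eps * e)) / (2 * eps)
    for e in np.eye(4)
])

# Setting the gradient to zero gives the normal equations A^T A x = A^T b.
x_normal = np.linalg.solve(A.T @ A, A.T @ b)
# lstsq is SVD-based and avoids forming A^T A explicitly.
x_lstsq, *_ = np.linalg.lstsq(A, b, rcond=None)
```

In practice `lstsq` (or another QR- or SVD-based solver) is usually preferred over forming $A^\top A$, because forming $A^\top A$ squares the condition number.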

Least absolute deviations

The `'cauchy'` loss of SciPy's `least_squares` severely weakens the influence of outliers, but may cause difficulties in the optimization process. Regularized least squares (RLS) is a family of methods for solving the least-squares problem while using regularization to further constrain the resulting solution. In such settings, the ordinary least-squares … Before you begin to solve an optimization problem, you must choose the …

This library is a work in progress; contributions are welcome and appreciated! Author: Reuben Feinman (New …

In this study, we analyze and test an improved version of the Iterative … The `'huber'` loss works similarly to `'soft_l1'`. The density matrix least-squares problem arises from the quantum state tomography problem in experimental physics and has many applications in signal processing and machine learning, mainly the phase recovery problem and the matrix completion problem.
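For the least absolute deviations problem $\min_x \sum_i |a_i^\top x - b_i|$, IRLS replaces each absolute value by a weighted square with weight $w_i = 1/|r_i|$ at the current residual $r_i$. The following is a minimal NumPy sketch that rests on several illustrative choices: the name `lad_irls`, the floor `eps` on $|r_i|$, the ordinary least-squares starting point, and the synthetic line-with-outliers data.

```python
import numpy as np

def lad_irls(A, b, n_iter=100, eps=1e-8):
    """Least absolute deviations min_x sum_i |a_i^T x - b_i| via IRLS (illustrative sketch).

    Each pass solves a weighted least-squares problem with w_i = 1 / max(|r_i|, eps),
    so that w_i * r_i**2 equals |r_i| at the current residuals (when |r_i| >= eps).
    """
    A = np.asarray(A, dtype=float)
    b = np.asarray(b, dtype=float)
    x, *_ = np.linalg.lstsq(A, b, rcond=None)  # start from ordinary least squares
    for _ in range(n_iter):
        r = A @ x - b
        sw = np.sqrt(1.0 / np.maximum(np.abs(r), eps))  # eps avoids division by zero
        # Weighted LS: scale row i of A and entry i of b by sqrt(w_i).
        x, *_ = np.linalg.lstsq(A * sw[:, None], b * sw, rcond=None)
    return x

# Synthetic data (illustrative): the line y = 2t + 1 with every 10th point shifted by +30.
t = np.linspace(0.0, 10.0, 50)
A = np.column_stack([t, np.ones_like(t)])
y = 2.0 * t + 1.0
y[::10] += 30.0
slope, intercept = lad_irls(A, y)
```

On this data ordinary least squares is pulled toward the shifted points. The IRLS estimate should land very close to slope 2 and intercept 1, because most points lie exactly on that line.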

The equation may be under-, well-, or over-determined (i.e., the number of linearly independent rows of the matrix can be less than, equal to, or greater than its number of linearly independent columns).

Linear least squares. I'm aware of `curve_fit` from `scipy.optimize`. Structure of this article: Part 1, the concepts and theory underlying the NLS regression model. For solving least-squares RTM with L1-norm regularisation, the inversion is reformulated as a ‘basis pursuit denoise (BPDN)’ problem and is solved directly using an algorithm called ‘spectral projected gradient for L1 minimisation (SPGL1)’. In this paper, we first reformulate the density matrix least …

Given the residuals $f(x)$ (an $m$-dimensional function of $n$ variables) and the loss function $\rho(s)$ (a scalar function), `least_squares` finds a local minimum of the cost function $F(x) = \frac{1}{2}\sum_{i=0}^{m-1} \rho\left(f_i(x)^2\right)$. Seung-Jean Kim, Kwangmoo Koh, Michael Lustig, Stephen Boyd, and Dimitry Gorinevsky. We can rewrite each row of our approximation vector as:

One way to transform this problem into an ordinary least-squares (OLS) problem is … We have our main problem with a Hadamard product:

$$\arg\min_{\alpha, \beta} \|y - \bar{y}\|_2^2, \qquad (1)$$

where $\bar{y} = A\alpha \odot B\beta$ is our approximation vector. The `scipy.optimize` library, however, only works for the objective of least squares, i.e. …

In least-squares problems, we usually have $m$ labeled observations $(x_i, y_i)$. Least-squares problems fall into two categories, linear (ordinary) least squares and nonlinear least squares, depending on whether or not the residuals are linear in all … Different algorithms exist for hyperbolic penalty function (HPF) optimization and for L1/L2 hybrid optimization problems. L1 is a more difficult, non-differentiable optimization problem.
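For the Hadamard-product problem (1), one way to obtain OLS subproblems is to alternate between the two parameter vectors. This approach is assumed here; the original transformation is not recoverable from this text. With $\beta$ fixed, $\bar{y} = \operatorname{diag}(B\beta)\,A\alpha$ is linear in $\alpha$, and with $\alpha$ fixed it is linear in $\beta$. The sketch below (hypothetical name `hadamard_als`, synthetic data) solves each half-step with `numpy.linalg.lstsq`. As a result, the squared residual recorded in `history` cannot increase from one sweep to the next.

```python
import numpy as np

def hadamard_als(A, B, y, beta0, n_iter=50):
    """Alternating least squares for min ||y - (A @ alpha) * (B @ beta)||_2^2 (illustrative sketch)."""
    beta = np.asarray(beta0, dtype=float)
    history = []
    for _ in range(n_iter):
        # beta fixed: y_bar = diag(B @ beta) @ A @ alpha is linear in alpha, an OLS problem.
        alpha, *_ = np.linalg.lstsq((B @ beta)[:, None] * A, y, rcond=None)
        # alpha fixed: y_bar = diag(A @ alpha) @ B @ beta is linear in beta.
        beta, *_ = np.linalg.lstsq((A @ alpha)[:, None] * B, y, rcond=None)
        history.append(float(np.sum((y - (A @ alpha) * (B @ beta)) ** 2)))
    return alpha, beta, history

# Synthetic data (illustrative) generated from known alpha and beta.
rng = np.random.default_rng(1)
A, B = rng.standard_normal((30, 3)), rng.standard_normal((30, 2))
y = (A @ np.array([1.0, -2.0, 0.5])) * (B @ np.array([0.3, 1.5]))
alpha, beta, history = hadamard_als(A, B, y, beta0=rng.standard_normal(2))
```

Only the product is identifiable: replacing $(\alpha, \beta)$ by $(c\alpha, \beta/c)$ for any $c \ne 0$ leaves $\bar{y}$ unchanged. The result may also depend on `beta0`, since alternating minimization is not guaranteed to reach the global minimum.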

Least Squares Optimization With L1-Norm Regularization

This section has some math … Least-squares regression with an L1 regularization term is better known as LASSO regression. Any library recommendations would be much appreciated. Journal of Computational and Graphical Statistics, 17(4):994-1009, 2008. There are multiple methods to compute the LASSO [1], including coordinate descent, least-angle regression, and proximal methods.
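Of the methods listed, coordinate descent is perhaps the simplest to write down. The sketch below assumes the objective $\tfrac12\|Ax-b\|_2^2 + \lambda\|x\|_1$ and cycles through the coordinates, setting each one to its exact minimizer. That minimizer is $x_j = S(a_j^\top r_j, \lambda)/\|a_j\|_2^2$, where $S$ is soft thresholding and $r_j$ is the residual with coordinate $j$ removed. The function names and the fixed number of sweeps are illustrative and are not taken from reference [1].

```python
import numpy as np

def soft_threshold(z, t):
    """Soft thresholding S(z, t) = sign(z) * max(|z| - t, 0)."""
    return np.sign(z) * np.maximum(np.abs(z) - t, 0.0)

def lasso_cd(A, b, lam, n_sweeps=500):
    """Cyclic coordinate descent for 0.5*||Ax - b||_2^2 + lam*||x||_1 (illustrative sketch)."""
    A = np.asarray(A, dtype=float)
    b = np.asarray(b, dtype=float)
    n = A.shape[1]
    x = np.zeros(n)
    r = b.copy()                      # residual b - A @ x, kept up to date
    col_sq = np.sum(A ** 2, axis=0)   # ||a_j||_2^2 for each column
    for _ in range(n_sweeps):
        for j in range(n):
            if col_sq[j] == 0.0:
                continue              # an all-zero column leaves x_j at 0
            # a_j^T r_j, where r_j is the residual with coordinate j removed.
            rho = A[:, j] @ r + col_sq[j] * x[j]
            x_new = soft_threshold(rho, lam) / col_sq[j]
            r -= A[:, j] * (x_new - x[j])
            x[j] = x_new
    return x

x = lasso_cd(np.eye(3), [3.0, 0.5, -2.0], lam=1.0)
```

A production implementation would stop on a convergence test, such as the largest coordinate change, rather than after a fixed number of sweeps. It would also typically warm-start along a decreasing sequence of $\lambda$ values.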

We review two popular state-of-the-art algorithms to solve the LASSO (L1-regularized …). A standard tool for dealing with sparse recovery is the $\ell_1$-regularized least-squares approach, which has recently been attracting the attention of …
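For reference, the $\ell_1$-regularized least-squares problem and its optimality conditions are given below, where $a_j$ is the $j$-th column of $A$. This form places a factor $\tfrac12$ on the quadratic term, a convention assumed here; some of the cited works omit it, which rescales $\lambda$ by a factor of 2. The conditions follow from requiring $0 \in A^\top(Ax^\star - b) + \lambda\,\partial\|x^\star\|_1$:

$$
\min_{x \in \mathbb{R}^n} \; \tfrac{1}{2}\|Ax - b\|_2^2 + \lambda \|x\|_1 ,
$$

$$
a_j^\top (b - A x^\star) = \lambda \,\operatorname{sign}(x^\star_j) \ \text{ if } x^\star_j \neq 0, \qquad
\left|a_j^\top (b - A x^\star)\right| \le \lambda \ \text{ if } x^\star_j = 0 .
$$

In particular, $x^\star = 0$ is optimal whenever $\lambda \ge \|A^\top b\|_\infty$.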

Least Squares Optimization

Least-squares optimization (LSO), one of the unconstrained optimization problems, uses the residual sum of squares (RSS) as the objective function. Nonlinear least squares (NLS) is an optimization technique that can be used to build regression models for data sets that contain nonlinear features. Further, it is shown that the property that the dual of the dual is the primal holds for the L1-regularized … l1_ls is a Matlab implementation of the interior-point method for ℓ1-regularized least squares described in the paper "A Method for Large-Scale l1-Regularized Least Squares". … (e.g., signal and image processing, compressive sensing, statistical inference).

Residuals after the initial least-squares adjustment ($\nu_{LS}$), minimum L1 norm by the iterative procedure ($\nu_{L1i}$), and minimum L1 norm by the global optimization method …

"Iteratively Reweighted Least Squares Algorithms for L1-Norm Principal Component Analysis": Young Woong Park, Cox School of Business, Southern Methodist University, Dallas, Texas 75225. This project surveys and examines optimization approaches proposed for parameter estimation in least-squares linear regression models with an L1 penalty on the …

Because diffractors are discontinuous and sparsely distributed, a least-squares diffraction-imaging method is formulated by solving a hybrid L1-L2 norm minimization problem that imposes a sparsity constraint on diffraction images.
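For the nonlinear least squares (NLS) models described above, a classical solver is Gauss-Newton. It repeatedly linearizes the residuals and solves a linear least-squares problem for the step. The sketch below is a minimal version with step halving as a safeguard. The helper names, the exponential model $y = a e^{kt}$, and the starting point are illustrative assumptions.

```python
import numpy as np

def gauss_newton(residual, jacobian, p0, n_iter=50):
    """Gauss-Newton with step halving for min_p ||r(p)||_2^2 (illustrative sketch)."""
    p = np.asarray(p0, dtype=float)
    for _ in range(n_iter):
        r, J = residual(p), jacobian(p)
        # Linearize r(p + d) ~ r + J d and solve the linear least-squares problem for d.
        d, *_ = np.linalg.lstsq(J, -r, rcond=None)
        cost, step = r @ r, 1.0
        while step > 1e-10:
            r_new = residual(p + step * d)
            if r_new @ r_new < cost:  # accept the first step that lowers the cost
                break
            step *= 0.5
        else:
            break  # no decrease possible: treat as converged
        p = p + step * d
    return p

# Exponential model y = a * exp(k * t); true parameters a = 2, k = -0.5 (illustrative data).
t = np.linspace(0.0, 4.0, 20)
y = 2.0 * np.exp(-0.5 * t)

def residual(p):
    return p[0] * np.exp(p[1] * t) - y

def jacobian(p):
    e = np.exp(p[1] * t)
    return np.column_stack([e, p[0] * t * e])  # columns: dr/da, dr/dk

p_hat = gauss_newton(residual, jacobian, p0=[1.5, -0.2])
```

Gauss-Newton converges quickly on small zero-residual problems like this one when started nearby. Levenberg-Marquardt damping, as in `scipy.optimize.least_squares(method='lm')`, is a common and more robust alternative when the starting point is poor.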

Three numerical examples demonstrate the effectiveness of the method, which can mitigate artefacts and … It helps us predict results based on an existing set of data, as well as clear anomalies in our data. … and address the optimization question only. The problems I want to solve are small, approximately 100-200 data points and 4-5 parameters, so it is not a big deal if the algorithm is very slow.

This lecture note describes an iterative optimization algorithm, ‘SALSA’, for solving L1-norm penalized least-squares problems, and the use of SALSA for sparse signal representation and approximation, especially with overcomplete Parseval transforms. The problem of finding sparse solutions to underdetermined systems of linear equations arises in several applications (e.g., …). Solve linear least-squares problems with bounds or linear constraints. The algorithm is applied to $\ell_1$-regularized least-squares problems arising in many applications, including … We have a model that will predict $y_i$ given $x_i$ for some parameters $\beta$, $f(x) = X\beta$.
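SALSA is built on variable splitting and an augmented Lagrangian, the same idea as the alternating direction method of multipliers (ADMM). The sketch below is a generic scaled-form ADMM for $\tfrac12\|Ax-b\|_2^2 + \lambda\|z\|_1$ subject to $x = z$. It illustrates the splitting idea and is not a transcription of the lecture note's SALSA iteration, which, for example, exploits Parseval transforms. The name `lasso_admm` and the penalty parameter `rho` are assumptions.

```python
import numpy as np

def lasso_admm(A, b, lam, rho=1.0, n_iter=1000):
    """Scaled-form ADMM for 0.5*||Ax - b||^2 + lam*||z||_1 s.t. x = z (illustrative sketch)."""
    A = np.asarray(A, dtype=float)
    b = np.asarray(b, dtype=float)
    n = A.shape[1]
    M = A.T @ A + rho * np.eye(n)  # same matrix in every x-update
    Atb = A.T @ b
    z, u = np.zeros(n), np.zeros(n)
    for _ in range(n_iter):
        # x-update: a regularized least-squares solve.
        x = np.linalg.solve(M, Atb + rho * (z - u))
        # z-update: soft thresholding, the proximal operator of (lam/rho)*||.||_1.
        w = x + u
        z = np.sign(w) * np.maximum(np.abs(w) - lam / rho, 0.0)
        # Scaled dual update accumulates the constraint violation x - z.
        u = u + x - z
    return z

z = lasso_admm(np.diag([2.0, 1.0, 0.5]), [3.0, 0.5, -4.0], lam=1.0)
```

Because $A^\top A + \rho I$ does not change between iterations, a real implementation would factor it once (for example with a Cholesky factorization) instead of calling `np.linalg.solve` each time.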

Some light googling turned up this paper that describes a constrained LASSO algorithm (which I'm not otherwise familiar with, besides having stumbled across this paper) for tackling your problem.

The following is a brief review of least-squares optimization and constrained optimization techniques. Among the robust losses offered by SciPy's `least_squares`, with $z$ the squared residual, `'soft_l1'`, $\rho(z) = 2(\sqrt{1+z} - 1)$, is the smooth approximation of the l1 (absolute value) loss; `'huber'` is $\rho(z) = z$ if $z \le 1$ else $2\sqrt{z} - 1$; and `'arctan'` is $\rho(z) = \arctan(z)$.

For the L1 PCA problem of minimizing the fitting error of the reconstructed data, we propose an exact reweighted algorithm and an approximate algorithm based on iteratively reweighted least …
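As an illustration of the robust losses above, the following sketch fits a straight line to synthetic data containing a few gross outliers using `scipy.optimize.least_squares`. It fits once with the default `'linear'` loss and once with `loss='soft_l1'`. The data, the residual function, and `f_scale=1.0` (the soft margin between inlier and outlier residuals) are illustrative choices.

```python
import numpy as np
from scipy.optimize import least_squares

# Synthetic data (illustrative): the line y = 2t + 1 with every 10th point shifted by +30.
t = np.linspace(0.0, 10.0, 50)
y = 2.0 * t + 1.0
y[::10] += 30.0

def residuals(p, t, y):
    """Residual vector f(p) for the model y = slope * t + intercept."""
    slope, intercept = p
    return slope * t + intercept - y

p0 = np.zeros(2)
fit_plain = least_squares(residuals, p0, args=(t, y))  # default loss='linear'
fit_robust = least_squares(residuals, p0, args=(t, y), loss="soft_l1", f_scale=1.0)
print(fit_plain.x, fit_robust.x)
```

The plain fit is dragged toward the shifted points, while the `'soft_l1'` fit should stay close to slope 2 and intercept 1. Non-linear losses are not supported with `method='lm'`, so the default `'trf'` method is used here.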

In this paper we compare state … I assume the reader is familiar with basic … It uses two different forward-modeling operators for reflections and diffractions, and L2 and L1 …

Mathematical statistics

The `'soft_l1'` loss is usually a good choice for robust least squares. A PyTorch library for L1-regularized least squares (lasso) problems.

Methods for L1 regularized regression

`func` should take at least one (possibly length-N vector) argument … Diego Klabjan, Department of Industrial Engineering and Management Sciences, Northwestern University, Evanston, Illinois 60208.

Proximal methods are the subject of ongoing research and have state-of-the-art performance for approximating $\hat{x}_{L1}$. In this paper, an equivalent smooth minimization for the L1-regularized least-squares problem is proposed. This paper introduces a continuation log-barrier method, a second-order method, for solving the $\ell_1$-regularized least-squares problem in the field of compressive sensing. All such algorithms require fine tuning of extra …
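The following is a minimal illustration of a proximal method combined with continuation. It is not the log-barrier method described above. The sketch runs ISTA, a proximal gradient iteration with step $1/L$, $L = \|A\|_2^2$, on a geometrically decreasing sequence of penalties. The sequence starts at $\lambda_{\max} = \|A^\top b\|_\infty$, where the solution is zero, and ends at the target $\lambda$, warm-starting each stage from the previous one. The stage count, the iterations per stage, and the function names are assumptions.

```python
import numpy as np

def soft_threshold(z, t):
    """Proximal operator of t*||.||_1: shrink each entry toward zero by t."""
    return np.sign(z) * np.maximum(np.abs(z) - t, 0.0)

def ista_continuation(A, b, lam, n_stages=5, iters_per_stage=500):
    """ISTA for 0.5*||Ax - b||^2 + lam*||x||_1 with continuation on lam (lam > 0).

    Illustrative sketch: solves a decreasing sequence of penalties, warm-starting
    each stage from the previous solution.
    """
    A = np.asarray(A, dtype=float)
    b = np.asarray(b, dtype=float)
    L = np.linalg.norm(A, 2) ** 2       # Lipschitz constant of the gradient of the LS term
    lam_max = np.max(np.abs(A.T @ b))   # for lam >= lam_max the minimizer is x = 0
    x = np.zeros(A.shape[1])
    for lam_k in np.geomspace(max(lam_max, lam), lam, n_stages):
        for _ in range(iters_per_stage):
            grad = A.T @ (A @ x - b)
            x = soft_threshold(x - grad / L, lam_k / L)  # proximal gradient step
    return x

x = ista_continuation(np.diag([2.0, 1.0, 0.5]), [3.0, 0.5, -4.0], lam=1.0)
```

Accelerated variants such as FISTA add a momentum step to the same iteration and are commonly used in practice.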