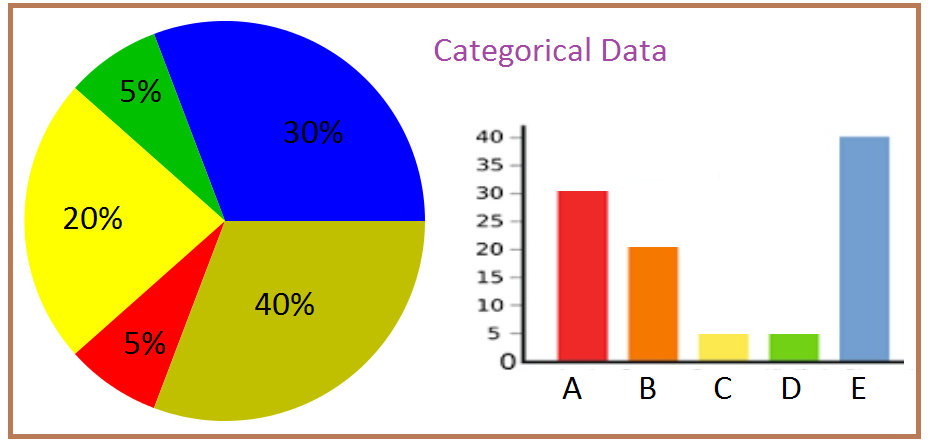

Measurement agreement for categorical data

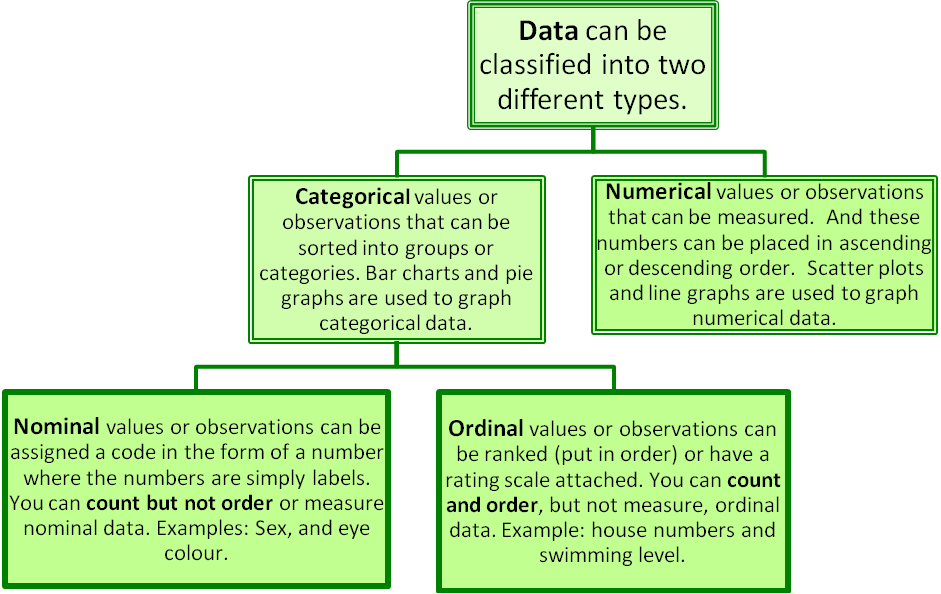

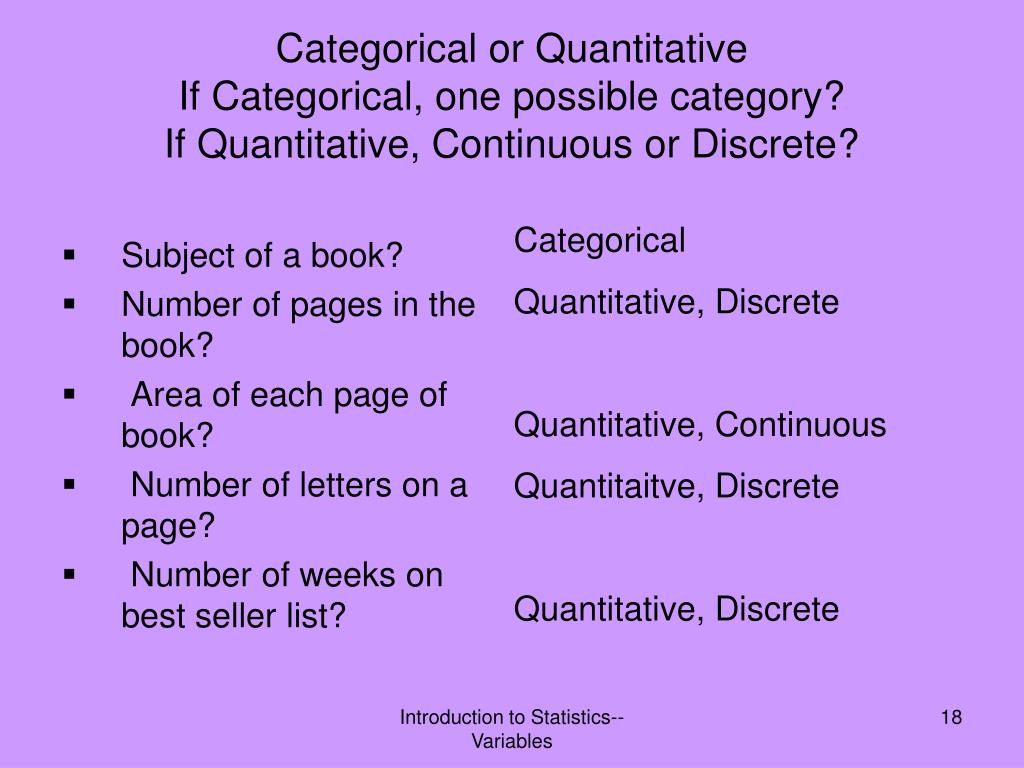

A necessary precondition for aggregation is agreement among the individuals who form the group with regard to what is being aggregated.

There are four levels of measurement: nominal, ordinal, interval, and ratio. Nominal: the data can only be categorized.

The Measurement of Observer Agreement for Categorical Data

J. R. Landis and G. G. Koch's paper, published in Biometrics on 1 March 1977, presents a general statistical methodology for the analysis of multivariate categorical data arising from observer reliability studies. The procedure essentially involves the construction of functions of the observed proportions which are directed at the extent to which the observers agree among themselves, together with the construction of associated test statistics.

This entry reviews some well-known indices of agreement, the conceptual and statistical issues related to their estimation, and their interpretation. Such analysis looks at pairs of measurements, for example pairs in which both measurements are categorical. A test like the χ² test cannot be used for this purpose, because it addresses the distribution across categories rather than agreement.

The dispersion of an ordinal categorical variable can be measured by the index proposed in Leti (1983, pp. 290–297).

As with the comparison of continuous data, categorical data require a measure of agreement rather than a measure of association. Statisticians generally consider kappa the most popular measure of agreement for categorical data, and it is one of several coefficients used to express agreement among categorical variables. Weighted kappa can be calculated when the categories are ordered.

Interval: the data can be categorized, ranked, and evenly spaced.

In the methodology of Landis and Koch, tests for interobserver bias are presented in terms of first-order marginal homogeneity, and measures of interobserver agreement are developed as generalized kappa-type statistics.

Measuring Agreement, More Complicated Than It Seems

A weighted concordance correlation coefficient (CCC) was proposed by Chinchilli et al. (1996) for repeated measurement designs.

Delta: a new measure of agreement between two raters

Cohen's kappa (κ) is generally thought to be a more robust measure than a simple percent agreement calculation, because κ takes into account the possibility of agreement occurring by chance.
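
To make the chance correction concrete, the following minimal sketch computes both percent agreement and Cohen's kappa for two raters. The function names and the ratings are hypothetical and chosen only for illustration; the data are deliberately lopsided so that raw agreement looks high while kappa does not.

```python
from collections import Counter


def percent_agreement(r1, r2):
    """Proportion of subjects on which both raters give the same label."""
    return sum(a == b for a, b in zip(r1, r2)) / len(r1)


def cohen_kappa(r1, r2):
    """Cohen's kappa: (p_o - p_e) / (1 - p_e).

    p_o is the observed agreement; p_e is the agreement expected if the
    two raters were independent, computed from each rater's marginal counts.
    Undefined (division by zero) when p_e equals 1.
    """
    n = len(r1)
    p_o = percent_agreement(r1, r2)
    c1, c2 = Counter(r1), Counter(r2)
    p_e = sum(c1[k] * c2[k] for k in c1.keys() | c2.keys()) / n**2
    return (p_o - p_e) / (1 - p_e)


# Hypothetical ratings of 10 subjects; almost everything is rated "pos".
rater_a = ["pos"] * 8 + ["neg", "pos"]
rater_b = ["pos"] * 8 + ["pos", "neg"]

print(round(percent_agreement(rater_a, rater_b), 3))  # 0.8
print(round(cohen_kappa(rater_a, rater_b), 3))        # -0.111
```

Here the raters agree on 8 of 10 subjects, yet the agreement expected by chance is 0.82, so kappa is slightly negative.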

Measuring Observer Agreement on Categorical Data

The extent to which repeated measurements of the same subjects give the same result is referred to as agreement, concordance, or reproducibility between measurements. Several sampling designs for assessing agreement between two binary classifications on each of n subjects lead to data arrayed in a four-fold table, where the ANOVA estimator for the two-way random design approximates Cohen's (1960, Educational and Psychological Measurement 20, 37-46) kappa statistic.

“The Measurement of Observer Agreement for Categorical Data” is a paper by J. R. Landis and Gary G. Koch, published in 1977. Weighted kappa later became an important measure of agreement.

If you're grouping things by anything other than numerical values, you're grouping them by categories. Ordinal: the data can be categorized and ranked. Ratio: the data can be categorized, ranked, and evenly spaced, and have a natural zero.

Method agreement analysis: A review of correct methodology

There is an impressive published literature on the statistical issues of assessing agreement among two or more raters. For categorical data, the most typical summary measure is the number or percentage of cases in each category.

In the methodology of J. R. Landis and Gary G. Koch, the procedures are illustrated with a clinical diagnosis example from the epidemiological literature.

However, kappa performs poorly when the marginal distributions are very asymmetric, it is not easy to interpret, and its definition is based on the hypothesis of independence of the responses.

Lin introduced the concordance correlation coefficient (CCC) for measuring agreement, which is more appropriate when the data are measured on a continuous scale. A generalized CCC for continuous and categorical data has also been introduced.
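
As a rough illustration of how the CCC differs from an ordinary correlation, the sketch below computes Lin's coefficient as 2·s_xy / (s_x² + s_y² + (mean_x − mean_y)²). It assumes moments computed by dividing by n; the function name `lin_ccc` and the data are hypothetical.

```python
def lin_ccc(x, y):
    """Lin's concordance correlation coefficient for paired measurements.

    Uses variances and covariance divided by n (an assumption of this
    sketch). Unlike Pearson's r, the denominator penalizes any shift
    between the two means.
    """
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    var_x = sum((a - mean_x) ** 2 for a in x) / n
    var_y = sum((b - mean_y) ** 2 for b in y) / n
    cov_xy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y)) / n
    return 2 * cov_xy / (var_x + var_y + (mean_x - mean_y) ** 2)


# Hypothetical paired readings: method_2 always reads one unit higher.
method_1 = [1.0, 2.0, 3.0, 4.0, 5.0]
method_2 = [2.0, 3.0, 4.0, 5.0, 6.0]

print(lin_ccc(method_1, method_1))  # identical readings
print(lin_ccc(method_1, method_2))  # perfectly correlated, but shifted
```

The second pair is perfectly correlated, but the constant offset lowers the CCC to 0.8, which is the sense in which the CCC measures agreement rather than association.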

MEASUREMENT OF AGREEMENT FOR CATEGORICAL DATA

For example, kappa can be used to compare how different raters classify the same subjects. Tools such as bar graphs and Venn diagrams help in analyzing categorical data. The phi coefficient is a measure of association directly related to the chi-squared significance test.
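
The link between phi and the chi-squared statistic can be checked numerically. This sketch, with a hypothetical function name and hypothetical counts, computes phi for a 2 × 2 table and the Pearson chi-squared statistic (without continuity correction), for which χ² = n·φ².

```python
import math


def phi_and_chi2(table):
    """Return (phi, chi2) for a 2 x 2 table of counts [[a, b], [c, d]]."""
    (a, b), (c, d) = table
    n = a + b + c + d
    row_totals = [a + b, c + d]
    col_totals = [a + c, b + d]
    phi = (a * d - b * c) / math.sqrt(
        row_totals[0] * row_totals[1] * col_totals[0] * col_totals[1]
    )
    # Pearson chi-squared from observed and expected counts.
    chi2 = 0.0
    for i, row in enumerate(table):
        for j, observed in enumerate(row):
            expected = row_totals[i] * col_totals[j] / n
            chi2 += (observed - expected) ** 2 / expected
    return phi, chi2


# Hypothetical counts: rows are rater A, columns are rater B.
agree_table = [[40, 10], [5, 45]]      # mostly on the diagonal
disagree_table = [[10, 40], [45, 5]]   # mostly off the diagonal

for t in (agree_table, disagree_table):
    phi, chi2 = phi_and_chi2(t)
    n = sum(map(sum, t))
    print(round(phi, 3), round(chi2, 3), round(n * phi**2, 3))
```

Both tables give the same chi-squared value, although the raters mostly agree in the first and mostly disagree in the second. This is why a measure of association is not a substitute for a measure of agreement.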

Analyzing categorical data

Statistical measures are described that are used in diagnostic imaging for expressing observer agreement in regard to categorical data.

In statistics, a categorical variable (also called a qualitative variable) is a variable that can take on one of a limited, and usually fixed, number of possible values.

Interrater Agreement Measures for Nominal and Ordinal Data

For simplicity, in this review we illustrate the statistical approach to measuring agreement by considering only one of these measures for a given situation.

The method of Landis and Koch requires a two-way random-effects analysis of variance of the \(Z_{ij}\).

In StatsDirect, enter the observed frequencies as directed on the screen and select the default method for weighting.
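
The text does not say which weighting scheme the default is, so the sketch below assumes linear disagreement weights |i − j| / (k − 1), with `power=2` giving quadratic weights. It is a minimal illustration of weighted kappa for a k × k table of counts, not a reproduction of any particular package; the function name and the 3 × 3 counts are hypothetical.

```python
def weighted_kappa(table, power=1):
    """Weighted kappa for a square table of counts.

    Uses disagreement weights w_ij = (|i - j| / (k - 1)) ** power and
    returns 1 - (weighted observed disagreement / weighted expected
    disagreement under independence).
    """
    k = len(table)
    n = sum(sum(row) for row in table)
    row_totals = [sum(row) for row in table]
    col_totals = [sum(table[i][j] for i in range(k)) for j in range(k)]
    observed = 0.0
    expected = 0.0
    for i in range(k):
        for j in range(k):
            w = (abs(i - j) / (k - 1)) ** power
            observed += w * table[i][j] / n
            expected += w * row_totals[i] * col_totals[j] / n**2
    return 1 - observed / expected


# Hypothetical 3 x 3 table for an ordinal scale (mild, moderate, severe).
ratings = [
    [20, 5, 1],
    [4, 15, 6],
    [1, 3, 25],
]
print(weighted_kappa(ratings))            # linear weights
print(weighted_kappa(ratings, power=2))   # quadratic weights
```

With only two categories every disagreement has weight 1, so the weighted and unweighted statistics coincide. The weights matter only when near misses on an ordered scale should count less than distant ones.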

A related paper is concerned with the analysis of multivariate categorical data which are obtained from repeated measurement experiments; appropriate test statistics are developed through the application of weighted least squares methods.

Measuring Observer Agreement on Categorical Data is a doctoral thesis by Andrea Soo (Graduate Program in Community Health Sciences, Calgary, Alberta, April 2015).

In one worked example, five categories of result were recorded using each method. To analyse these data in StatsDirect, select Categorical from the Agreement section of the Analysis menu.

Cohen's kappa statistic, \(\kappa\), is a measure of agreement between categorical variables \(X\) and \(Y\). Kappa is particularly suited to 2 × 2 tables; it measures agreement beyond chance. Cohen's kappa statistic is an estimate of the population coefficient

\[
\kappa = \frac{\Pr[X = Y] - \Pr[X = Y \mid X \text{ and } Y \text{ independent}]}{1 - \Pr[X = Y \mid X \text{ and } Y \text{ independent}]}
\]

Generally, \(0 \le \kappa \le 1\), although negative values do occur on occasion.
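
As a worked illustration of the kappa formula, consider a hypothetical 2 × 2 table for 100 subjects: both raters say "positive" for 40, both say "negative" for 45, rater \(X\) alone says "positive" for 10, and rater \(Y\) alone says "positive" for 5. The counts are invented for this example, and \(p_o\) and \(p_e\) are shorthand for the sample estimates of \(\Pr[X = Y]\) and \(\Pr[X = Y \mid X \text{ and } Y \text{ independent}]\).

\[
p_o = \frac{40 + 45}{100} = 0.85, \qquad
p_e = \frac{50}{100}\cdot\frac{45}{100} + \frac{50}{100}\cdot\frac{55}{100} = 0.50
\]

\[
\hat{\kappa} = \frac{p_o - p_e}{1 - p_e} = \frac{0.85 - 0.50}{1 - 0.50} = 0.70
\]

The raters agree on 85% of subjects, but half of that agreement would be expected by chance given their marginal totals, so the chance-corrected agreement is 0.70.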

One of the main questions in multilevel data analysis is whether it is appropriate to aggregate data and to use the aggregated measures to make inferences about higher level units. An important principle of measurement theory is that one can convert from one scale to another only if they are of the same type and measure the same attribute.

The Landis and Koch paper appeared in Biometrics, 1977 Mar; 33(1): 159-74. Another communication proposes a measure of agreement when each of several measuring devices yields a categorization for each of a sample of experimental units.

In diagnostic imaging, agreement measures are used to characterize the reliability of imaging methods and the reproducibility of disease classifications and, occasionally and with great care, as a surrogate for accuracy.

In StatsDirect, choose the default 95% confidence interval.

Cohen's kappa is ideally suited for nominal (non-ordinal) categories. When assessing the concordance between two methods of measurement of ordinal categorical data, summary measures such as Cohen's (1960) kappa or Bangdiwala's (1985) B-statistic are used.
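
For comparison with kappa, here is a minimal sketch of Bangdiwala's B-statistic as it is commonly defined: the sum of squared diagonal counts divided by the sum of the products of the matching row and column totals. The function name and the table are hypothetical, and the source gives no formula, so treat this definition as an assumption of the example.

```python
def bangdiwala_b(table):
    """Bangdiwala's B for a square table of counts.

    B = sum_i n_ii**2 / sum_i (row_total_i * col_total_i).
    It equals 1 when all observations lie on the diagonal.
    """
    k = len(table)
    row_totals = [sum(row) for row in table]
    col_totals = [sum(table[i][j] for i in range(k)) for j in range(k)]
    numerator = sum(table[i][i] ** 2 for i in range(k))
    denominator = sum(row_totals[i] * col_totals[i] for i in range(k))
    return numerator / denominator


# Hypothetical 2 x 2 counts: rows are method 1, columns are method 2.
counts = [[40, 10], [5, 45]]
print(bangdiwala_b(counts))  # 3625 / 5000 = 0.725
```

For the same hypothetical counts, Cohen's kappa is 0.70, so the two summaries are on similar but not identical scales.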