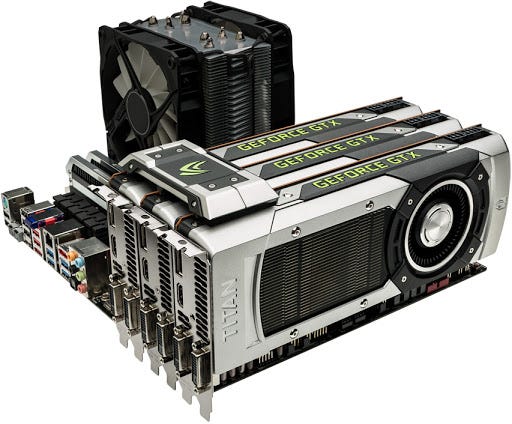

Multiple GPU Servers

Features. The AIME A8004 is a multi-GPU server optimized for maximum deep learning training and inference performance and for the highest demands in HPC computing, with dual AMD EPYC Genoa CPUs and PCIe 5.0 … Providers also rent high-quality, top-performance bare-metal GPU servers for deep learning, and NVIDIA DGX Systems are NVIDIA's latest generation of infrastructure for enterprise AI. When setting up a multi-GPU server, select two of the highest-end server CPUs to pair with eight A100 GPUs so that the host can keep up with them. Some systems come with AI frameworks preinstalled: TensorFlow, PyTorch, Keras and MXNet.

LLaMA (short for Large Language Model Meta AI) is a collection of pretrained, state-of-the-art large language models developed by Meta AI. To run multi-GPU serving with vLLM, pass the --tensor-parallel-size argument when starting the server; for example, to run the API server on 4 GPUs: `python -m vllm.…`
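As a sketch of the tensor-parallel launch described above, the helper below only assembles the argument list for a vLLM API server and checks that the requested tensor-parallel size is usable; it does not start anything. The module path `vllm.entrypoints.api_server` (used in older vLLM documentation), the helper name `build_vllm_command`, and the optional attention-head check are assumptions for illustration, not vLLM's own validation logic; check the documentation for your installed version.

```python
import shlex
from typing import List, Optional


def build_vllm_command(model: str, tensor_parallel_size: int, available_gpus: int,
                       num_attention_heads: Optional[int] = None) -> List[str]:
    """Assemble (but do not run) a vLLM API-server command line.

    Assumption: the server module is `vllm.entrypoints.api_server`, as in
    older vLLM docs; newer releases may use a different entry point.
    """
    if not 1 <= tensor_parallel_size <= available_gpus:
        raise ValueError(
            f"tensor_parallel_size={tensor_parallel_size} needs 1..{available_gpus} GPUs")
    # Tensor parallelism splits attention heads across GPUs, so the head
    # count should divide evenly (optional sanity check).
    if num_attention_heads is not None and num_attention_heads % tensor_parallel_size:
        raise ValueError("num_attention_heads must be divisible by tensor_parallel_size")
    return [
        "python", "-m", "vllm.entrypoints.api_server",
        "--model", model,
        "--tensor-parallel-size", str(tensor_parallel_size),
    ]


cmd = build_vllm_command("facebook/opt-13b", tensor_parallel_size=4, available_gpus=8)
print(shlex.join(cmd))
```

On the GPU host you could pass `cmd` to `subprocess.run(cmd, check=True)`. Keeping command construction separate from execution makes the GPU-count check testable on a machine without GPUs.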

GPU server rental for deep learning

TensorFlow Multiple GPU: 5 Strategies and 2 Quick Tutorials

This section gives an overview of how to set up the workstation and get it running with the required software. A single GPU can run only a small number of applications simultaneously.

Multi-GPU servers are designed to accommodate multiple GPUs within a single server chassis, and they offer enhanced performance by combining those GPUs. In CARLA, multi-GPU support means that the user can start several servers (called secondary servers) that do render work for the main server (called the primary server), each using a dedicated GPU from the system. Moreover, multiple GPU servers can be registered in one gpuview dashboard, so that all stats are aggregated and accessible from one place.
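gpuview's internal mechanism is not described here; as a hedged illustration of the same idea, the sketch below merges per-GPU stats from several servers into one summary. It assumes each server's stats were captured as the text output of `nvidia-smi --query-gpu=index,name,utilization.gpu,memory.used,memory.total --format=csv,noheader,nounits`. The hostnames and sample values are hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class GpuStat:
    host: str
    index: int
    name: str
    util_pct: int
    mem_used_mib: int
    mem_total_mib: int


def parse_nvidia_smi_csv(host: str, text: str) -> List[GpuStat]:
    """Parse `nvidia-smi --query-gpu=index,name,utilization.gpu,memory.used,
    memory.total --format=csv,noheader,nounits` output (one GPU per line).

    Assumes GPU names contain no commas.
    """
    stats = []
    for line in text.strip().splitlines():
        idx, name, util, used, total = (field.strip() for field in line.split(","))
        stats.append(GpuStat(host, int(idx), name, int(util), int(used), int(total)))
    return stats


def aggregate(per_host: Dict[str, str]) -> dict:
    """Merge stats from every registered server into one summary."""
    stats = [s for host, text in per_host.items() for s in parse_nvidia_smi_csv(host, text)]
    if not stats:
        raise ValueError("no GPU stats to aggregate")
    return {
        "gpus": len(stats),
        "mean_util_pct": sum(s.util_pct for s in stats) / len(stats),
        "mem_used_fraction": sum(s.mem_used_mib for s in stats)
                             / sum(s.mem_total_mib for s in stats),
    }


# Hypothetical output captured from two servers.
samples = {
    "server-a": "0, GPU-X, 80, 20000, 40000\n1, GPU-X, 40, 10000, 40000",
    "server-b": "0, GPU-Y, 0, 0, 80000",
}
summary = aggregate(samples)
print(summary)
```

How each server ships its `nvidia-smi` output to the collector (SSH, a small HTTP agent, etc.) is left open here; that transport is an assumption outside this sketch.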

Multi-GPU Servers

Optimizing a GPU server's performance includes tuning your GPU settings, ensuring proper cooling, and keeping your drivers up to date.

Elastic GPU Service

To measure this isolation level, we first ran the workload on a single instance to get a reference time, then launched the same workload on multiple MIG instances simultaneously. Teams that want to develop and run more GPU applications or increase the … Scalable, GPU-dense parallel computing servers are built for high performance. The multi-GPU range of servers consists of highly dense, scalable solutions designed to maximize the performance that modern GPUs provide.
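The isolation measurement described above boils down to comparing each concurrent run against the single-instance reference time. A minimal sketch, assuming wall-clock times in seconds; the timings in the usage lines are made up for illustration and are not results from the original experiment.

```python
from statistics import mean
from typing import List


def isolation_report(reference_s: float, concurrent_s: List[float]) -> dict:
    """Compare concurrent MIG runs against a single-instance reference time.

    A slowdown of 1.0 means perfect isolation: the neighbouring instances
    did not slow this workload down at all.
    """
    if reference_s <= 0 or not concurrent_s:
        raise ValueError("need a positive reference time and at least one run")
    slowdowns = [t / reference_s for t in concurrent_s]
    return {
        "instances": len(concurrent_s),
        "mean_slowdown": mean(slowdowns),
        "worst_slowdown": max(slowdowns),
    }


# Hypothetical timings: one reference run, then 4 simultaneous instances.
report = isolation_report(100.0, [101.0, 102.0, 100.0, 105.0])
print(report)
```

Reporting the worst case alongside the mean matters because one noisy neighbour can hide inside a good average.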

Multi-vGPU

The drawback is that this solution is much more expensive. This story provides a guide on how to build a multi-GPU system for deep learning and will hopefully save you some research time and experimentation.

High End Multi GPU Server Rental

Install the packages needed to run your AI/ML code. With server GPUs you can have multiple users per GPU and do it all over the network, without KVM extenders. For lighter workloads, multiple virtual machines (VMs) can share GPU resources with NVIDIA virtual GPU software. Unlike traditional servers that rely primarily on CPUs (central processing units), GPU servers are equipped with … (GPU: up to 4 double …).

Use a minimum of four PCIe x16 links between the two CPUs and the eight A100 … To scale vLLM beyond a single machine, start a Ray runtime via the CLI before running vLLM; on the head node, run `ray start …`.

TensorFlow distribution strategies include the Parameter Server Strategy and the Multi Worker Mirrored Strategy. One cloud option is a single RTX 6000 GPU with six vCPUs, 46 GiB RAM and 658 GiB of temporary storage at just $1.… In CARLA, the primary server distributes the sensors created by the user to the different secondary servers. Application areas include artificial intelligence (AI) and scientific … These servers can be accessed from any location in the world, and you'll also want to consider load balancing if running multiple …
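The text recommends load balancing when running multiple workloads on one server. One simple policy, sketched here as an assumption rather than what any particular product does, is to send each new job to the GPU with the fewest active jobs, breaking ties toward the lowest GPU index. The class and job names are hypothetical.

```python
from typing import Dict, List


class LeastLoadedBalancer:
    """Send each job to the GPU with the fewest active jobs (lowest index on ties)."""

    def __init__(self, num_gpus: int):
        self.active: List[int] = [0] * num_gpus
        self.assignments: Dict[str, int] = {}

    def submit(self, job: str) -> int:
        # min() returns the first minimal index, which breaks ties toward GPU 0.
        gpu = min(range(len(self.active)), key=lambda g: self.active[g])
        self.active[gpu] += 1
        self.assignments[job] = gpu
        return gpu

    def finish(self, job: str) -> None:
        gpu = self.assignments.pop(job)
        self.active[gpu] -= 1


lb = LeastLoadedBalancer(num_gpus=2)
placed = [lb.submit(job) for job in ("job-a", "job-b", "job-c")]
lb.finish("job-b")            # GPU 1 becomes idle
next_gpu = lb.submit("job-d")
print(placed, next_gpu, lb.active)
```

Each chosen index could then be exported as `CUDA_VISIBLE_DEVICES` for that job's process, so the job only sees its assigned GPU. Counting active jobs ignores how heavy each job is; weighting by expected memory or runtime is a natural refinement.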

How to run 30B/65B LLaMa-Chat on Multi-GPU Servers

With Lambda GPU Cloud, you can even save 50% on compute and reduce cloud TCO, with no multi-year commitments. Performance of both RTX GPUs is similar; however, the RTX 8000 is the better choice for applications that require more memory.
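To make the memory trade-off concrete: the Quadro RTX 6000 has 24 GB and the Quadro RTX 8000 has 48 GB of GDDR6. The helper below picks the smallest card whose memory covers a workload's estimated requirement. The 10% headroom and the workload sizes are assumptions for illustration.

```python
from typing import Optional

# Memory sizes of the two cards compared above (GDDR6, in GB).
GPU_MEMORY_GB = {
    "Quadro RTX 6000": 24,
    "Quadro RTX 8000": 48,
}


def pick_gpu(required_gb: float, headroom: float = 0.10) -> Optional[str]:
    """Return the smallest GPU whose memory covers `required_gb` plus headroom."""
    needed = required_gb * (1 + headroom)
    for name, mem_gb in sorted(GPU_MEMORY_GB.items(), key=lambda kv: kv[1]):
        if mem_gb >= needed:
            return name
    return None  # does not fit on one card; split across GPUs instead


for need in (16, 30, 50):
    print(need, "GB ->", pick_gpu(need))
```

Since performance is similar, the cheaper 24 GB card is preferred whenever the workload fits; the 48 GB card only wins once memory becomes the constraint.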

GPU Servers for AI: Everything You Need to Know

Parameter server training is a common data-parallel method for scaling up model training on multiple machines. Scalar Server: a PCIe server with up to 8x customizable NVIDIA Tensor Core GPUs and dual Intel Xeon or AMD EPYC processors.

Multi GPU: An In-Depth Look

Running multiple NVIDIA RTX A6000 video cards provides excellent scaling in GPU-based rendering engines. This is no surprise, as the same was true of the RTX 30 Series and other GeForce and Quadro video cards, but it is still impressive to see what these … GPU-accelerated servers power all aspects of generative AI, AI and ML. Workstation Specialists GPU compute servers can be configured with multiple high-performance GPUs, such as the Professional …
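Multi-GPU rendering scaling like this is usually summarized as speedup (single-GPU time divided by N-GPU time) and parallel efficiency (speedup divided by N). A small sketch for 1, 2, 4 and 8 GPUs; the render times are invented placeholders, not the benchmark's figures.

```python
from typing import Dict, Tuple


def scaling_table(times_s: Dict[int, float]) -> Dict[int, Tuple[float, float]]:
    """Map GPU count -> (speedup, parallel efficiency), relative to 1 GPU."""
    if 1 not in times_s:
        raise ValueError("need a single-GPU baseline time")
    base = times_s[1]
    return {n: (base / t, base / t / n) for n, t in sorted(times_s.items())}


# Hypothetical render times in seconds, not measured results.
table = scaling_table({1: 400.0, 2: 200.0, 4: 104.0, 8: 56.0})
for gpus, (speedup, efficiency) in table.items():
    print(f"{gpus} GPU: {speedup:.2f}x speedup, {efficiency:.0%} efficiency")
```

"Excellent scaling" in this vocabulary means efficiency stays close to 100% as N grows.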

GPU dedicated servers can be a great option for running Android emulators, especially if you run multiple instances at once or need high performance and customization options. Cloud-based GPU servers have gained popularity due to their … TensorFlow also offers a Central Storage Strategy. One direct GPU-to-GPU interconnect provides …8 TB/s of bidirectional bandwidth and scales multi-GPU input and output (IO) within a server.
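Interconnect bandwidth matters because data-parallel training exchanges gradients every step. A common back-of-the-envelope model, brought in here as an assumption rather than something the text specifies, is ring all-reduce: each of N GPUs sends 2(N-1)/N times the gradient size over its link. The bandwidth and gradient size below are hypothetical inputs, and latency and overlap with compute are ignored.

```python
def ring_allreduce_seconds(grad_bytes: float, num_gpus: int, bw_bytes_per_s: float) -> float:
    """Bandwidth-only estimate of one ring all-reduce.

    Each GPU transfers 2 * (N - 1) / N * grad_bytes over its link
    (reduce-scatter followed by all-gather); latency is ignored.
    `bw_bytes_per_s` is the per-GPU, per-direction link bandwidth.
    """
    if num_gpus < 2:
        return 0.0
    traffic = 2 * (num_gpus - 1) / num_gpus * grad_bytes
    return traffic / bw_bytes_per_s


# Hypothetical: 1e9 fp16 gradients (2 GB) on 8 GPUs at 100 GB/s per direction.
t = ring_allreduce_seconds(2e9, 8, 100e9)
print(f"{t * 1000:.1f} ms per all-reduce")
```

Because the per-GPU traffic approaches 2x the gradient size regardless of N, link bandwidth rather than GPU count dominates this cost.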

How to Build a Multi-GPU System for Deep Learning in 2023

A wide selection of GPU options is available. A GPU server, also known as a graphics processing unit server, is a specialized type of server designed to leverage the computational power of GPUs for complex graphical calculations and rendering. In this blog, we discussed the performance of the Dell EMC DSS 8440 GPU server with NVIDIA RTX GPUs for HPC and AI workloads. For the …5gb MIG instance size: 1, 4 or 7 workloads running in parallel. Step 2: Allocate Space, Power and Cooling.
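Allocating power and cooling starts with a power budget. A minimal sketch, assuming you sum the rated TDPs of the GPUs and CPUs, add a hypothetical allowance for the rest of the system, and divide by the power-supply efficiency. All wattages are placeholders; use your vendors' specifications.

```python
def wall_power_watts(gpu_tdp_w: float, num_gpus: int, cpu_tdp_w: float, num_cpus: int,
                     other_w: float = 300.0, psu_efficiency: float = 0.92) -> float:
    """Estimate power drawn from the wall at full load.

    `other_w` (drives, fans, memory, NICs) and `psu_efficiency` are assumptions.
    """
    dc_load = gpu_tdp_w * num_gpus + cpu_tdp_w * num_cpus + other_w
    return dc_load / psu_efficiency


# Placeholder figures for an 8-GPU, 2-CPU server.
watts = wall_power_watts(gpu_tdp_w=300, num_gpus=8, cpu_tdp_w=280, num_cpus=2)
print(f"{watts:.0f} W at the wall, about {watts * 3.412:.0f} BTU/h of heat")
```

Nearly all electrical power ends up as heat, so the same figure (1 W is about 3.412 BTU/h) sizes the cooling.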

GPU Servers For AI, Deep / Machine Learning & HPC

High-density servers: compute, storage and networking are possible in high-density, multi-node servers at lower TCO and with greater efficiency. Either way, you must install your operating system, GPU drivers and necessary software. Multi-GPU servers are specifically designed to accommodate multiple graphics cards within a single server chassis. GPU-accelerated servers also help visualize outcomes and drive business intelligence (BI) needs. Unlike the famous ChatGPT, the LLaMA models are available for download and can be run on available hardware.
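To judge whether a LLaMA model fits the hardware you have, a first estimate is parameters times bytes per parameter for the weights alone (2 bytes in fp16). The sketch below turns that into a minimum GPU count for the nominal 30B and 65B sizes. It ignores activations, the KV cache and framework overhead, so treat the result as a lower bound; the 48 GB card size is an assumed example.

```python
import math

BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "int8": 1}


def min_gpus_for_weights(params_billion: float, gpu_mem_gb: float, dtype: str = "fp16") -> int:
    """Lower bound on GPUs needed just to hold the model weights."""
    weights_gb = params_billion * BYTES_PER_PARAM[dtype]  # 1e9 params * bytes / 1e9
    return math.ceil(weights_gb / gpu_mem_gb)


for size in (30, 65):
    print(f"{size}B fp16: at least {min_gpus_for_weights(size, 48)} x 48 GB GPUs")
```

In practice, tensor-parallel serving often requires the GPU count to divide the model's attention-head count, so a lower bound of 3 may round up to 4.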

Hyperplane Server: an NVIDIA Tensor Core GPU server with up to 8x H100 GPUs, NVLink, NVSwitch and InfiniBand. Multi-GPU server rentals can also enhance your performance. The NVIDIA NVLink Switch chips connect multiple … For the more advanced and larger AI and HPC models, the model requires the aggregate GPU memory of multiple nodes to fit.

The GPU service supports up to 24 million pps, up to 64 Gbit/s of bandwidth over VPCs, and an 800G RDMA network, and it uses the SHENLONG architecture to improve server performance and reduce I/O latency. Amazon EC2 G4 instances are the industry's most cost-effective and versatile GPU instances for deploying machine learning models such as image classification, object detection and speech recognition, and for graphics-intensive applications such as remote graphics workstations, game streaming and graphics rendering.

This is the most common setup for researchers and small-scale industry workflows. Configurable GPUs include the NVIDIA A100, RTX 3090, Tesla V100, Quadro RTX 6000, NVIDIA RTX A6000 and RTX 2080 Ti. To set up a multi-GPU system, you must configure the necessary hardware components and the operating system.

MPS is a binary-compatible client-server runtime implementation of the CUDA … We only used the three instance sizes that allow exposing multiple MIG instances at the same time: 1g.…
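For reference, on an A100 40GB the MIG profiles and the maximum number of homogeneous instances of each are listed below, per NVIDIA's MIG user guide (other GPU models have different tables). The sketch shows which profiles allow multiple MIG instances at the same time. It ignores mixed-profile placements, which have their own constraints.

```python
from typing import Dict, List

# Max simultaneous instances per MIG profile on an A100 40GB GPU.
A100_40GB_MIG: Dict[str, int] = {
    "1g.5gb": 7,
    "2g.10gb": 3,
    "3g.20gb": 2,
    "4g.20gb": 1,
    "7g.40gb": 1,
}


def multi_instance_profiles(table: Dict[str, int]) -> List[str]:
    """Profiles that can expose more than one instance at the same time."""
    return [name for name, max_count in table.items() if max_count > 1]


def fits(profile: str, workloads: int, table: Dict[str, int] = A100_40GB_MIG) -> bool:
    """True if `workloads` instances of `profile` can run in parallel on one GPU."""
    return workloads <= table[profile]


print(multi_instance_profiles(A100_40GB_MIG))
print(fits("1g.5gb", 7), fits("2g.10gb", 4))
```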

Simplify GPU Sharing in Multi-GPU Environments, Part 1

To accomplish this with MPS, launch … A thumbnail view of GPUs across … For double-precision workloads, or workloads that require high … Pinning a process to specific cores can be accomplished with the `taskset` utility, which binds a running program to a set of cores or launches a new program on them.
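Pinning one MPS control daemon per GPU to its own core with `taskset` could look like the sketch below, which only builds the shell commands rather than running them. It relies on the MPS server inheriting the daemon's CPU affinity, as child processes normally do on Linux. The per-GPU `CUDA_MPS_PIPE_DIRECTORY`/`CUDA_MPS_LOG_DIRECTORY` paths under `/tmp` and the core numbering are assumptions; follow NVIDIA's MPS documentation for your system.

```python
import shlex
from typing import List


def mps_daemon_commands(num_gpus: int, first_core: int = 0) -> List[str]:
    """One pinned `nvidia-cuda-mps-control -d` launch per GPU (commands only)."""
    cmds = []
    for gpu in range(num_gpus):
        core = first_core + gpu  # a distinct core per MPS daemon/server
        env = {
            "CUDA_VISIBLE_DEVICES": str(gpu),
            "CUDA_MPS_PIPE_DIRECTORY": f"/tmp/mps-pipe-{gpu}",  # assumed path
            "CUDA_MPS_LOG_DIRECTORY": f"/tmp/mps-log-{gpu}",    # assumed path
        }
        env_part = " ".join(f"{k}={shlex.quote(v)}" for k, v in env.items())
        cmds.append(f"{env_part} taskset -c {core} nvidia-cuda-mps-control -d")
    return cmds


for line in mps_daemon_commands(2):
    print(line)
```

Clients that should use a given daemon would need the same `CUDA_MPS_PIPE_DIRECTORY` in their environment, which is why each GPU gets its own directory in this sketch.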

Multi-GPU Server, Multiple GPU Cards Rental, Multi GPU Gaming

Variables are created on parameter servers and they are read and updated by workers in each step.
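The parameter-server pattern can be simulated in a few lines: the variable lives on the (simulated) parameter server, and each worker reads it, computes a gradient on its own data shard, and pushes an update. This is a single-process toy with a hypothetical least-squares objective and serialized updates, not TensorFlow's `ParameterServerStrategy` API.

```python
from typing import List, Tuple


class ParameterServer:
    """Holds the shared model variable; workers read it and push updates."""

    def __init__(self, init_w: float, lr: float):
        self.w = init_w
        self.lr = lr

    def read(self) -> float:
        return self.w

    def apply_gradient(self, grad: float) -> None:
        self.w -= self.lr * grad


def worker_gradient(w: float, shard: List[Tuple[float, float]]) -> float:
    """Mean-squared-error gradient for the toy model y = w * x on one shard."""
    return sum(2 * (w * x - y) * x for x, y in shard) / len(shard)


# Toy data following y = 3x, split into one shard per worker (2 workers).
data = [(x, 3.0 * x) for x in (1.0, 2.0, 3.0, 4.0)]
shards = [data[0::2], data[1::2]]

ps = ParameterServer(init_w=0.0, lr=0.02)
for _ in range(200):
    for shard in shards:                              # workers take turns in this toy loop
        w = ps.read()                                 # read the variable from the PS
        ps.apply_gradient(worker_gradient(w, shard))  # push the worker's update
print(f"learned w = {ps.w:.4f}")
```

Because updates are serialized, each worker sees the previous worker's update; in a real cluster, workers run concurrently and may read slightly stale values.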

Virtualization Multiplied with Enhanced Virtual GPU (vGPU)

By leveraging the performance of multiple cards, the Multi … It is suited to high-throughput scenarios in which multiple threads run in parallel to process computing tasks. Discover our range of multi-GPU server plans featuring top-tier options such as 2x RTX 4090, 3x V100, 4x A100 and …

GPU Server: Use Cases, Components, and Leading Solutions

Multi-GPU servers offer enhanced performance by harnessing the combined computational power of multiple GPUs, making them ideal for high-performance computing (HPC) and large-scale deep learning tasks. The parallel computing capabilities of the latest NVIDIA and AMD GPUs make HPC tasks easy to execute, and NVIDIA GPUs are available on demand or reserved in the cloud for AI training and inference. For setups where multiple GPUs are used with an MPS control daemon and server started per GPU, we recommend pinning each MPS server to a distinct core.

Multi-GPU Examples

Data parallelism is when we split the mini-batch of samples into multiple smaller mini-batches and run the computation for each of the smaller mini-batches in parallel.
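Data parallelism can be illustrated without any GPU: split the mini-batch into one smaller mini-batch per device, compute a gradient on each, and combine the results. Weighting each gradient by its chunk size keeps the combined gradient equal to the full-batch gradient even when the split is uneven. The 1-D linear model and the device count are hypothetical; frameworks such as PyTorch's `DataParallel` do the equivalent on real GPUs.

```python
from typing import List, Tuple

Sample = Tuple[float, float]


def split_batch(batch: List[Sample], num_devices: int) -> List[List[Sample]]:
    """Split a mini-batch into `num_devices` contiguous, nearly equal chunks."""
    k, r = divmod(len(batch), num_devices)
    chunks, start = [], 0
    for d in range(num_devices):
        end = start + k + (1 if d < r else 0)
        chunks.append(batch[start:end])
        start = end
    return chunks


def grad_mse(w: float, samples: List[Sample]) -> float:
    """Mean-squared-error gradient for the model y = w * x."""
    return sum(2 * (w * x - y) * x for x, y in samples) / len(samples)


def data_parallel_grad(w: float, batch: List[Sample], num_devices: int) -> float:
    """Per-device gradients, combined with weights proportional to chunk size."""
    chunks = [c for c in split_batch(batch, num_devices) if c]
    total = sum(len(c) for c in chunks)
    return sum(grad_mse(w, c) * len(c) for c in chunks) / total


batch = [(float(x), 2.0 * x + 1.0) for x in range(10)]
print(data_parallel_grad(0.5, batch, 3), grad_mse(0.5, batch))
```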

Applications include deep learning. This is part of a series of articles about multi-GPU.

To help select the optimal server for each workload, NVIDIA has introduced GPU-accelerated server platforms, which recommend ideal classes of servers for various training (HGX … For larger models and increasingly demanding workflows, NVIDIA Quadro® Virtual Desktop Workstation (vDWS) and NVIDIA Virtual Compute Server (vCS) software allow multiple GPUs to be assigned to a single VM. You can also put multiple systems in a cluster, so that if a server fails, users can be switched to a different server.

This is a good setup for large-scale industry workflows, e.g., training high-resolution image classification models on tens of millions of images using 20-100 GPUs. Multi-GPU workstations, GPU servers and cloud services are available for deep learning, machine learning and AI. A vLLM API server on 4 GPUs can be started with a command ending in `… api_server --model facebook/opt-13b --tensor-parallel-size 4`. You'll want to optimize your GPU dedicated server's performance.

The Multi-Process Service (MPS) takes advantage of inter-MPI-rank parallelism, increasing overall GPU utilization. Examples of models that need the aggregate GPU memory of multiple nodes include a deep learning recommendation model (DLRM) with terabytes of embedding tables and a large mixture-of-experts (MoE) natural language processing model; the HGX H100 with NVLink … Ubuntu Server installation. Step 1: Choose Hardware. Get from the command line the type of processing unit you want to use (either "gpu" or "cpu"): `device_name = sys.argv[1]`.
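The device-selection snippet can be made runnable as follows. It maps the command-line choice to TensorFlow-style device strings (`/gpu:0`, `/cpu:0`). The helper name `choose_device` and the default of `cpu` when no argument is given are assumptions. The returned string would then be used with `tf.device(...)` in the TensorFlow script.

```python
import sys
from typing import List


def choose_device(argv: List[str]) -> str:
    """Return a TensorFlow-style device string from the command line.

    Usage: python script.py gpu   (or: cpu). Defaults to cpu if omitted.
    """
    device_type = argv[1].lower() if len(argv) > 1 else "cpu"
    if device_type == "gpu":
        return "/gpu:0"
    if device_type == "cpu":
        return "/cpu:0"
    raise SystemExit(f"unknown device {device_type!r}; expected 'gpu' or 'cpu'")


if __name__ == "__main__":
    device_name = choose_device(sys.argv)
    print("Using", device_name)
```

Validating the argument up front gives a clear error instead of an IndexError when the script is run without arguments.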