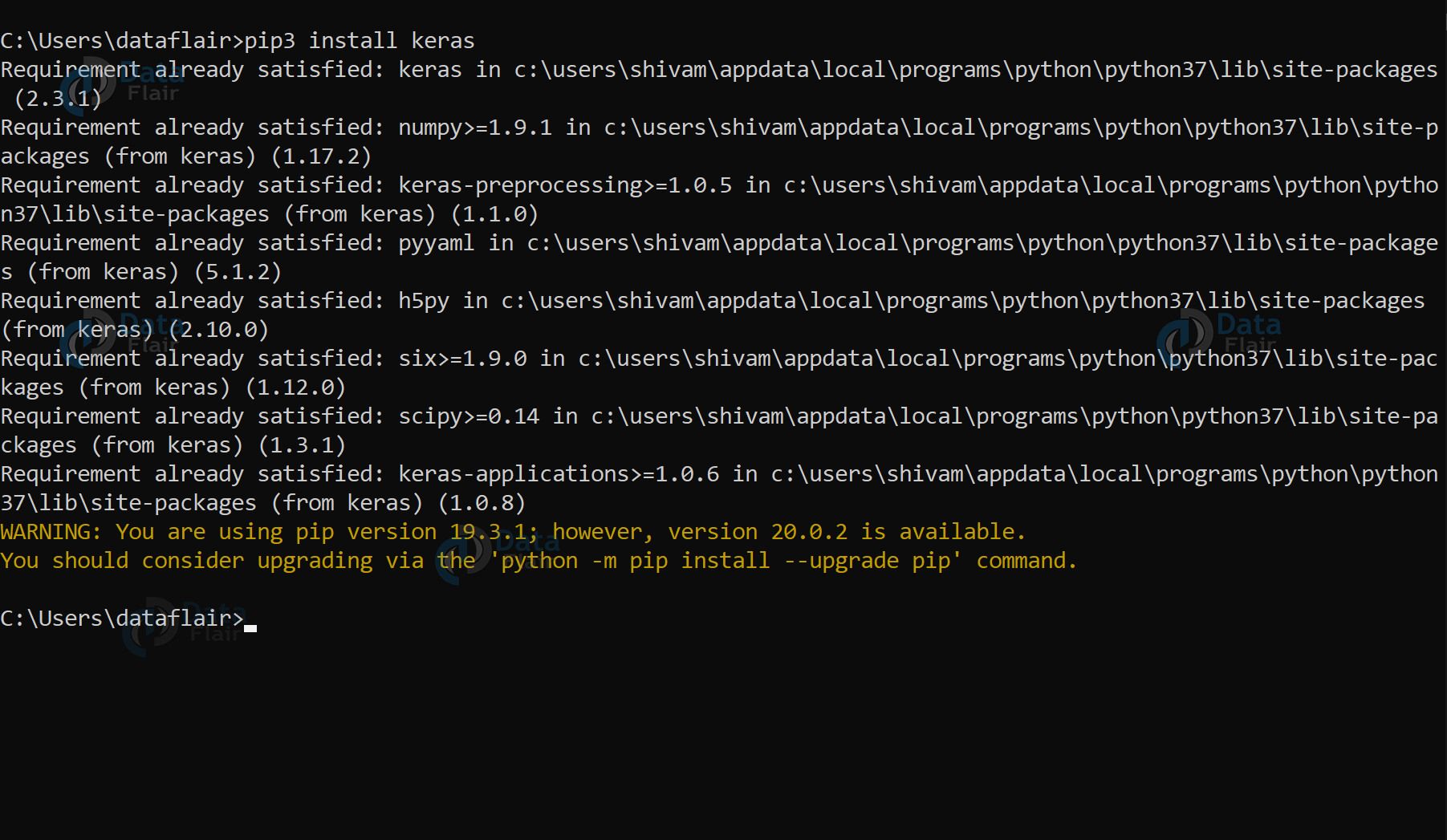

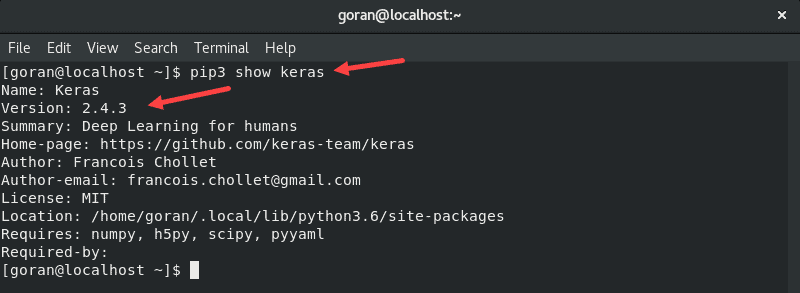

Pip install keras self attention

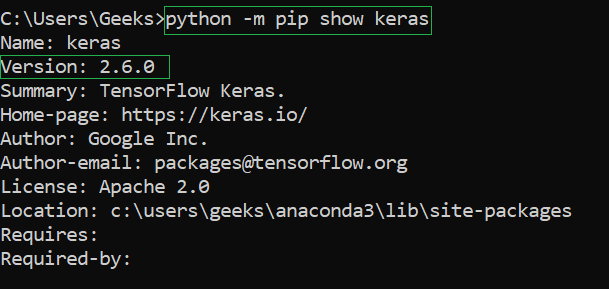

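Today I would like to write about how to save a model built with Keras as an image file such as PNG or JPG. `Attention()([query, value])` And Bahdanau-style attention: ...

Python Deep Learning 12: implementing the attention mechanism (self-attention) in Keras for Chinese text sentiment classification (with detailed comments). It is mainly used for processing sequence data, especially in natural language processing and machine ... It can also be implemented with the functional API, and Keras makes it convenient to process text and convert it into word vectors.

Install keras: `pip install keras`. Select your current project. Install keras-self-attention: `pip install keras-self-attention`. To use it, you can install it via `pip install tf_keras` and then import it via `import tf_keras as keras`. It was born from the lack of an existing function to add attention inside Keras.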

pyplot as plt from tensorflow .


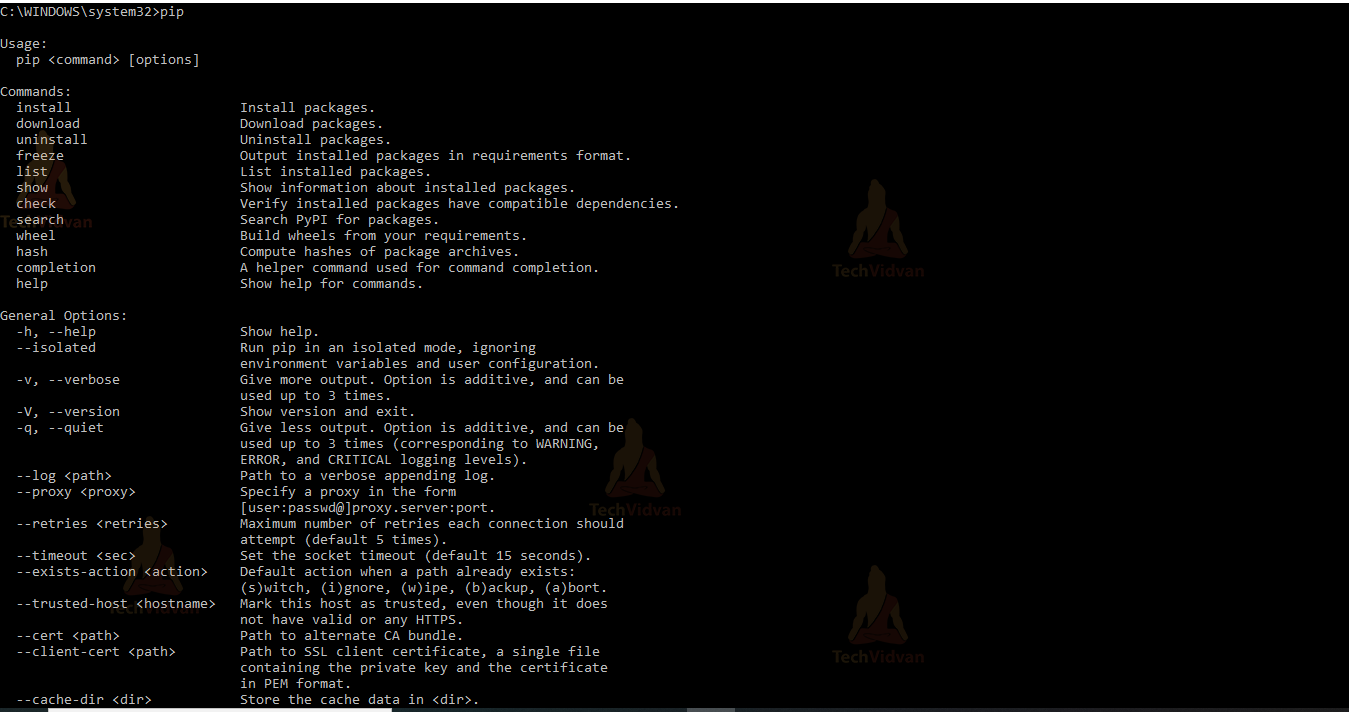

`pip install Attention`. This error is usually caused by the `keras-self-attention` module being missing. You can install it with `pip install keras-self-attention`. If you are using an Anaconda environment, you can install it with `conda install -c conda-forge keras-self-attention`. After installation, make sure the module is imported correctly in your code, for example: `from keras_self ...`

I think this may be because I need to do a pip install, so I tried this based on other answers. Some of the pip installs I found online and tried were: ...

Keras is a neural network library ... Attention mechanism for processing sequential data that considers the context for each timestamp. Keywords: keras, deeplearning, attention. Result is y = 4 + 7 = 11.

Install: `pip install keras-self-attention`. Basic usage: by default, the attention layer uses additive attention and considers the whole context while calculating the relevance. The following code creates an attention layer that follows the equations in the first section (`attention_activation` is the activation function of e_{t, t'}): `import keras`, `from keras_self_attention import SeqSelfAttention`, `model = keras. ...`

As the training progresses, the model learns the task and the attention map converges to the ground truth.

`ModuleNotFoundError: No module named 'keras-self ...`
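The error message quoted above names the module with hyphens, which is the pip distribution name, while the import statements in the same snippets use underscores (`keras_self_attention`). A minimal sketch, using only the standard library, of checking whether a name is importable before relying on it. The helper name `is_importable` is hypothetical and not part of any of the packages mentioned here.

```python
import importlib.util


def is_importable(module_name: str) -> bool:
    """Return True if `module_name` can be located on the current Python path."""
    # A hyphen is never valid in a Python module name, so a pip-style name
    # such as "keras-self-attention" can never be imported as written.
    if not all(part.isidentifier() for part in module_name.split(".")):
        return False
    try:
        return importlib.util.find_spec(module_name) is not None
    except (ImportError, ValueError):
        # Raised when a parent package is missing or has no usable spec.
        return False


if __name__ == "__main__":
    for name in ("keras_self_attention", "keras-self-attention"):
        print(name, "->", is_importable(name))
```

If the underscore form reports `False`, the package is most likely not installed in the interpreter that runs the script, which is the situation the install commands above address.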

Before moving on to installation, let's review the basic requirements of Keras. `pip install attention`. The project is popular with 655 GitHub stars! How to install keras-self-attention

[Keras] Attention mechanism (Attention)

They propose to substitute the global average pooling layer of a convnet with a Transformer layer. The layers that you can find in the tensorflow. First, go to the official Keras website and search for the plot_model library. In this article, I introduced you to an implementation of the AttentionLayer.

Hi, my Python program is throwing the following error: `ModuleNotFoundError: No module named 'keras-self-attention'`. How to remove t

GitHub

Therefore, I dug a little bit and implemented an Attention layer using the Keras backend ... Currently recommended TF version is `tensorflow==2.` ... Default imports will not specify these when they are used in READMEs. Click the small + symbol to add a new library to the project. ... keras to stay on Keras 2 after upgrading to TensorFlow 2.

Luong-style attention. The attention is expected to be the highest after the delimiters.

Import SeqSelfAttention: `from keras_self_attention import SeqSelfAttention`. An overview of the training is shown below, where the top represents the attention map and the bottom the ground truth. ... 5 or higher. You can then keep the installed packages up to date using the `conda update` command. keras-cv-attention-models · PyPI. `import os`, `import sys`, `import tensorflow as tf`, `import numpy as np`, `import pandas as pd`, `import matplotlib. ...`

keras-self-attention

The following ... The main ideas are: Shifted Patch Tokenization. The module itself is pure Python with no dependencies on modules or packages outside the standard Python distribution and keras. Attention is very important for sequential models and even other types of models. `import keras`, `from keras_self_attention import SeqSelfAttention`, `model = keras. ...`


`pip install keras` ... A wrapper layer for stacking layers horizontally. ... can be installed with. The settings are: `input_dim = num_words + 1` (input dimension; the original comment says to specify 1 because the input is a single real value), `emb_dim = 300`, `output_dim = 2` (output dimension, one per class), `num_hidden_units = 100` (number of hidden-layer units), `batch_size = 128` (mini-batch size), `epochs = 100` (number of training epochs). `layers import Dense, Dropout, Bidirectional, Masking, LSTM`, `from keras_self_attention import SeqSelfAttention` ...

Attention Mechanism Implementations for NLP via Keras. ... for image classification, and demonstrates it on the CIFAR-100 dataset. ... al, the authors propose to set up an equivalent visualization for convnets. General Usage: Basic. Especially for training or TFLite conversion. `Sequential()` model. Keras_cv_attention_models. `pip install keras-self-attention`. Before installing, make sure that the pip package manager is already installed.

Image classification with Swin Transformers

keras-attention-block is an extension for Keras to add attention. `pip install AttentionLayer`. The CyberZHG/keras-self-attention repo was created 5 years ago and the last code push was 2 years ago. Swin Transformer is a hierarchical Transformer whose ... Keras 3 is available on PyPI as `keras`.

Attention layer

Keras Self-Attention. `pip install keras-attention`. Locality Self Attention. The self-attention layer of the Transformer would produce attention maps that correspond to the most attended patches of the image for the classification ...

Install: `pip install keras-multi-head`. Usage: Duplicate Layers. To view the example in one block, check this github page. Note that TensorFlow does not ...

This chapter explains how to install Keras on your machine. By default, the attention layer uses additive attention and considers the whole context while calculating the relevance. The Attention module is a mechanism commonly used in neural networks to improve model performance. ... keras points to `tf_keras`. Released: Jun 16, 2019. However, the current implementations out there are either not up to date or not very modular.

I am working on Google Colab; this code worked for me in Feb 2021: `pip install keras-self-attention`. `pip install keras_self_attention`.

This example implements the ideas of the paper. This is how to use Luong-style attention: `query_attention = tf. ...` The layer will be duplicated if only a single layer is provided. Keras Attention Mechanism. How to visualize attention in an LSTM using keras-self-attention ...

Here's a solution that always works: open File > Settings > Project from the PyCharm menu.

Swin Transformer (**S**hifted **Win**dow Transformer) can serve as a general-purpose backbone for computer vision. A query tensor of shape `(batch_size, Tq, dim)` ...
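The Luong-style snippet above is cut off, so the following is not the original code. It is a minimal pure-Python sketch of what dot-product attention computes for one batch element: scores from query-key dot products, a softmax over the value positions, then a weighted sum of the values. It assumes no masking, no dropout, and no learned scale, and the function names are hypothetical.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def dot_product_attention(query, value, key=None):
    """Dot-product attention for a single batch element.

    query: Tq rows of length dim
    value: Tv rows of length dim
    key:   Tv rows of length dim (defaults to `value` when omitted)
    Returns (outputs, weights) with shapes Tq x dim and Tq x Tv.
    """
    if key is None:
        key = value
    dim = len(value[0])
    outputs, weights = [], []
    for q in query:
        # One score per key position: the dot product of q with that key.
        scores = [sum(qi * ki for qi, ki in zip(q, k)) for k in key]
        w = softmax(scores)
        # Weighted sum of the value rows, one output component at a time.
        out = [sum(wj * v[d] for wj, v in zip(w, value)) for d in range(dim)]
        outputs.append(out)
        weights.append(w)
    return outputs, weights


if __name__ == "__main__":
    out, w = dot_product_attention([[1.0, 0.0]], [[1.0, 0.0], [0.0, 1.0]])
    print("weights:", w)
    print("output:", out)
```

Each row of `weights` sums to 1, so every output row is a convex combination of the value rows; the query position attends most to the value it is most aligned with.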

attention · PyPI


Using self-attention in Keras

ModuleNotFoundError: No module named 'attention'. Inputs are a list with 2 or 3 elements: 1.Keras Multi-Head.

No module named ‘keras


Now type in the library to be installed, in your example keras without quotes, and click Install Package. `models import load_model`, `from keras_self_attention import SeqSelfAttention`.

The keras-self-attention PyTorch implementation, in turn, re-implements this library in the PyTorch framework. WARNING: currently NOT compatible with Keras 3. How to build an attention model with Keras? - Stack Overflow. `def lstm_att(): ...`

Self-attention-based BiGRU time series forecasting Python program - CSDN Blog

Dot-product attention layer, a.

ModuleNotFoundError: No module named 'keras

`pip install convectors`, `pip install keras-self-attention`, `pip install keras-condenser`. `import tensorflow as tf`, `from tensorflow. ...` ... 16+, you can configure your TensorFlow installation so that tf.

keras-self-attention · PyPI

`layers import Attention`. Prerequisites: you must meet the following requirements: any OS (Windows, Linux, or Mac); Python version 3. ... keras docs are two: `AdditiveAttention()` layers, ... Note that Keras 2 remains available as the `tf-keras` package. `Sequential()` model. The `layer_num` argument controls how many layers will be duplicated eventually. Self-attention in NLP - GeeksforGeeks.
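The fragments about staying on Keras 2 do not show how the TensorFlow installation is configured. A hedged sketch follows, assuming the switch is the `TF_USE_LEGACY_KERAS` environment variable (recalled from the Keras installation notes, not stated anywhere in this text) and that the `tf_keras` package is already installed. The helper name `use_legacy_keras` is hypothetical.

```python
import os
import sys


def use_legacy_keras(env=os.environ) -> bool:
    """Request that tf.keras resolve to the Keras 2 package (tf_keras).

    Assumption: TensorFlow reads TF_USE_LEGACY_KERAS at import time, so the
    variable has to be set before `import tensorflow` runs.
    Returns True if TensorFlow had not been imported yet when this was called.
    """
    env["TF_USE_LEGACY_KERAS"] = "1"
    return "tensorflow" not in sys.modules


if __name__ == "__main__":
    in_time = use_legacy_keras()
    print("set before TensorFlow import:", in_time)
    # import tensorflow as tf  # would follow here in a real script
```

Setting the variable in the shell before launching Python has the same effect and avoids any ordering concerns inside the script.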

python

Other recommended answers ... Let's import the ... Keras is highly encapsulated, and attention mechanisms nowadays are more often implemented in PyTorch. I get the following error: ... In the academic paper Augmenting convolutional networks with attention-based aggregation by Touvron et. The advantages of using conda rather than pip to install packages in your Anaconda environment(s) are that conda should determine what dependencies your requested package has, and install those too in one operation, and ...


It worked for me! Should you want tf. ... My code is as follows. Released: Mar 18, 2023. Many-to-one attention mechanism for Keras.

Visualizing the attention weights used in natural language processing (spaCy version). TL;DR: this visualizes the attention weights (the output for each input sequence, weighted by attention) of the attention used in natural language processing. It is basically the same as "Visualizing the attention weights used in natural language processing", but this version uses spaCy.

keras-self-attention. `Embedding(input_dim=10000, ...` `pip install attention` is a command that installs the Attention module into a Python environment through the pip package manager.

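This article uses a dataset of food-delivery reviews ...

Problem 1: importing the keras package fails with "No module named 'keras'". This means the package is not installed in your Python libraries. Open cmd and run `pip install keras`, then import it again in your Python environment; if no error appears, the installation succeeded. Problem 2: the keras package is installed, but importing it fails with module 'tensorflow.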