PVE Ceph DB/WAL

How can I use a partition on an SSD as a WAL/DB device? I have a RAID 1 array of 2 SSDs that serves as the OS boot drive. Newer Ceph releases use the BlueStore backend, which divides the storage into separate block devices. Ceph is designed to run on commodity hardware, which … I now want to use the … Select a node in the cluster and open the Ceph section in the menu tree. If Ceph is not yet installed, you will see a prompt offering to install it. I think the relationship between an OSD and its DB/WAL in Ceph may be very similar to a disk group in vSAN.

Clyso Blog

Proxmox VE provides an easy-to-use Ceph installation wizard.

Deploy Hyper-Converged Ceph Cluster

After installation of packages, you need to create an initial Ceph configuration on just one node, based on your network (…

10.2 Initializing the Ceph installation and configuration

In general, SSDs will provide more IOPS than spinning disks. Ceph performance tuning is an interesting and challenging topic, and I hope to document what I run into as a series. My expertise is limited, so treat it as reference only. Ceph performance bottleneck analysis and optimization, part three: CephFS.

`ceph-volume lvm new-db` fails if the OSD already has a DB attached. `ceph-volume lvm new-wal` attaches the given logical volume to the given OSD as a WAL volume. Aside from the disk type, Ceph performs best with evenly sized disks and the same number of disks in each node. If a faster disk is used for multiple …
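The attach-DB / attach-WAL behavior mentioned above, and the related `migrate` subcommand, take an OSD id, an OSD fsid and a logical volume in `vg/lv` form. Below is a minimal sketch that only assembles and validates the argument lists; it does not run anything. The OSD id, fsid and vg/lv names in the example are hypothetical placeholders. Take the real values from `ceph-volume lvm list` on the OSD host.

```python
# Sketch only: builds argv lists for ceph-volume subcommands that attach or
# relocate BlueFS volumes on an existing OSD. All example values are hypothetical.
import shlex

VALID_SOURCES = {"data", "db", "wal"}


def check_vg_lv(target: str) -> None:
    """ceph-volume expects logical volumes in vg/lv form."""
    vg, sep, lv = target.partition("/")
    if not sep or not vg or not lv or "/" in lv:
        raise ValueError(f"expected vg/lv, got {target!r}")


def attach_cmd(kind: str, osd_id: int, osd_fsid: str, target: str) -> list[str]:
    """`ceph-volume lvm new-db` / `new-wal`: attach an LV to an existing OSD."""
    if kind not in ("db", "wal"):
        raise ValueError("kind must be 'db' or 'wal'")
    check_vg_lv(target)
    return ["ceph-volume", "lvm", f"new-{kind}",
            "--osd-id", str(osd_id), "--osd-fsid", osd_fsid,
            "--target", target]


def migrate_cmd(osd_id: int, osd_fsid: str, sources: list[str], target: str) -> list[str]:
    """`ceph-volume lvm migrate`: move BlueFS data from `sources` to `target`."""
    bad = set(sources) - VALID_SOURCES
    if bad or not sources:
        raise ValueError(f"invalid --from values: {sorted(bad)}")
    check_vg_lv(target)
    return ["ceph-volume", "lvm", "migrate",
            "--osd-id", str(osd_id), "--osd-fsid", osd_fsid,
            "--from", *sources, "--target", target]


if __name__ == "__main__":
    fsid = "00000000-0000-0000-0000-000000000000"  # hypothetical OSD fsid
    print(shlex.join(attach_cmd("db", 1, fsid, "ssd-vg/osd1-db")))
    # Move DB and WAL back onto the main (block) LV, as in the migrate docs.
    print(shlex.join(migrate_cmd(1, fsid, ["db", "wal"], "hdd-vg/osd1-block")))
```

Keeping the commands as lists, and quoting them with `shlex.join` only for display, avoids shell-quoting surprises if the lists are later passed to `subprocess.run`.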

Ceph BlueStore over RDMA performance gain

Find the `db` device you want to change and note its device path.

Before you can build Ceph from source, you need to install several libraries and tools.

ceph-volume: fix bug with miscalculation of required db/wal slot size for VGs with multiple PVs (pr#43948, Guillaume Abrioux, Cory Snyder)
ceph-volume: fix lvm …

PVE-based Ceph cluster build (II): Ceph storage pool build and

Storage Devices and OSDs Management Workflows

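Introduction: as the business grows, the OSDs accumulate a lot of data. If a DB or WAL device has to be replaced, deleting the OSD and recreating it triggers a large amount of data migration. This article describes how to replace a DB or WAL device when needed. One reason is moving to a faster SSD. Another is that some partitions on the DB disk are damaged while the DB or WAL partition itself is still intact, so it needs to be replaced …

When using ceph-volume, if you want to place the DB and WAL on separate SSD partitions, you must create the partitions manually beforehand. ceph-volume is expected to offer automatic partitioning later; for now it has to be done by hand. Take creating the WAL and DB for OSD-1 as an example: use sgdisk to create the new partitions, specifying each partition's PARTUUID and label.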
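The manual partitioning step above can be sketched as a small generator of `sgdisk` invocations. It uses the real flags `--new`, `--change-name` and `--partition-guid`, each taking a `partnum:` prefix. The device `/dev/sdi`, the sizes and the labels `osd-1-wal` / `osd-1-db` are hypothetical examples, not values from the source. Review the printed commands before running them, because sgdisk rewrites the partition table.

```python
# Sketch: build sgdisk commands that pre-create WAL and DB partitions for one
# OSD, each with an explicit PARTUUID and a GPT label. Values are hypothetical.
import shlex
import uuid
from typing import Optional


def sgdisk_new_partition(device: str, partnum: int, size: str, label: str,
                         partuuid: Optional[str] = None) -> list[str]:
    """Create partition `partnum` of `size` (e.g. "4G") starting at the default sector."""
    partuuid = partuuid or str(uuid.uuid4())  # random PARTUUID unless one is given
    return [
        "sgdisk",
        f"--new={partnum}:0:+{size}",              # start 0 = sgdisk's default start
        f"--change-name={partnum}:{label}",        # GPT partition label
        f"--partition-guid={partnum}:{partuuid}",  # fixed PARTUUID
        device,
    ]


if __name__ == "__main__":
    # Hypothetical layout for OSD-1 on a shared SSD /dev/sdi.
    for cmd in (sgdisk_new_partition("/dev/sdi", 1, "4G", "osd-1-wal"),
                sgdisk_new_partition("/dev/sdi", 2, "60G", "osd-1-db")):
        print(shlex.join(cmd))
```

Fixing the PARTUUID up front means the partitions can later be referenced through the stable `/dev/disk/by-partuuid/<uuid>` path instead of a `/dev/sdX` name that may change between boots.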

`--block.db nvme-pool/$device_node`. Use the Proxmox VE Ceph installation wizard (recommended) or run the following command on one node: `pveceph init --network 10.`…
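`pveceph init --network` takes the Ceph network in CIDR notation. The address in the line above is cut off, so the sketch below uses `10.10.10.0/24` purely as a hypothetical example. It builds the command and rejects malformed networks with Python's `ipaddress` module. Passing `strict=True`, so that an address with host bits set such as `10.10.10.1/24` is refused, is a design choice of this sketch, not a documented pveceph requirement.

```python
# Sketch: validate a CIDR network before building `pveceph init --network ...`.
# The example network is hypothetical.
import ipaddress
import shlex


def pveceph_init_cmd(network: str) -> list[str]:
    # strict=True raises ValueError if host bits are set (e.g. 10.10.10.1/24).
    net = ipaddress.ip_network(network, strict=True)
    return ["pveceph", "init", "--network", str(net)]


if __name__ == "__main__":
    print(shlex.join(pveceph_init_cmd("10.10.10.0/24")))
```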

CEPH shared SSD for DB/WAL?

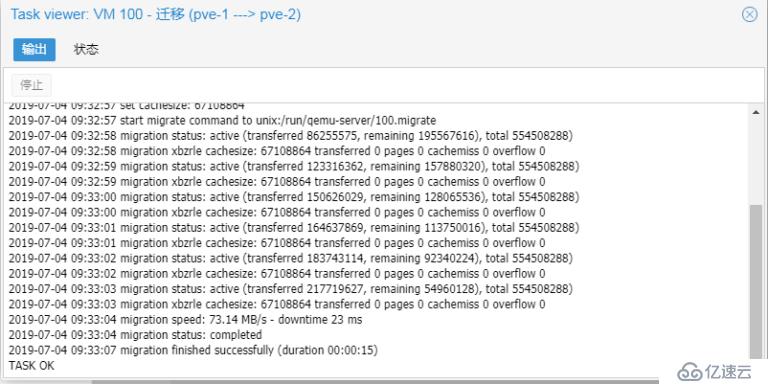

According to "PVE-based Ceph cluster build (I): Cluster 40GbE×2 aggregation test", aggregating two 40GbE links between the test nodes achieves roughly 50GbE of interconnect bandwidth. The cluster consists of seven nodes: three pure storage nodes and four combined storage/compute nodes, all on the same intranet. This is crucial.

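Another way to speed up OSDs is to use a faster disk as a journal or DB/Write-Ahead-Log device; see creating Ceph OSDs (…com/wiki/Deploy_Hyper-Converged_Ceph_Cluster).

db: stores BlueStore's internal metadata, based …

wal: holds BlueStore's internal journal, or write-ahead log.

Ceph performance bottleneck analysis and optimization, part two: RBD. Building the test model.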

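Historically this customer used the upstream Ceph Ubuntu packages, and we were still using them here (rather than self-compiling or using cephadm with containers).

Ceph disk planning

In my environment, sda through sdh are 8 HDDs and sdi through sdl are 4 SSDs. To improve HDD performance, we use sdi as the DB disk for all HDDs and sdj as the WAL disk for all HDDs. Note that the DB disk and the WAL disk cannot be the same; if …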
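The disk plan above (eight HDDs `sda` to `sdh`, one shared DB disk `sdi`, one shared WAL disk `sdj`) can be turned into one `pveceph osd create` call per HDD using its `--db_dev` and `--wal_dev` options. This is a minimal sketch that only prints the commands. It enforces the "DB and WAL disks must differ" rule stated in the text. The DB/WAL sizes are left to pveceph's defaults here; `--db_dev_size` / `--wal_dev_size` can set them explicitly.

```python
# Sketch: one `pveceph osd create` per HDD, all sharing the same DB and WAL SSDs.
import shlex


def plan_osds(hdds: list[str], db_dev: str, wal_dev: str) -> list[list[str]]:
    if db_dev == wal_dev:
        # Mirrors the layout rule stated in the text above.
        raise ValueError("DB and WAL disks must differ in this layout")
    return [["pveceph", "osd", "create", hdd, "--db_dev", db_dev, "--wal_dev", wal_dev]
            for hdd in hdds]


if __name__ == "__main__":
    hdds = [f"/dev/sd{c}" for c in "abcdefgh"]  # sda .. sdh from the plan above
    for cmd in plan_osds(hdds, "/dev/sdi", "/dev/sdj"):
        print(shlex.join(cmd))
```

Because every OSD's DB lands on `sdi` and every WAL on `sdj`, losing either SSD takes all eight OSDs with it. Keep that in mind when choosing how many HDDs share one fast disk.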

PVE ships with graphical tools for installing and managing Ceph, so once the cluster is built, Ceph can be installed and configured quickly. PVE also provides command-line tools for customizing the Ceph configuration.

Executive Summary

To specify a WAL device or DB device, run the following command: `ceph-volume lvm prepare --bluestore --data … --block.…` A service specification of type OSD is a way to describe a cluster layout using disk properties. Once the cache disk fails, the entire disk group stops working. There is a very confusing point in Ceph. Attach vgname/lvname … All OSDs are using HDDs, so I believe I'd benefit from using an SSD for that. `--block.wal nvme-pool/$device_node`.
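The truncated `ceph-volume lvm prepare` command above takes the data device via `--data` and optional fast volumes via `--block.db` and `--block.wal`. The sketch below assembles that argument list. The device `/dev/sdc` and the vg/lv names `ceph-db/db-sdc` and `ceph-wal/wal-sdc` are hypothetical. The source's own fragments point both flags at `nvme-pool/$device_node`, but this sketch rejects using the same volume for both.

```python
# Sketch: assemble `ceph-volume lvm prepare` with optional DB and WAL volumes.
# Device and vg/lv names are hypothetical.
import shlex
from typing import Optional


def prepare_cmd(data: str, db: Optional[str] = None, wal: Optional[str] = None) -> list[str]:
    if db is not None and db == wal:
        raise ValueError("use distinct volumes for block.db and block.wal")
    cmd = ["ceph-volume", "lvm", "prepare", "--bluestore", "--data", data]
    if db is not None:
        cmd += ["--block.db", db]    # RocksDB metadata on the fast device
    if wal is not None:
        cmd += ["--block.wal", wal]  # write-ahead log on the fast device
    return cmd


if __name__ == "__main__":
    print(shlex.join(prepare_cmd("/dev/sdc", db="ceph-db/db-sdc", wal="ceph-wal/wal-sdc")))
```

Giving only `db` is a valid variant. Per the Ceph BlueStore docs, the WAL then lives on the fastest available device, so a DB device also provides the WAL benefit.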

Ceph optimization: using SSDs as a cache pool, explained in detail

How to get better performance in a Proxmox VE + Ceph cluster

Trying to set up a brand-new Proxmox/Ceph cluster.

Move BlueFS data from the main, DB, and WAL devices to the main device, removing WAL and DB: `ceph-volume lvm migrate --osd-id 1 --osd-fsid … --from db wal --target …`

Using the web-based wizard.

What is the relationship between Cache Tiering and DB/WAL in BlueStore?

If a faster disk is used for multiple OSDs, a proper balance between OSD and WAL/DB (or journal) disk must be selected; otherwise the faster disk becomes the bottleneck for all linked OSDs. You'll find sizing … `--block.db nvme-pool/sdc`.

Got a few problems: 1. …

Generally, we can store the DB and WAL on a fast solid-state drive (SSD) device, since the internal journaling usually journals small writes. Tuning the Ceph configuration for an all-flash cluster resulted in material performance improvements compared to the default (out-of-the-box) configuration.

It gives users an abstract way to tell Ceph which disks should become OSDs with the desired configuration, without having to know the specifics of the device …
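The "proper balance" point can be made concrete with a deliberately simplified model: the fast disk becomes the bottleneck once the combined write demand of its linked OSDs exceeds what it can sustain. The sketch below uses sustained write throughput in MB/s as the only metric, plus an arbitrary 80% headroom factor. Both are assumptions of this sketch. A real sizing decision should also weigh IOPS, latency, endurance and DB capacity. The figures in the example are hypothetical, not measurements.

```python
# Simplified throughput-only model of how many OSDs one fast DB/WAL device can
# serve before it becomes the bottleneck. All numbers are hypothetical.


def max_osds_per_fast_device(fast_mb_s: int, per_osd_mb_s: int, headroom_pct: int = 80) -> int:
    """Largest OSD count whose combined writes stay within headroom_pct of the fast disk."""
    if fast_mb_s <= 0 or per_osd_mb_s <= 0 or not 0 < headroom_pct <= 100:
        raise ValueError("throughputs must be positive and headroom in (0, 100]")
    # Integer arithmetic avoids floating-point rounding surprises.
    return (fast_mb_s * headroom_pct) // (per_osd_mb_s * 100)


def is_bottleneck(fast_mb_s: int, n_osds: int, per_osd_mb_s: int) -> bool:
    """True if the linked OSDs together can write faster than the fast disk."""
    return n_osds * per_osd_mb_s > fast_mb_s


if __name__ == "__main__":
    # Hypothetical: an SSD sustaining 2000 MB/s in front of HDDs writing ~150 MB/s each.
    print(max_osds_per_fast_device(2000, 150))  # 10 OSDs at 80% headroom
    print(is_bottleneck(2000, 12, 200))          # 2400 MB/s demand > 2000 MB/s
```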

Hardware Recommendations — Ceph Documentation


CEPH Bluestore WAL/DB on Software RAID1 for redundancy

ceph-volume is a single-purpose command-line tool to deploy logical volumes as OSDs, trying to maintain a similar API to ceph-disk when preparing, activating, and creating OSDs. Ceph is a distributed object store and file system designed to provide excellent performance, reliability, and scalability.

Dec 18, 2023 Yuri Weinstein.

Ceph bcache optimization, choice of WAL/DB disk models, and reserved capacity

The three roles of Ceph disks: Data disk: the disk that stores the data; to save cost, HDDs are usually used.

Ceph performance bottleneck analysis and optimization, part one: RADOS on hybrid disks.

WAL size: SUSE recommends 4 GB.

Hello, I'm looking over the Proxmox documentation for building a Ceph cluster here. This document is for a development version of Ceph.

In the cluster deployment, each Ceph node was configured with twelve 4 TB data disks and two 3.2 TB NVMe disks. Each 4 TB data disk serves as the backing disk of a bcache device. Each NVMe disk holds the DB and WAL partitions for 6 OSDs and also acts as the bcache cache disk.

For more information on how to effectively …

Alternate Tuning

In an effort to improve OSD performance on NVMe drives, a commonly shared RocksDB configuration has been circulating on the … As such, it delivered up to 134% higher IOPS, ~70% lower average latency, and ~90% lower tail latency on an all-flash cluster. That ruled out the first issue.

Background: in enterprise storage, hybrid SSD+HDD setups are a typical deployment that balances cost, performance, and capacity. However, 4k random-write testing by the NetEase Shufan storage team showed that adding NVMe SSDs for Ceph's WAL and DB less than doubled performance. The NVMe disks still had plenty of performance headroom. We therefore hoped to use bottleneck analysis to explore optimizations that could improve performance further.

The cluster is sometimes filled up to 85% (WARNING), and I have to intervene manually and free some storage space. So, not the data, just the DB!

`ceph-volume lvm batch --osds-per-device 4 /dev/nvme0n1`
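The bcache deployment above (twelve data disks and two 3.2 TB NVMe disks per node, so 6 OSDs per NVMe) implies a fixed per-OSD slice of each NVMe. Here is a small worked calculation, assuming decimal units (3.2 TB = 3200 GB) and reserving the 4 GB WAL that SUSE recommends. The source does not say how the remainder is split between the DB partition and the bcache cache, so this sketch only reports the combined budget.

```python
# Worked arithmetic for sharing one NVMe among several OSDs (decimal GB assumed).


def per_osd_nvme_budget(nvme_gb: float, osds_per_nvme: int, wal_gb: float) -> tuple[float, float]:
    """Return (per-OSD share, share left for DB + bcache cache after the WAL)."""
    share = nvme_gb / osds_per_nvme
    remaining = share - wal_gb
    if remaining <= 0:
        raise ValueError("the WAL alone exceeds the per-OSD share")
    return round(share, 1), round(remaining, 1)


if __name__ == "__main__":
    data_disks_per_node, nvme_per_node = 12, 2
    osds_per_nvme = data_disks_per_node // nvme_per_node  # 6, as in the text
    share, left = per_osd_nvme_budget(3200, osds_per_nvme, 4)
    print(f"{share} GB per OSD, {left} GB left for DB + bcache cache")
```

Real usable NVMe capacity is usually somewhat below the marketed figure, so treat the result as an upper bound.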

This file is automatically …

Otter7721 said: I am evaluating VMware vSphere and Proxmox VE.

Logical volume format is vg/lv.

A 4-node PVE cluster with 3 Ceph BlueStore nodes, 36 OSDs in total.

After this procedure is finished, there should be four OSDs, block should be on the four HDDs, and each HDD should have a 50GB logical volume (specifically, a DB device) on the shared SSD.

The Ceph Monitor manages a configuration database of Ceph options, which centralizes configuration management by storing configuration options for the entire storage cluster.

The current plan is to buy an Intel Optane 900P and partition it into WAL/DB devices to accelerate the HDDs. Optane's official spec for 4k random writes is 500,000 IOPS. Since the Samsung 983 ZET has very good 4k random read performance, it is …

Deploying Ceph OSDs with advanced service specifications.

BlueStore can use up to three devices or partitions: data, DB, and write-ahead log (WAL). I verified that TCMalloc was compiled in. … db/wal data of OSDs in OpenStack, Proxmox, and Red Hat clusters. To get the best performance out of Ceph, run the following on separate drives: (1) operating systems, (2) OSD data, and (3) BlueStore db.
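The four-OSD procedure described above puts one 50 GB DB logical volume per HDD on a shared SSD. The sketch below checks that the SSD has room, then emits an `lvcreate` and a `ceph-volume lvm create` per HDD. It assumes a volume group named `ceph-db` already exists on the SSD (for example via `vgcreate`). It also assumes the HDDs are `/dev/sdb` to `/dev/sde` and uses the LV names `db-0` to `db-3`. All of these are hypothetical. Note that `lvcreate -L 50G` means 50 GiB, so the free-space figure should be given in GiB too.

```python
# Sketch: one DB LV per HDD on a shared SSD volume group, then one OSD per HDD.
# VG name, device names and LV naming are hypothetical.
import shlex


def db_lv_plan(hdds: list[str], vg: str, db_size_gib: int, ssd_free_gib: int):
    """Return (GiB needed on the SSD, list of commands)."""
    needed_gib = db_size_gib * len(hdds)
    if needed_gib > ssd_free_gib:
        raise ValueError(f"need {needed_gib} GiB on the SSD, only {ssd_free_gib} GiB free")
    steps = []
    for i, hdd in enumerate(hdds):
        lv = f"db-{i}"  # hypothetical LV naming scheme
        # lvcreate interprets "G" as GiB.
        steps.append(["lvcreate", "-L", f"{db_size_gib}G", "-n", lv, vg])
        steps.append(["ceph-volume", "lvm", "create", "--bluestore",
                      "--data", hdd, "--block.db", f"{vg}/{lv}"])
    return needed_gib, steps


if __name__ == "__main__":
    needed, steps = db_lv_plan(["/dev/sdb", "/dev/sdc", "/dev/sdd", "/dev/sde"],
                               "ceph-db", 50, 240)
    print(f"SSD space needed: {needed} GiB")
    for cmd in steps:
        print(shlex.join(cmd))
```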

This creates an initial configuration at /etc/pve/ceph.conf with a dedicated network for Ceph.

Run the `ceph-volume lvm list` command to see the current `db` devices and their status.
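To find the `db` devices programmatically, `ceph-volume lvm list` can print JSON with `--format json`. The sketch below extracts, per OSD id, the LV whose `type` is `db` along with its underlying devices. The sample input is an abridged, hypothetical example. The field names `type`, `lv_path` and `devices` are assumed from typical output, so check them against your ceph-volume version before relying on this.

```python
# Sketch: pick out DB volumes from `ceph-volume lvm list --format json` output.
import json
import subprocess


def db_devices(lvm_list_json: str) -> dict:
    """Map OSD id -> {'lv_path', 'devices'} for every LV whose type is 'db'."""
    result = {}
    for osd_id, lvs in json.loads(lvm_list_json).items():
        for lv in lvs:
            if lv.get("type") == "db":
                result[osd_id] = {"lv_path": lv.get("lv_path"),
                                  "devices": lv.get("devices", [])}
    return result


def read_from_host() -> str:
    """Run ceph-volume on the OSD host (requires root); not called below."""
    return subprocess.run(["ceph-volume", "lvm", "list", "--format", "json"],
                          capture_output=True, text=True, check=True).stdout


# Abridged, hypothetical output; real entries carry many more fields and tags.
SAMPLE = """{
  "1": [
    {"type": "block", "lv_path": "/dev/ceph-hdd/osd-block-1", "devices": ["/dev/sdb"]},
    {"type": "db", "lv_path": "/dev/ceph-db/osd-db-1", "devices": ["/dev/nvme0n1"]}
  ],
  "2": [
    {"type": "block", "lv_path": "/dev/ceph-hdd/osd-block-2", "devices": ["/dev/sdc"]}
  ]
}"""

if __name__ == "__main__":
    for osd, info in sorted(db_devices(SAMPLE).items()):
        print(osd, info["lv_path"], ",".join(info["devices"]))
```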


The installation wizard has several steps, and each one must succeed to complete the Ceph installation. Ceph can be very slow when not compiled with the right cmake flags and compiler optimizations.

migrate — Ceph Documentation

/dev/sda2 is a .

Build Ceph — Ceph Documentation

This option was added in Ceph PR #35277 to keep the overall WAL size restricted to 1 GB, which was the maximum size it could previously grow to with four 256 MB buffers. Some distributions that support Google's memory profiler tool may … The earlier object store, FileStore, requires a file system on top of raw block devices. … db and data partitions.

I want to share the following tests with you.

My question: is there a way to use ceph-volume to create multiple OSDs on a single disk, while …

When using a mixed spinning-and-solid-drive setup, it is important to make a large enough block.db logical volume for BlueStore. Then, using ceph-volume lvm, I passed `--block.db` and `--block.`… In this case, we could make better use of the fast device and boost Ceph performance at an acceptable cost. Some advantages of Ceph on Proxmox VE are: …

To change the `db` device in Ceph, you can use the `ceph-volume` command-line tool. The steps for changing the `db` device are as follows.

For example, 4 x 500 GB … OSD: st6000nm0034. The logical volumes … `./install-deps.sh` We have 4 Ceph nodes, each with 8 OSDs of 3 TB each.
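For the cluster just described (4 nodes, 8 OSDs of 3 TB each), here is a back-of-the-envelope capacity check. It ties in with the 85% warning mentioned earlier. It assumes replicated pools with size 3 (the Proxmox VE default for new pools) and the default nearfull ratio of 0.85, and it ignores TB/TiB differences and BlueStore overhead.

```python
# Rough capacity estimate: raw, usable after replication, and the nearfull point.


def capacity_tb(nodes: int, osds_per_node: int, osd_tb: float,
                replicas: int = 3, nearfull_ratio: float = 0.85):
    raw = nodes * osds_per_node * osd_tb
    usable = raw / replicas          # every object is stored `replicas` times
    return raw, usable, usable * nearfull_ratio


if __name__ == "__main__":
    raw, usable, warn_at = capacity_tb(4, 8, 3)
    print(f"raw {raw} TB, usable ~{usable:.1f} TB, nearfull warning near {warn_at:.1f} TB")
```

The nearfull check is evaluated per OSD, so an uneven data distribution can trigger the warning well before the pool-level figure above is reached.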
