PySpark filter from list

Drop rows containing values from a list in PySpark?

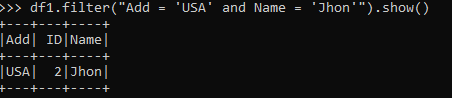

Find similar strings present in a DataFrame column without using a for loop in PySpark. I am looking for a clean way to tackle the following problem: I want to filter a DataFrame so that it only contains rows that have at least one value filled in for a predefined list of column names. where() is an alias for filter().
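The "at least one value filled in" requirement can be expressed as a single SQL condition string, which filter() and where() accept. The following is a minimal sketch in plain Python that only builds that string; the helper name any_filled_condition and the column names email and phone are hypothetical, and column names that themselves contain backticks are not handled.

```python
def any_filled_condition(columns):
    """Build a SQL expression that is true when at least one column is non-null."""
    if not columns:
        raise ValueError("columns must not be empty")
    # Backticks guard column names that contain spaces or dots.
    return " OR ".join(f"`{name}` IS NOT NULL" for name in columns)


required_columns = ["email", "phone"]  # hypothetical column names
condition = any_filled_condition(required_columns)
print(condition)  # `email` IS NOT NULL OR `phone` IS NOT NULL
```

The resulting string could then be passed to a DataFrame as df.filter(condition), so the list of columns is maintained in one place instead of being spelled out condition by condition.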

Filtering a pyspark dataframe using isin by exclusion

I have another list called urls that contains strings. I am looking to find a URL from the urls list inside the lists in the href column, along with the position of that URL in the href list.

How to extract rows that contain partially matched strings in all entries of a column. I have a PySpark DataFrame that has an array column, and I want to filter the array elements by applying some string matching conditions. DataFrame df has two columns: query and href.

Both filter() and where() accept a Boolean expression as an argument and return a new DataFrame containing only the matching rows. As an example: rdd = sc.parallelize([(0,1), (0,1), (0,2), (1,2), (1,10), (1,20), (3,18), (3,18), (3,18)]) followed by df = sqlContext.createDataFrame(rdd, ["id", "score"]).

Pyspark: Filter data frame if column contains string from another column (SQL LIKE statement). Filtering rows in a PySpark DataFrame and creating a new column that contains the result. This post explains how to filter values from a PySpark array column. I am trying to get all rows within a DataFrame where a column's value is not within a list (so filtering by exclusion). The between function checks whether a value lies between two values; its inputs are a lower bound and an upper bound.

Filtering pyspark dataframe if text column includes words in specified list

One option is regexp_extract, exploiting the fact that an empty string is returned if there is no match. In PySpark, there are multiple ways to filter data in a DataFrame. Drop records based on multiple column values using PySpark. Based on @user3133475's answer, it is also possible to call the isin() function from col() like this: from pyspark.sql.functions import col.

Filtering PySpark Arrays and DataFrame Array Columns

For example, suppose I had a DataFrame like this.

I have the following input df, and I would like to add a column CAT_ID. CAT_ID takes value 2 if ID contains 36 or 46.

On another note, while your approach, i.e. sequentially looping through each S_ID in my list and running the operations, i.e. ... An example implementation in Scala: collection.filter{case (k,_) => !keys.exists(_ == k)}

Pyspark: filter out multiple rows based on a condition in one row. Filter the PySpark DataFrame based on values in a list. In this article, we are going to filter the rows in the DataFrame based on matching values in the list by using isin in a PySpark DataFrame. How do I filter a PySpark DataFrame in a loop and append to a DataFrame?

Here is something that I tried that can give you some direction. Prepare some test data: words = [x.lower() for x in ['starbucks', 'Nvidia', 'IBM', 'Dell']]. An example DataFrame begins with createDataFrame([('1','a'), ('2','b'), ('3','b' ... Thanks @mcd for the quick response.

How to set a new list value based on a condition in a DataFrame in PySpark? PySpark: How to filter on multiple columns coming from a list? Returns an array of elements for which a predicate holds in a given array. I would want to filter the elements within each array that contain the string 'apple' or start with 'app', etc.

Syntax: dataframe.filter(condition). To do this, we use the filter() method of PySpark and pass the column conditions as an argument: df_filtered = df.filter(...). You can filter a PySpark DataFrame for rows that contain a value from a specific list by first specifying the values to filter for.

Removing rows based on a condition in PySpark. How to filter a column on values in a list in PySpark?

Pyspark filter where value is in another dataframe

I found the join implementation to be significantly faster than where for large DataFrames: def filter_spark_dataframe_by_list(df, column_name, ...

I would like to use a list inside the LIKE operator in PySpark in order to create a column. In one row, query is a random string and href is a list of strings. Pyspark: convert a Column containing strings into a list of strings and save it into the same column. Pyspark: filter dataframe by columns of another dataframe. For example, sample input data: df_input |dim1|dim2|byvar|value1|. Use a loop inside a filter function.

You should create a udf responsible for filtering keys from the map and use it with the withColumn transformation to filter keys from the collection field. In fact, the dataset for this post is a simplified version; the real one has more than 10 elements in the struct and more than 10 key-value pairs in the metadata map.

The PySpark filter function is a powerhouse for data analysis. Here are some common approaches: using the filter() or where() methods. Logical with Pyspark with When. How to filter a DataFrame by groupby on a second column in PySpark. I want to select different columns matching column names from different lists and subset the rows according to different criteria. Conditions can be combined with &, as in (df.language == "Python") & (df. ...

Using filter() to Select Dataframe Rows from List of Values

How to filter column on values in list in pyspark?

Returns null in the case of an unparseable string. Syntax: isin([element1, element2, ..., element n]). df.score gives you a column, and in is not defined on that column type; use isin instead. PySpark: filtering and selecting based on a condition. The example DataFrame is created with sqlContext.createDataFrame(rdd, ["id", "score"]), after which a list is defined.

PySpark Where Filter Function

I'm trying to filter data in a DataFrame. I have a requirement to filter the PySpark DataFrame where the user will pass the filter condition directly as a string parameter. This will allow you to bypass adding the extra column (if you ...). CAT_ID takes value 1 if ID contains 16 or 26. PySpark: Filter a DataFrame using a condition. What I do not want to do is list all the conditions separately, like so:

PySpark: How to filter on multiple columns coming from a list?

You can do so with select and a list comprehension. Filter rows for a column value in a list of words in PySpark. Now let's say I have a list of filtering conditions, for example, a list detailing that columns A and B shall be equal to 1. Try to extract all of the values in the list l and concatenate the results. You are trying to compare a list of words to a list of words. To add it as a column, you can simply call it during your select statement.

Filter a list of rows based on a column value in PySpark. Implementation in Python: return {k: collection[k] for k in collection if k not in keys}

Method 1: Using the filter() method. Syntax: dataframe.filter(condition), where condition is the ... The following seems to be working for me (someone let me know if this is bad form or inaccurate, though). You could use a list comprehension with pyspark.sql.functions.regexp_extract.

filter(col, f): f is a function that returns the Boolean expression. It also explains how to filter DataFrames with array columns (i.e. reduce the number of rows in a DataFrame).
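The Python one-liner for dropping keys from a map can be written as a small standalone function. This is a sketch in plain Python only: the name drop_keys and the sample metadata dict are hypothetical, and wrapping the function in a udf for use on a MapType column is not shown.

```python
def drop_keys(collection, keys):
    """Return a copy of `collection` without the entries whose key is in `keys`."""
    blocked = set(keys)  # set lookup avoids rescanning the list for every key
    return {k: v for k, v in collection.items() if k not in blocked}


metadata = {"a": 1, "b": 2, "c": 3}  # hypothetical map value
cleaned = drop_keys(metadata, ["b", "c"])
print(cleaned)  # {'a': 1}
```

The original dict is left untouched, which matters if the same value is reused elsewhere.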

PySpark: How to Filter Rows Based on Values in a List

condition: Column or str. A Column of types.BooleanType or a string of SQL expression.

The idea is to loop through final_columns: if a column is in df.columns then add it; if it is not, then use lit to add ... Make a DataFrame filter a variable so I can set it once and reuse it. How to group data by a column in PySpark? First, create a new column for each end of the window (in this example, it's 100 days to 200 days after the date in column column_name). List of columns meeting a certain condition. Filtering works exactly as @titiro89 described.

The function can take one of the following forms: Unary (x: Column) -> Column: ...

Pyspark: filter dataframe based on column name list

A list of conditions such as func.col('A') == 1, func.col('B') == 1. Pyspark: filter dataframe based on column name list. Pyspark: Filter dataframe based on row values of another dataframe. PySpark: Using lists inside the LIKE operator. Test data: words = [x.lower() for x in ['starbucks', 'Nvidia', 'IBM', 'Dell']], data = [['i love ...

Filter Spark DataFrame using Values from a List

The primary method used for filtering is filter() or its alias where().

pyspark dataframe filter or include based on list

Grouping PySpark rows based on a condition. Filters rows using the given condition. Supports Spark Connect. Parses a column containing a JSON string into a MapType with StringType as keys type, StructType or ArrayType with the specified schema.

An example with a dataset df and a column named dt_mvmt: before filtering, make sure you have the right count of the dataset with df.count(). Then keep only the rows where dt_mvmt is not null, using isNotNull(). Finally, check the count again to confirm that NULL values were present (this is important when dealing with a large dataset).

One snippet adds a column with withColumn('After100Days', ...). Another defines a DataFrame starting from rdd = sc. ...

Pyspark: filter dataframe based on column name list. Pyspark: How to filter on a list of two-column value pairs? Pyspark: column item in another column list. Pyspark dataframe filter using variable list values.

filter(condition: ColumnOrName) → DataFrame. How to filter a DataFrame in PySpark. I see some ways to do this without using a udf.

Groupby dataframe and filter in PySpark. To find all rows that contain one of the strings from the list, you can use the rlike method.
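Since rlike takes a single regular expression, a list of strings first has to be combined into one alternation pattern. The sketch below builds such a pattern in plain Python and checks it with the re module; the helper name build_any_of_pattern and the sample strings are hypothetical. It assumes Python's escaping is also valid in the Java regex dialect that Spark uses, which holds for common punctuation but is not guaranteed for every character.

```python
import re


def build_any_of_pattern(values):
    """Join literal strings into one alternation, escaping regex metacharacters."""
    return "|".join(re.escape(v) for v in values)


needles = ["starbucks", "nvidia", "c++"]  # hypothetical search strings
pattern = build_any_of_pattern(needles)

# Local check with Python's re; like rlike, re.search matches anywhere in the text.
texts = ["i love starbucks", "plain text", "written in c++"]
matches = [t for t in texts if re.search(pattern, t)]
print(pattern)  # starbucks|nvidia|c\+\+
print(matches)  # ['i love starbucks', 'written in c++']
```

The pattern could then be used as df.filter(col("text").rlike(pattern)), where text is a hypothetical column name. Lowercasing both the column and the search strings beforehand gives a case-insensitive match.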

In this guide, we delve into its intricacies, provide real-world examples, and empower you to optimize your ... Filter large DataFrame. In PySpark, we often need to create a DataFrame from a list; in this article, I will explain creating a DataFrame and an RDD from a list using ... I think filter isn't working because it expects a Boolean output from the lambda function, and isin just compares with the column. Yes, it's possible. The PySpark filter() function is used to filter the rows from an RDD/DataFrame based on the given condition or SQL expression; you can also use the where() clause.

Collecting the result of PySpark Dataframe filter into a variable

How to filter keys in MapType in PySpark?

Running the collect action to pull all the S_ID values from your initial DataFrame df to your driver node into a list mylist; separately counting the number of occurrences of S_ID in your initial DataFrame, then executing another ...