PySpark read CSV

apache spark sql - how to read csv file in pyspark?

How to read Avro files in PySpark on a Jupyter Notebook? Avro is a built-in but external data source module since Spark 2.x. The legacy entry point, SQLContext, is imported from pyspark.sql. For CSV, see the examples, options and formats of the CSV data source.

Reading a CSV file in PySpark with double quotes and newline characters: use the quote and escape options of the csv method to specify the characters used to quote and escape fields in the file. Each must be a single character.

How to Read CSV File into PySpark DataFrame (3 Examples): you can use the spark.read.csv() function, that is DataFrameReader.csv(), to read a CSV file into a PySpark DataFrame with different options. Its signature begins csv(path: Union[str, List[str]], schema: ...), and the more general DataFrameReader.load(path=None, format=None, schema=None, **options) takes the format as an argument. To read a CSV file in PySpark with a given delimiter, use the sep parameter of the csv() method. Once the CSV file is ingested into HDFS, you can easily read it with Spark. In this article, we shall discuss different Spark read options.
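As a rough sketch of the sep parameter mentioned above: the helper below forwards a delimiter to DataFrameReader.csv(). The name read_delimited and the pipe default are hypothetical, chosen only for illustration, and an existing SparkSession is assumed to be passed in as spark.

```python
def read_delimited(spark, path, sep="|"):
    """Read a delimited text file into a DataFrame.

    `spark` is assumed to be an existing SparkSession. The function name
    and the pipe default are illustrative, not part of PySpark.
    """
    # sep sets the field delimiter; header=True takes column names from the first line.
    return spark.read.csv(path, sep=sep, header=True)
```

Passing sep=";" instead would read a semicolon-separated file with the same call.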

How to read csv with second line as header in pyspark dataframe

Read the csv and then create a dataframe with this data. In the csv() signature, schema is typed Union[StructType, str, None] = None and sep is typed Optional[str] = None.

CSV Files

How to read a CSV file in PySpark? CSV is a common format used when extracting and exchanging data between systems and platforms, and Spark provides several read options that help you read files.

Before we dive into reading and writing data, let's initialize a SparkSession. In older code the entry point is created with sc = SparkContext(), and after that the SQL library is introduced with an import from pyspark.sql.

PySpark Read CSV File Using the csv() Method. To read a CSV file using PySpark, you can use the read.csv() method: set csv_file to path/to/your/csv/file and call spark.read.csv(csv_file, header=True, ...). One answer passes sep=';' together with a decimal argument, optionally also header=True. I would recommend reading the CSV using inferSchema = True (for example myData = spark. ...).

Related question: pyspark csv write, fields with newline characters in double quotes.
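A minimal sketch of the steps described above, assuming PySpark is installed: create a SparkSession, then read a CSV file with header and inferSchema. The function names get_spark and read_csv_with_header are hypothetical. The pyspark import sits inside get_spark so the reading helper can be defined without a running Spark installation.

```python
def get_spark(app_name="Read and Write Data Using PySpark"):
    """Create (or reuse) a SparkSession. Requires the pyspark package."""
    # Imported lazily so that defining these helpers does not need Spark.
    from pyspark.sql import SparkSession

    return SparkSession.builder.appName(app_name).getOrCreate()


def read_csv_with_header(spark, csv_file):
    """Read a CSV file whose first line holds the column names."""
    # inferSchema=True makes Spark pass over the data once to guess column
    # types; without it every column is read as a string.
    return spark.read.csv(csv_file, header=True, inferSchema=True)
```

With PySpark available, read_csv_with_header(get_spark(), csv_file) returns a DataFrame whose schema can be checked with printSchema().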

Spark Read() options

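PySpark: reading CSV files with the correct data types. In this article, we introduce how to use PySpark to read CSV files correctly and convert them to the correct data types. PySpark is a Python library for big data processing on Apache Spark. It provides powerful distributed data processing capabilities and can handle many types of data.

Older answers reference the external databricks:spark-csv package. spark.read returns a DataFrame or Dataset depending on the API used. Learn how to read and write CSV files using Spark SQL functions in Python, Scala and Java.

Read CSV With inferSchema Parameter. The result can be cached with .cache(), and of course you can add more options. To install PySpark, run pip install pyspark.

Related questions: pyspark, read csv with custom row delimiter. PySpark, read csv and skip its own header.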
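One way to get correct data types without relying on inference is to pass an explicit schema. The sketch below uses a DDL-formatted schema string. The column names in EVENT_SCHEMA and the function name read_typed_csv are hypothetical, and spark is assumed to be an existing SparkSession.

```python
# Hypothetical columns: two integers and a timestamp, written as a DDL string.
EVENT_SCHEMA = "id INT, amount INT, event_time TIMESTAMP"


def read_typed_csv(spark, path, schema=EVENT_SCHEMA):
    """Read a CSV file using an explicit schema instead of inferSchema."""
    # With a schema supplied, Spark applies these types directly and does
    # not need an extra pass over the data to infer them.
    return spark.read.csv(path, header=True, schema=schema)
```

A StructType built from pyspark.sql.types can be passed in place of the DDL string.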

A Spark 2 release added a new option, wholeFile (current releases expose this behaviour as the multiLine option). First of all, the system needs to recognize the Spark session, starting with the import: from pyspark import SparkConf, SparkContext. You can use csv instead of the Databricks CSV format. The latter now redirects to the default Spark reader.

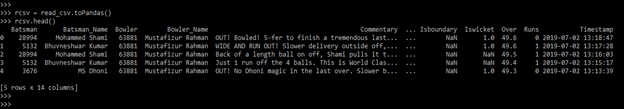

This step creates a DataFrame named df_csv from the CSV file that you previously loaded into your Unity Catalog volume.

pyspark.pandas read_csv function: read a CSV (comma-separated) file into a DataFrame or Series. The sep parameter (str, default ',') is the delimiter to use. This function goes through the input once to determine the input schema if inferSchema is enabled. A later 3.x release adds Spark Connect support.

When I am trying to import a local CSV with Spark, every column is by default read in as a string. One suggested call ends with header='true', inferSchema='true'. Another passes columnNameOfCorruptRecord='broken' and reports the output: Header length: 2, schema size: 3.

To read a CSV file with multiline fields using PySpark, you can use the quote and escape arguments of the spark.read.csv method. For example, you can read a CSV file with '"' as the quote character and an explicit escape character. As another example, take a file that uses the pipe character as the delimiter.

Learn how to read CSV data from a file with Spark using various methods and options. Here are three common ways to do so, starting with Method 1: Read CSV File. In this pyspark read csv tutorial, we will use Spark SQL with a CSV file. csv is a commonly used method in PySpark for reading CSV files and converting them into DataFrame objects. CSV files are a popular way to store and share tabular data. Some notebooks start with import findspark before using Spark.

Related questions: pyspark read text file with multiline column. PySpark read CSV file with custom record separator. Read multiple CSV files with header in only the first file. PySpark, read csv file with quotes.
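The multiline case above can be sketched as follows. The constant MULTILINE_OPTIONS and the function read_multiline_csv are hypothetical names, spark is assumed to be an existing SparkSession, and the choice of a double quote for both quote and escape is an assumption that suits files where an embedded quote is written as two quotes.

```python
# Keyword options for DataFrameReader.csv(); the values are illustrative.
MULTILINE_OPTIONS = {
    "header": True,
    "multiLine": True,  # allow a quoted field to span several physical lines
    "quote": '"',       # character that wraps a field
    "escape": '"',      # character that escapes a quote inside a quoted field
}


def read_multiline_csv(spark, path):
    """Read a CSV file whose quoted fields may contain newline characters."""
    return spark.read.csv(path, **MULTILINE_OPTIONS)
```

If the file escapes quotes with a backslash instead, only the escape value needs to change.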
The csv() method accepts the path of the file as a parameter and returns a DataFrame object. When reading a CSV file, we can specify options that control the read behaviour, such as the file's delimiter, whether to use the first row as column names, and whether to infer column types automatically.

pyspark.pandas read_csv parameters: path (str or list, optional) is the path string storing the CSV file to be read. header is int, default 'infer'.

I am trying to use pyspark to read this CSV and keep only the columns that I know about. The other solutions posted here have assumed that those particular delimiters occur at a specific place. Here is what I have tried.

With the legacy API, create sqlContext = SQLContext(sc) and then read your CSV, setting option(header, true). Make sure you match the version of spark-csv with the version of Scala installed.

from_csv (new in version 3.x): parses a column containing a CSV string to a row with the specified schema.

Related questions: reading a file in Spark with newline (\n) in fields, escaped with backslash (\) and not quoted. Read in CSV in PySpark with correct datatypes.
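For the question above about keeping only the columns you know about, one possible approach is to read the header, intersect it with the wanted names, and select the result. This is a sketch, not the answer from the original thread. known_columns and read_known_columns are hypothetical names, and spark is assumed to be an existing SparkSession.

```python
def known_columns(available, wanted):
    """Return the wanted column names that actually exist, in the wanted order."""
    present = set(available)
    return [name for name in wanted if name in present]


def read_known_columns(spark, path, wanted):
    """Read a CSV with a header row and keep only the recognised columns."""
    df = spark.read.csv(path, header=True)
    # df.columns lists the names taken from the header line.
    return df.select(*known_columns(df.columns, wanted))
```

Columns in the file that are not in wanted are dropped, and wanted names missing from the file are ignored rather than raising an error.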

I suggest you use the function '.load' rather than '.csv', with a format argument beginning format='com. ...'. Please deploy the application as per the ...

How to read Avro files in Pyspark?

Skipping multiple lines with the csv method. PySpark is a powerful tool for large-scale data processing that provides flexible data processing and analysis capabilities.

January 22, 2023 by Todd M.

If I add broken to the schema and remove header validation, the command works with a warning.

Related questions: reading a CSV file written by DataFrameWriter in PySpark. How to make the first row the header when reading a text file through the Spark context.

How to Read CSV File into PySpark DataFrame (3 Examples)

PySpark: how to use read.

python

Working With CSV Files In PySpark

The data source API is used in PySpark by creating a DataFrameReader or DataFrameWriter object and using it to read or write data from or to a specific data source. To read a CSV file you must first create a DataFrameReader and set a number of options. See the options and examples for specifying the header, along with the parameters and source code. The SparkSession, built with appName(Read and Write Data Using PySpark), is the entry point of a Spark application. Next, we can use the SparkSession's read attribute.

In this comprehensive guide, we will explore how to read CSV files into dataframes using Python's Pandas library, PySpark, R, and the PyGWalker GUI. Topics include Basic CSV Reading, Read CSV With Header as Column Names, and Method 2: Read CSV File with Header. In pyspark.pandas read_csv, header (int, default 'infer') sets which row to use as the column names and where the data starts.

How to properly import CSV files with PySpark: I want to read a CSV file, but I am not interested in all the columns and I don't even know what columns are there. However, my columns only include integers and a timestamp type. With columnNameOfCorruptRecord='broken', this command does not store the corrupted records.

Related question: how to read a multiline CSV file in PySpark (answered for a different question but repeated here).

PySpark Read CSV File With Examples

sep: str, default ','. Learn how to read a CSV file and create a DataFrame in PySpark using the spark.read.csv method. This works with Spark's Python interactive shell. In notebooks, findspark's init() is called before importing from pyspark. Here we are going to read a single CSV file into a dataframe using spark.read.csv.

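Skipping multiple lines with read.csv. In this article, we introduce how to use read.csv in PySpark. See also the DataFrameReader.csv page of the PySpark master documentation.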

PySpark: reading CSV files with the correct data types

See answers from experts and users with code examples and explanations. from_csv returns null in the case of an unparseable string. With the multiline option set on the csv read, Spark will read the whole file and handle multiline CSV.

dataframe

Let's start with the most basic form, which ends in .load(filePath). First import SparkSession from pyspark.sql and build the session with spark = SparkSession. ... spark.read is used to read data from various data sources such as CSV, JSON, Parquet, Avro, ORC, JDBC, and many more.

Loads data from a data source and returns it as a DataFrame.
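The generic load() route can be sketched like this: name the format, set options, then load the path. The function name load_csv is hypothetical, spark is assumed to be an existing SparkSession, and the header option is only an example of what can be chained.

```python
def load_csv(spark, file_path):
    """Read a CSV file through the generic format(...).load(...) API."""
    return (
        spark.read.format("csv")   # pick the built-in CSV data source
        .option("header", "true")  # first line holds the column names
        .load(file_path)           # returns a DataFrame
    )
```

This is equivalent in effect to calling spark.read.csv(file_path, header=True). The load() form is convenient when the format name comes from configuration.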

schema: a column, or Python string literal with schema in DDL format, to use when parsing the CSV column. This code loads baby name data into DataFrame df_csv from the CSV file and then displays the contents of the DataFrame. Use the read.csv() method to read the CSV file.