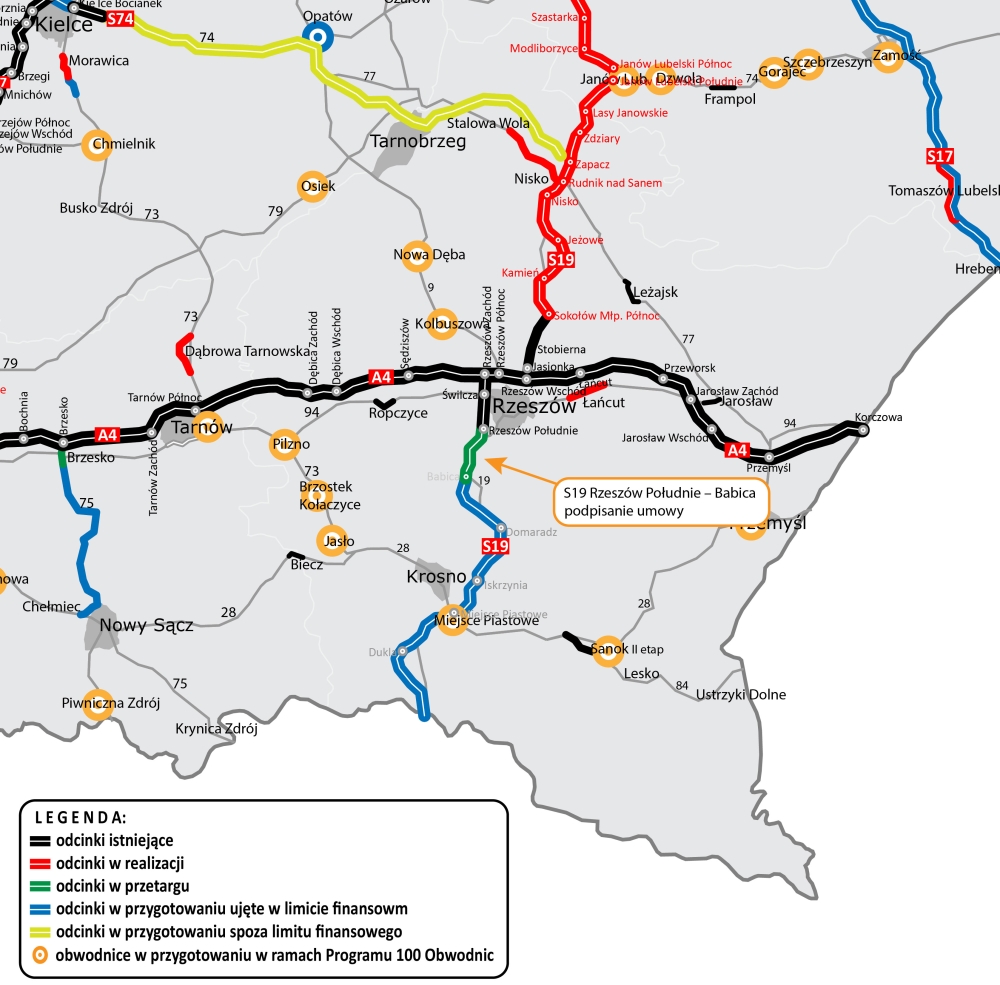

Residual skip connection definition

In a Residual Network (ResNet), we use a technique called skip connections, also known as shortcut connections.

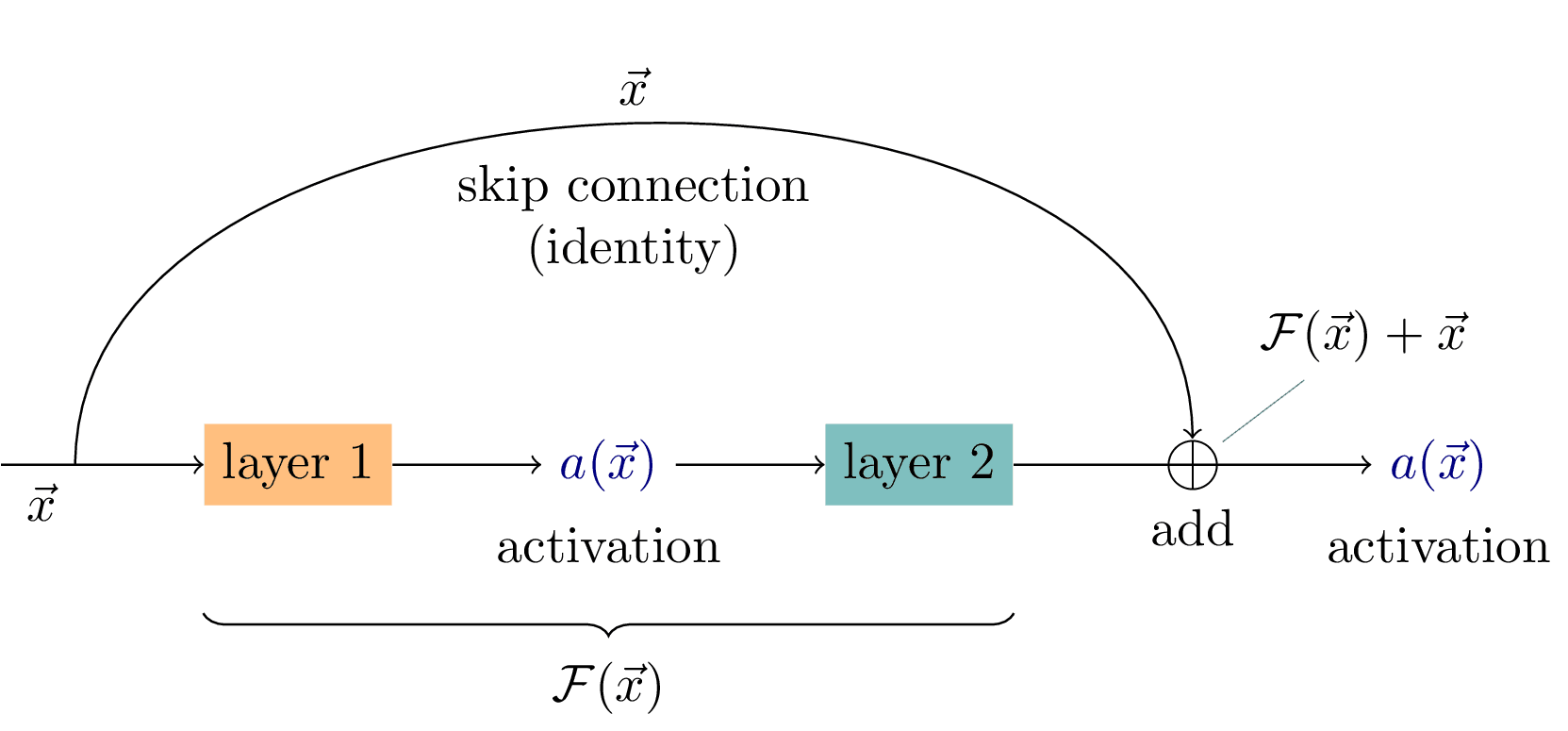

We can describe the input-output behaviour of a network with a nonlinear transformation function: the input is x, the output is F(x), and F usually includes convolution, activation, and similar operations.

This is the main idea behind Residual Networks (ResNets): they stack these skip residual blocks together. You are essentially giving the network an easy way of not using the convoluted, complex part of the computation when it does not need it. The skip is implemented by summing the input x with the result of a typical feed-forward computation in the following way (biases omitted):

$$ \mathcal F(x) + x = \left[ W_2 \sigma( W_1 x ) \right] + x $$

A residual block.
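The sum F(x) + x described above can be sketched in a few lines of plain Python. This is only an illustration: the weight values, the choice of ReLU as the nonlinearity, and the helper names `matvec`, `residual_block`, and `resnet_forward` are assumptions made for the example, not taken from any particular ResNet implementation.

```python
def relu(v):
    """Element-wise ReLU, standing in for the nonlinearity sigma."""
    return [max(0.0, a) for a in v]

def matvec(W, v):
    """Multiply matrix W (a list of rows) by vector v."""
    return [sum(w * a for w, a in zip(row, v)) for row in W]

def residual_block(x, W1, W2):
    """Return F(x) + x with F(x) = W2 * relu(W1 * x); biases are omitted."""
    fx = matvec(W2, relu(matvec(W1, x)))
    return [f + a for f, a in zip(fx, x)]

def resnet_forward(x, blocks):
    """Stack residual blocks: each block receives the previous block's output."""
    for W1, W2 in blocks:
        x = residual_block(x, W1, W2)
    return x

# Hypothetical 2x2 weights, chosen only for illustration.
W1 = [[1.0, -1.0], [0.5, 0.5]]
W2 = [[0.1, 0.0], [0.0, 0.2]]
x = [2.0, 1.0]

y = residual_block(x, W1, W2)          # one block
z = resnet_forward(x, [(W1, W2)] * 3)  # three stacked blocks
print(y, z)
```

Because the input is added back element-wise, F(x) must have the same shape as x; real networks typically insert a projection on the skip path when the shapes differ.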

An Introduction to Residual Skip Connections and ResNets

Anyone who works in deep learning has probably heard of skip connections, also called residual connections. So what is a skip connection?

Deep neural networks (DNNs) have become an indispensable tool for numerous learning tasks.

Source: Orhan, Emin and Xaq Pitkow (2018).

Residual Blocks in Deep Learning

The core idea is to backpropagate through the identity function by just using vector addition. We use an identity function to preserve the gradient.
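A quick numerical check can make the gradient argument above concrete. The sketch below assumes a hypothetical scalar layer F(x) = tanh(w·x) with a deliberately tiny weight; it estimates the derivative with and without the added identity path and shows that the skip contributes exactly one to the gradient.

```python
import math

def f(x, w=0.01):
    """A hypothetical layer F(x) = tanh(w * x) with a tiny weight w."""
    return math.tanh(w * x)

def numerical_grad(fn, x, eps=1e-6):
    """Central finite-difference estimate of d fn / d x."""
    return (fn(x + eps) - fn(x - eps)) / (2 * eps)

x0 = 0.5
grad_plain = numerical_grad(f, x0)                      # dF/dx only, close to w
grad_residual = numerical_grad(lambda x: f(x) + x, x0)  # dF/dx + 1

print(grad_plain, grad_residual)
```

Even when the layer itself passes almost no gradient, the identity term keeps the total derivative close to one.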

Residual connection (DataFranca)

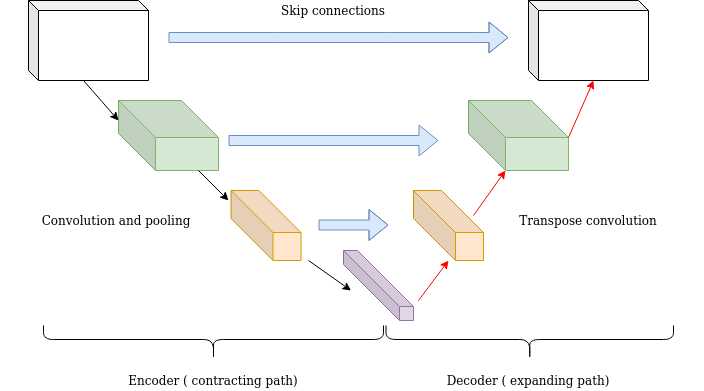

What are residual skip connections? In a nutshell, skip connections are connections in deep neural networks that feed the output of a particular layer to later layers. Skip connection blocks are building blocks for neural networks that feature skip connections. This is a residual block, or skip connection.

Formally, denoting the desired underlying mapping as H(x), we let the stacked nonlinear layers fit another mapping of F(x) := H(x) − x. The original mapping is recast into F(x) + x.

By adding the residual block at some points through the network, the authors of the ResNet architecture managed to train CNNs that consisted of a large number of convolutional layers.

In U-Net, the skip connections used between encoder and decoder require the concatenation to be at the same level. However, this concatenation, despite being at the same level, is not semantically similar [13, 15]. Therefore, several variants of U-Net have been proposed, with some attempting to change the backbone [16, 17].

In this paper, we theoretically and experimentally investigate the role of skip connections for training very deep DNNs.

Despite their huge success in building deeper and more powerful DNNs, we identify a surprising security weakness of skip connections in this paper.

Title: ResFPN: Residual Skip Connections in Multi-Resolution Feature Pyramid Networks for Accurate Dense Pixel Matching.

Residual learning: a building block. The residual is the difference between the predicted and target values.
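The two styles of skip connection that appear in this text, addition in ResNet and concatenation in U-Net, differ in what they do to the feature dimension. The sketch below uses plain Python lists as stand-ins for the channel vector at a single spatial position; the function names `add_skip` and `concat_skip` are hypothetical.

```python
def add_skip(x, fx):
    """ResNet-style skip: element-wise addition, so the sizes must match."""
    if len(x) != len(fx):
        raise ValueError("addition requires equal lengths")
    return [a + b for a, b in zip(x, fx)]

def concat_skip(encoder_features, decoder_features):
    """U-Net-style skip: concatenate along the feature (channel) axis."""
    return list(encoder_features) + list(decoder_features)

added = add_skip([1.0, 2.0], [0.5, 0.5])           # size stays 2
joined = concat_skip([1.0, 2.0], [3.0, 4.0, 5.0])  # size becomes 2 + 3 = 5
print(added, joined)
```

Addition keeps the feature size fixed and needs no extra parameters, while concatenation grows the feature size and leaves it to later layers to combine the two sources.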

Building a Residual Network with PyTorch

A novel residual dual U-shape network (RD-UNet) is proposed for cloud detection.

[Model interpretation] Residual connections in ResNet: are you sure you really understand them?

A similar approach to ResNets is known as “highway networks”.

Rethinking Residual Connection with Layer Normalization

What's the use of residual connections in neural networks?

Source: ResNet paper. This definition introduces a new term, “residual block”. Specifically, we provide new interpretations of the role of skip connections in 1) simplifying model optimization and 2) improving model generalization.

Authors of ResFPN: Rishav, René Schuster, Ramy Battrawy, Oliver Wasenmüller, Didier Stricker.

Residual Connections Definition

They can also be thought of as an identity block.

One view is that residual connections allow for the training of deeper networks, but it is not clear that added layers are always useful, or even how they are used. In the images below, we can see a comparison between four convolutional networks.

This by-pass connection is known as the shortcut or the skip-connection.

These skip connections 'skip' some layers, allowing gradients to flow better through the network. The words residual and skip are used interchangeably in many places. One takeaway from the residual block: adding new layers should not hurt the model's performance, as regularisation will skip over them if those layers are not useful.

The primary innovation of RD-UNet lies in that it cascades two U-shaped networks and leverages a residual-like connection to enhance information flow between the two.

For adding skip connections in Keras, the easy answer is: don't use a sequential model for this; use the functional API instead, where skip connections (also called residual connections) can be implemented.

Skip connections are extra connections between nodes in different layers of a neural network that skip one or more layers of nonlinear processing. A residual connection is the component placed in every residual block of ResNet [He et al., 2016b], a type of CNN, consisting of a skip connection followed by the addition of the outputs of the two paths.

Residual connections are often motivated by the fact that very deep neural networks tend to forget some features of their input data-set samples during training.

A residual block is a stack of layers set in such a way that the output of a layer is taken and added to another layer deeper in the block.

Residual Connection Explained

The skip connection connects activations of a layer to further layers by skipping some layers in between.

Residual block: ResNet is built by repeating residual blocks like the one shown in the figure below. A residual block combines convolution layers with a skip connection. It consists of two branches whose outputs are added element-wise.

Skip connections allow layers to skip layers and connect to layers further up the network, allowing information to flow more easily up the network. ResNet essentially relies on skip connections of identity mappings that connect every residual block (containing 2 or 3 weight layers) to the previous one. Prior to residual connections, bypassing paths with gating units were introduced to effectively train highway networks with over 100 layers (Srivastava et al.).

Of the four convolutional networks compared, the first one is a CNN with 18 layers.

Skip connections make this special function (f(x) = x) extremely easy to learn, which improves network learning stability and overall performance in a wide range of applications, at pretty much no extra computational cost.
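The claim that the identity function f(x) = x becomes easy to learn can be illustrated with a toy linear layer. In the sketch below (all names and values are hypothetical), a plain layer has to learn an exact identity matrix to pass its input through unchanged, whereas a residual layer does so with all-zero weights.

```python
def linear(W, v):
    """Multiply matrix W (a list of rows) by vector v."""
    return [sum(w * a for w, a in zip(row, v)) for row in W]

def plain_layer(x, W):
    """Output is W * x: identity requires W to be the identity matrix."""
    return linear(W, x)

def residual_layer(x, W):
    """Output is W * x + x: identity only requires W to be zero."""
    return [f + a for f, a in zip(linear(W, x), x)]

zeros = [[0.0, 0.0], [0.0, 0.0]]
identity = [[1.0, 0.0], [0.0, 1.0]]
x = [3.0, -2.0]

print(plain_layer(x, zeros))     # the input is lost
print(plain_layer(x, identity))  # the input is preserved, but W must be exact
print(residual_layer(x, zeros))  # the input is preserved with zero weights
```

Pushing weights towards zero is what common regularisers already do, which is one informal way to read the statement that unneeded layers can be skipped.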

What is Residual Connection?

Cross-modal Deep Face Normals with Deactivable Skip Connections.

Figure caption (partial): recurrent residual convolution block and simple skip connections; (c) U-Net++ with simple convolution blocks and dense skip connections.

This work investigates how the scale factors into the effectiveness of the skip connection and reveals that a trivial adjustment of the scale will lead to spurious gradient exploding or vanishing.
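To see why the scale of the skip path matters, consider a deliberately simplified scalar model, assumed here purely for illustration and not taken from the cited work: each block computes y = scale · x + F(x), and F has the same constant derivative everywhere. The gradient through a stack of such blocks is then a simple product.

```python
def skip_gradient(scale, layer_grad, depth):
    """Gradient of the output w.r.t. the input through `depth` scalar blocks
    of the form y = scale * x + F(x), where dF/dx = layer_grad everywhere."""
    g = 1.0
    for _ in range(depth):
        g *= scale + layer_grad  # derivative of one block
    return g

depth = 50
for scale in (0.9, 1.0, 1.1):
    # With layer_grad = 0 the result is simply scale ** depth.
    print(scale, skip_gradient(scale, layer_grad=0.0, depth=depth))
```

In this toy setting, a scale slightly below one makes the gradient shrink with depth, a scale slightly above one makes it grow, and only a scale of exactly one leaves it unchanged.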

Residual neural network

The gradient would then simply be multiplied by one, and its value will be maintained in the earlier layers. A residual block is trying to learn the residual function. In short, a residual connection is roughly the same as a skip connection.

The figure above, taken from ResNet [1], is a schematic of the skip block. With the skip connection, the output changes from h(x) = f(wx + b) to h(x) = f(wx + b) + x. In ResNet, skip connections are implemented via addition. This is called a shortcut or skip connection; it doesn't involve any additional parameters, as we are just passing the previous information on to a later layer.
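The effect on earlier layers can be sketched by multiplying the per-layer derivatives through a deep stack, once without and once with the skip path. The scalar tanh layers and the small weight value are assumptions made for the example.

```python
import math

def chain_gradient(x, weights, use_skip):
    """Return d(output)/d(input) for a stack of scalar layers.

    Plain layer:    h_next = tanh(w * h)
    Residual layer: h_next = tanh(w * h) + h
    """
    h, grad = x, 1.0
    for w in weights:
        a = math.tanh(w * h)
        local = w * (1.0 - a * a)  # d tanh(w * h) / dh
        if use_skip:
            local += 1.0           # the identity path contributes exactly 1
            h = a + h
        else:
            h = a
        grad *= local              # chain rule across layers
    return grad

weights = [0.1] * 30  # hypothetical small weights, 30 layers deep
g_plain = chain_gradient(1.0, weights, use_skip=False)
g_skip = chain_gradient(1.0, weights, use_skip=True)
print(g_plain, g_skip)
```

Without the skip path, the product of thirty small factors is vanishingly small; with it, every factor is at least one, so the gradient reaching the first layer does not vanish.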

Why are residual connections needed in transformer architectures?

Residual Blocks are skip-connection blocks that learn residual functions with reference to the layer inputs, instead of learning unreferenced functions.

A residual connection is a learnable mapping that runs in parallel with a skip connection to form a residual block.

Highway networks also implement a skip connection; however, similar to an LSTM, these skip connections are passed through gates.
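A gated skip connection of this kind can be sketched as follows. The text here does not give a formula, so the sketch assumes the commonly cited highway formulation y = T(x) · H(x) + (1 − T(x)) · x with a sigmoid transform gate T; the scalar setting and all parameter names are hypothetical.

```python
import math

def sigmoid(z):
    """Logistic function, mapping any real number into (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

def highway_layer(x, w_h, b_h, w_t, b_t):
    """Scalar highway layer: a learned gate mixes the transform and the input."""
    h = math.tanh(w_h * x + b_h)  # candidate transform H(x)
    t = sigmoid(w_t * x + b_t)    # transform gate T(x) in (0, 1)
    return t * h + (1.0 - t) * x  # carry gate is 1 - T(x)

x = 0.7
carried = highway_layer(x, 1.0, 0.0, 0.0, -20.0)     # gate almost closed
transformed = highway_layer(x, 1.0, 0.0, 0.0, 20.0)  # gate almost open
print(carried, transformed)
```

With a strongly negative gate bias the layer behaves almost like a pure skip connection, and with a strongly positive one it behaves almost like an ordinary layer. A residual block, by contrast, always adds the input with no gate and no gate parameters.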

Residual Networks

Cloud detection in remote sensing images is a challenging task that plays a crucial role in various applications.

ResNets — Residual Blocks & Deep Residual Learning
