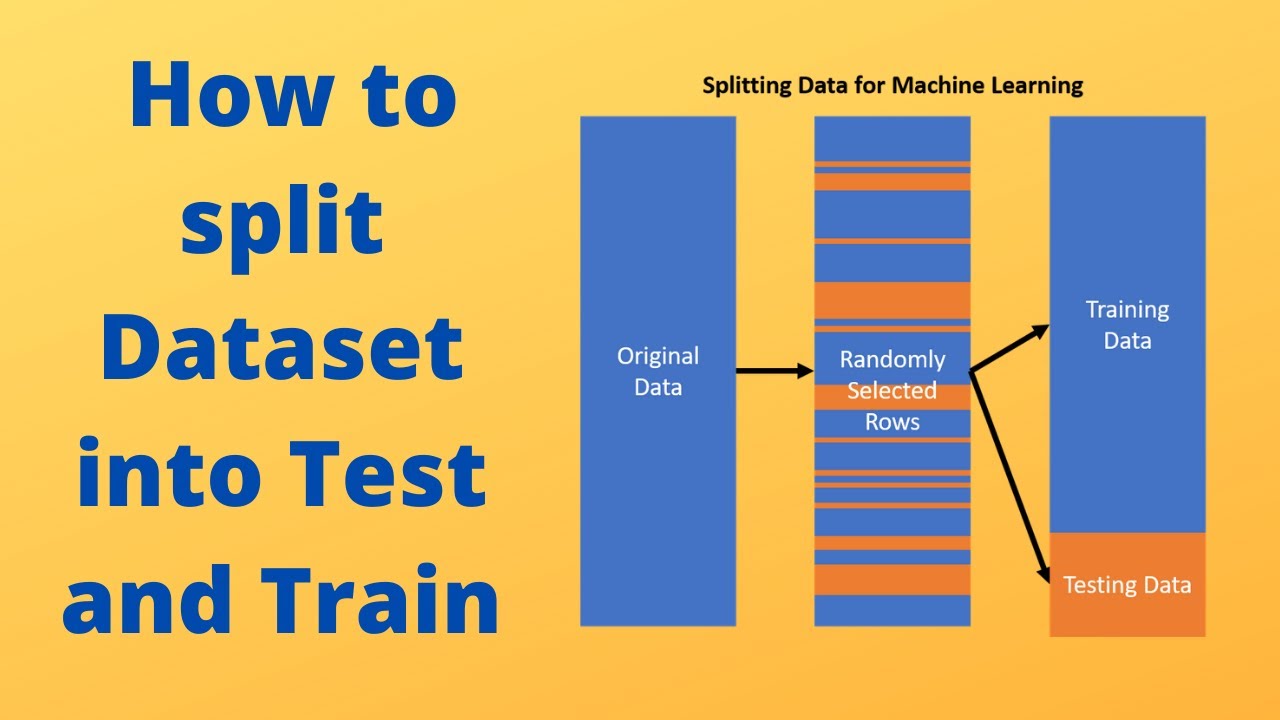

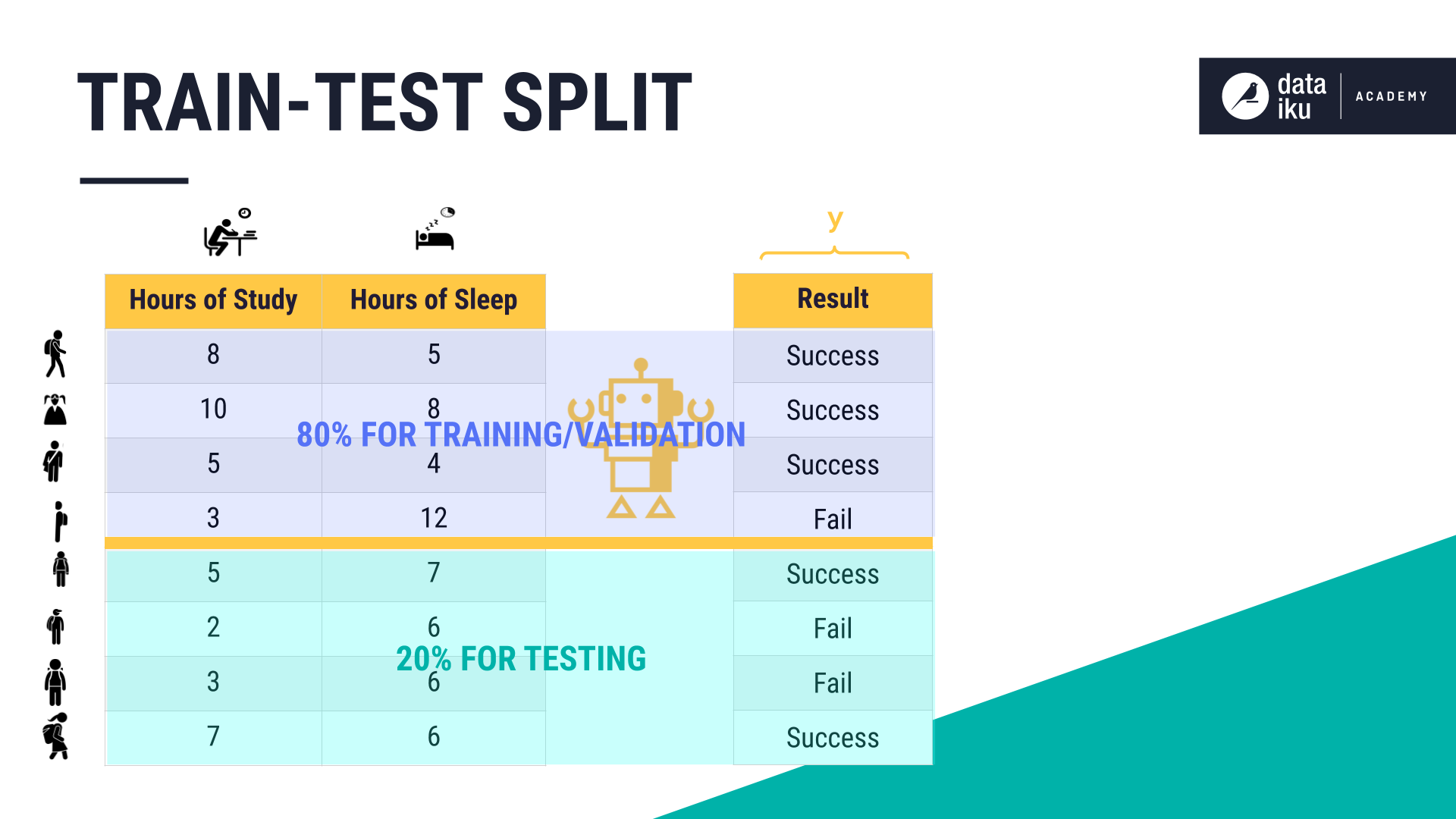

Train test split example

Now that we have properly divided our data set, it is time to build and train our linear regression model.

`train_test_split` is imported with `from sklearn.model_selection import train_test_split`. A typical call loads the data with `X, y = load_iris(return_X_y=True)` and unpacks the result into `X_train, X_test, y_train, y_test`. The function is a quick utility that wraps input validation, `next(ShuffleSplit().split(X, y))`, and application to the input data into a single call for splitting (and optionally subsampling) data.

Its first parameter, `*arrays`, is a sequence of indexables. If `test_size` is a float, it should be between 0.0 and 1.0. When `X` and `y` are passed, the function returns a Python list of length 4 whose items are x_train, x_test, y_train, and y_test, respectively. The training data is used to fit the machine learning model.

Scikit-Learn's train

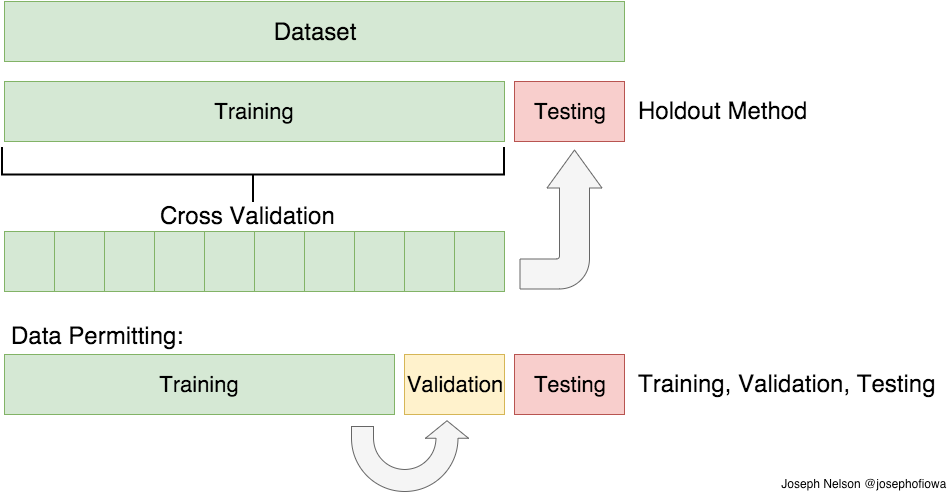

In this tutorial, you'll learn why you need to split your dataset in supervised machine learning, and what to do when a decision is based on cross validation.

Train Test Split: What it Means and How to Use It

A Guide on Splitting Datasets With Train

In Python, `train_test_split` is a function in the `model_selection` module of the popular machine learning library scikit-learn. It splits arrays or matrices into random train and test subsets. Its signature is:

train_test_split(*arrays, test_size=None, train_size=None, random_state=None, shuffle=True, stratify=None)

The `test_size` parameter is a float or int, default=None. For instance, `train_test_split(test_size=0.2)` sets aside 20% of the data for testing and 80% for training.

`train_test_split` doesn't just take x and y; it accepts any number of arrays. In supervised learning it therefore returns four outputs: X_train, X_test, y_train, and y_test. You train the model using the training set.

A simple train-test split is a random split that totally disregards the distribution or proportions of the classes. You can fix `random_state` to any integer to make the split reproducible. In one DataFrame example that uses `random_state=100`, printing `df_train.shape` and `df_test.shape` gives (8000, 14) and (2000, 14).

A pandas DataFrame can also be split into three subsets (train, val, and test) following fractional ratios provided by the user. Knowing when and how to split off subsets of your data helps reduce the bias of your model.

In R's rsample package, `initial_split` creates a single binary split of the data into a training set and testing set.
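The three-way split mentioned above can be sketched by calling `train_test_split` twice. This is only an illustration: the helper name `split_train_val_test`, the parameter names `frac_val` and `frac_test`, and the toy DataFrame are assumptions, not code taken from the original sources.

```python
import numpy as np
import pandas as pd
from sklearn.model_selection import train_test_split


def split_train_val_test(df, frac_val=0.15, frac_test=0.15, random_state=None):
    """Hypothetical helper: split a DataFrame into train, val and test parts."""
    if frac_val + frac_test >= 1.0:
        raise ValueError("frac_val + frac_test must be smaller than 1.0")
    # First carve the test set off the full DataFrame.
    df_rest, df_test = train_test_split(
        df, test_size=frac_test, random_state=random_state
    )
    # Then take the validation set from the remainder. The fraction is
    # rescaled because df_rest is smaller than the original DataFrame.
    relative_val = frac_val / (1.0 - frac_test)
    df_train, df_val = train_test_split(
        df_rest, test_size=relative_val, random_state=random_state
    )
    return df_train, df_val, df_test


# Toy data, invented for the example.
df = pd.DataFrame({"x": np.arange(100), "y": np.arange(100) % 2})
df_train, df_val, df_test = split_train_val_test(df, random_state=100)
print(len(df_train), len(df_val), len(df_test))
```

Because scikit-learn rounds fractional sizes to whole rows, the validation set may differ from the requested fraction by a row.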

If a class has only one record, you can do one of the following: create one or more copies of that record; remove it from the main data; or keep it in a variable, remove it from the data, run `train_test_split`, and then append it to the training data.
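The last option (set the record aside, split, then append it to the training data) might look like the sketch below. The toy arrays and the variable name `rare` are invented for illustration.

```python
import numpy as np
from sklearn.model_selection import train_test_split

# Toy data: classes 0 and 1 have ten records each, class 2 has only one.
X = np.arange(21).reshape(-1, 1)
y = np.array([0] * 10 + [1] * 10 + [2])

# Mark the rows whose class occurs fewer than two times.
classes, counts = np.unique(y, return_counts=True)
rare = np.isin(y, classes[counts < 2])

# A stratified split works on the remaining rows.
X_train, X_test, y_train, y_test = train_test_split(
    X[~rare], y[~rare], test_size=0.2, stratify=y[~rare], random_state=0
)

# Append the set-aside rows to the training data only.
X_train = np.concatenate([X_train, X[rare]])
y_train = np.concatenate([y_train, y[rare]])

print(np.unique(y_train, return_counts=True))
print(np.unique(y_test, return_counts=True))
```

The model can then still see the rare class during training, although it cannot be evaluated on it.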

Python Machine Learning Train/Test

If `test_size` is None and `train_size` is also None, `test_size` will be set to 0.25. When a single array is passed, the `train_test_split` function returns two objects: the training set and the test set.

In PySpark's `randomSplit`, the `weights` argument specifies the relative size of each split, and `seed=100` fixes the random seed.

In R, `initial_time_split` does the same as `initial_split`, but takes the first prop samples for training, instead of a random selection. Scikit-learn's `TimeSeriesSplit` provides train/test indices to split time series data samples that are observed at fixed time intervals, in train/test sets.

Split Your Dataset With scikit-learn's train

Train-test split is a technique used to evaluate the performance of a machine learning model. The scikit-learn library provides many tools to split data into training and test sets. The most basic one is `train_test_split`, imported from `sklearn.model_selection`, which just divides the data into two parts according to the specified partitioning ratio. If `test_size` is an int, it represents the absolute number of test samples. The split is random by default; however, you can also specify a random state for the operation. Before splitting, arrange the data in a format the function accepts.

The `stratify` option tells sklearn to split the dataset into test and training sets in such a fashion that the ratio of class labels in the variable specified (y in this case) is constant. If a stratified split raises an error, this is because one of your classes has only one record.

`GroupShuffleSplit` provides randomized train/test indices to split data according to a third-party provided group. This group information can be used to encode arbitrary domain-specific stratifications of the samples as integers.

Related topics are the metric used to evaluate a model and how to compare models, the significance of the training-validation-test split and the trade-off between different split ratios, and which subsets of the dataset you need.

Typical questions from users include: "I have a pandas dataframe that I would like to split into a training and test set", "I am using sklearn for multi-classification task", and, about an index-based pandas split, "I'm a little confused what guarantees this code returns a unique test and train df? It seems to work, don't get me wrong."

One study aimed to examine how train-test split variation impacts the measured AUC in several validation techniques, among them split-sample validation (70/30 and 50/50 train-test splits) and bootstrap methods.

How To Do Train Test Split Using Sklearn In Python

Often when we fit machine learning algorithms to datasets, we first split the dataset into a training set and a test set. The `train_test_split` function returns twice as many outputs as it has inputs, in the form of arrays.

Typical questions include "I need to split alldata into train_set and test_set" and, about a pandas split that drops the training indices from the full DataFrame, "Just having trouble understanding how subtracting the indices leads to unique observations."

On stratification, the short answer is that stratifying preserves the proportion of how data is distributed in the target column and reproduces that same proportion in both parts of the split.

How to split data on balanced training set and test set on sklearn

Train/test split is one of the methods that can be used to evaluate the performance of a machine learning model.


It returns a list of NumPy arrays, other sequences, or SciPy sparse matrices if appropriate. If `test_size` is None, the value is set to the complement of the train size.

How to Apply train

To do this, we use the `train_test_split()` function of Scikit-Learn with the following arguments: X = dataset[height]

Train Test Split in Python (Scikit-learn Examples)

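A basic example of the syntax would look like this: `train_test_split(X, y, train_size=…)`, where `train_size` is the fraction of the data kept for training. Allowed inputs are lists, NumPy arrays, SciPy sparse matrices, and pandas DataFrames. The documentation is pretty clear, but let's go over a simple example anyway, in which the result is unpacked into X_train, X_test, y_train, y_test.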
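A minimal sketch of such a call is shown here. The iris dataset, the 20% test fraction, and `random_state=0` are choices made for this illustration only.

```python
from sklearn.datasets import load_iris
from sklearn.model_selection import train_test_split

# Load the 150-sample iris data as a feature matrix X and a label vector y.
X, y = load_iris(return_X_y=True)

# Hold out 20% of the rows for testing; random_state makes the shuffle repeatable.
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.2, random_state=0
)

print(X_train.shape, X_test.shape)  # (120, 4) (30, 4)
```

The four outputs come back in the order train/test for the first array, then train/test for the second.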

This guide covers how to use the `train_test_split()` function in Scikit-Learn to split your dataset, including working with its helpful parameters. The function returns a list, and we then use list unpacking to assign the proper values to the correct variable names.

For grouped data, scikit-learn offers `GroupShuffleSplit(n_splits=5, *, test_size=None, train_size=None, random_state=None)`. In R, `group_initial_split` creates splits of the data based on some grouping variable, so that all data in a group is assigned to the same split.

It has to be noticed that the more categorical variables you stratify on, the more probable it is that a combination of categories has only a single record.
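A group-aware split with `GroupShuffleSplit` could be sketched as follows. The arrays and group labels are made up, and `n_splits=1` is chosen only to obtain a single train/test pair.

```python
import numpy as np
from sklearn.model_selection import GroupShuffleSplit

# Toy data: eight samples that belong to four groups of two.
X = np.arange(16).reshape(8, 2)
y = np.array([0, 1, 0, 1, 0, 1, 0, 1])
groups = np.array([1, 1, 2, 2, 3, 3, 4, 4])

# One shuffled split that holds out 25% of the groups (one group here).
gss = GroupShuffleSplit(n_splits=1, test_size=0.25, random_state=0)
train_idx, test_idx = next(gss.split(X, y, groups=groups))

print("train groups:", np.unique(groups[train_idx]))
print("test groups:", np.unique(groups[test_idx]))
```

Note that `test_size` here refers to a share of the groups, not of the individual samples.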

A typical question reads: "I'm a relatively new user to sklearn and have run into some unexpected behavior in train_test_split", followed by a call of the form `train_test_split(Data, Target, test_size=…)`.

`arrays` is the sequence of lists, NumPy arrays, pandas DataFrames, or similar array-like objects that hold the data that you want to split. In the sample-weight example, there's an array of random weights (one weight per observation) that gets split into training and test arrays, sw_train and sw_test. Besides, we want to shuffle our dataset before applying the split.

When a class has only one record, a stratified `train_test_split` is unable to decide whether to put that record in the train or the test part.
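Splitting a weight array together with the features and labels can be sketched like this. The random data is invented; the names `sw_train` and `sw_test` follow the text above.

```python
import numpy as np
from sklearn.model_selection import train_test_split

rng = np.random.default_rng(0)
X = rng.normal(size=(10, 3))     # ten observations, three features
y = np.array([0, 1] * 5)         # one label per observation
sample_weight = rng.random(10)   # one random weight per observation

# Every array passed in is split along its first dimension with the same
# shuffled indices, so rows, labels and weights stay aligned.
X_train, X_test, y_train, y_test, sw_train, sw_test = train_test_split(
    X, y, sample_weight, test_size=0.3, random_state=0
)

print(X_train.shape, sw_train.shape)
print(X_test.shape, sw_test.shape)
```

Three input arrays produce six outputs, which matches the rule that the function returns twice as many outputs as inputs.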

Train-Test Split for Evaluating Machine Learning Algorithms

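You test the model using the testing set. Older code imports the function with `from sklearn.cross_validation import train_test_split` and then calls `train, test = train_test_split(df, test_size=…)`. The `cross_validation` module was removed in scikit-learn 0.20, so current code imports from `sklearn.model_selection` instead.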

Train-test split involves splitting the data into two sets: a training set and a test set. The function used to perform it is `sklearn.model_selection.train_test_split(*arrays, test_size=None, train_size=None, random_state=None, shuffle=True, stratify=None)`, which splits arrays or matrices into random train and test subsets. By default, Sklearn's `train_test_split` will make random partitions for the two subsets.

`TimeSeriesSplit` is a time series cross-validator. In each split, test indices must be higher than before, and thus shuffling in the cross-validator is inappropriate. It is one way to use cross validation to evaluate a model on ordered data.
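The ordering guarantee of `TimeSeriesSplit` can be seen in a small sketch. The twelve-sample array and `n_splits=3` are arbitrary choices for the illustration.

```python
import numpy as np
from sklearn.model_selection import TimeSeriesSplit

# Twelve observations, assumed to be ordered in time.
X = np.arange(12).reshape(-1, 1)

tscv = TimeSeriesSplit(n_splits=3)
splits = list(tscv.split(X))

for train_idx, test_idx in splits:
    # Each test block lies strictly after its training block.
    print("train:", train_idx, "test:", test_idx)
```

Each successive split extends the training window and moves the test window later in time, so no future observation leaks into training.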

The time series cross-validator has the signature `TimeSeriesSplit(n_splits=5, *, max_train_size=None, test_size=None, gap=0)`.

Sklearn's `train_test_split` has several parameters. If `test_size` is a float, it should be between 0.0 and 1.0 and represent the proportion of the dataset to include in the test split. The function can take an arbitrary sequence of arrays that have the same first dimension and split them randomly, but consistently, into two sets along that dimension. A call on a single DataFrame looks like `train, test = train_test_split(Meta, test_size=…)`. Make sure your data is arranged into a format acceptable for train test split. A common choice is 80% for training, and 20% for testing.

A stratified split divides the data from vector Y into two sets in a predefined ratio while preserving the relative ratios of the different labels in Y. For example, a binary target may be 80% yes and 20% no, so there are 4 times as many yes labels as no labels. To test if the function is doing what you want, just calculate the percentages in the splits with `np.unique(y_train, return_counts=True)` and `np.unique(y_val, return_counts=True)`.

It is called Train/Test because you split the data set into two sets: a training set and a testing set.

How to train

Train/Test is a method to measure the accuracy of your model. This evaluation method divides the dataset into two parts, one used for training data and one for testing data, in a given proportion. The split ratio determines the proportion of data that is allocated to each set: `test_size=0.2` will set aside 20% of the data for testing and 80% for training. In scikit-learn, `train_test_split` is used to split the data used during classification into train and test subsets.

Other libraries offer equivalents. In PyTorch, `train_set, test_set = torch.utils.data.random_split(dataset, [...])` takes a list of subset lengths, such as `int(0.2 * len(dataset))` for the test part. The easiest way to split a dataset into a training and test set in PySpark is to use the `randomSplit` function as follows: `train_df, test_df = df.randomSplit(weights=[…], seed=100)`. In scikit-learn, `GroupShuffleSplit` is the Shuffle-Group(s)-Out cross-validation iterator. Older scikit-learn code calls the function as `cross_validation.train_test_split(...)`.

Frequently asked questions include: How do you split data into training and testing? How do you split data into train and test in Python?

Take, for example, a binary classification problem in which the target column has the proportion 80% = yes and 20% = no. You can check the class counts after splitting with `np.unique(y_train, return_counts=True)`.

After importing pandas as pd, numpy as np, and the split function from sklearn, you can check the validation labels with `np.unique(y_val, return_counts=True)`. Stratifying gives you the same proportions across the whole data: if your original label proportion is 1/5, then you will have 1/5 in train and 1/5 in test.
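A stratified split and the proportion check can be sketched together. The 80/20 toy labels are invented for the example, and the test set plays the role of the validation labels mentioned above.

```python
import numpy as np
from sklearn.model_selection import train_test_split

# Toy labels: 80 samples of class 1 ("yes") and 20 of class 0 ("no").
y = np.array([1] * 80 + [0] * 20)
X = np.arange(100).reshape(-1, 1)

# stratify=y keeps the 4:1 class ratio in both subsets.
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.2, stratify=y, random_state=0
)

# Count the labels in each subset to verify the proportions.
print(np.unique(y_train, return_counts=True))
print(np.unique(y_test, return_counts=True))
```

With these sizes, the training set holds 16 "no" and 64 "yes" labels and the test set holds 4 "no" and 16 "yes", so the minority class is 1/5 of each subset.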