Xgboost feature selection python

Data Interface.

Feature Importance With XGBoost in Python

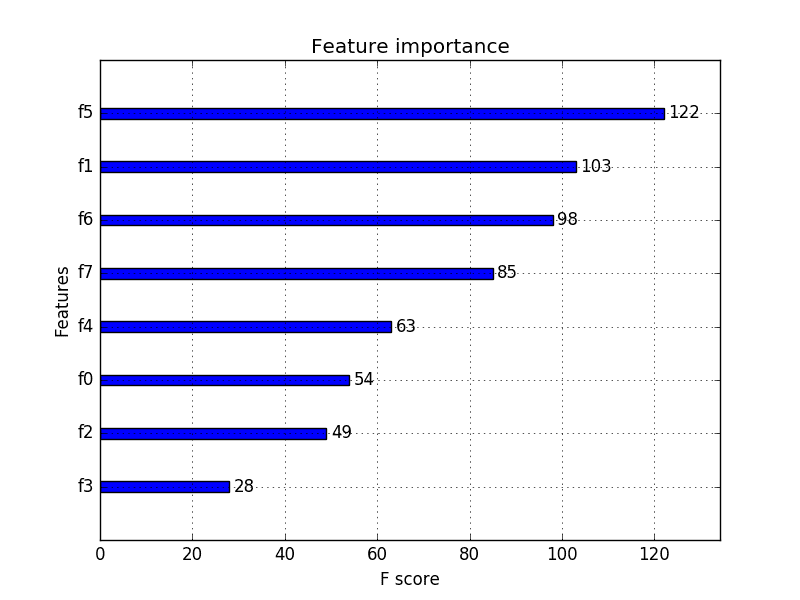

We have already covered the following in the previous sections: A) Types of Feature Selection Methods (Part 1) B) Correlation: Pearson, Point Bi-Serial, Cramer’s V (Part 1) C) Weight of Evidence and . Update: For a more recent tutorial on feature selection in .Feature Selection using XGBoost This article will give you a detailed explanation of how to do feature selection using XGBoost with a practical example. More is not always better when it comes to attributes or columns in your dataset.In this post, I will show you how to get feature importance from Xgboost model in Python. It allows restricting the selection to top_k features per group with the largest magnitude of univariate weight change, by setting the top_k parameter.How to manually plot feature importance in Python using XGBoost. The second part of a series on ML-based feature .get_score(importance_type='weight') answered Jul 25, 2017 at 18:15. Go to the end to download the full example code.

feature_selection module can be used for feature selection/dimensionality reduction on sample sets, either to improve .This operation is multithreaded and is a linear complexity approximation of the quadratic greedy selection.There is also an excellent list of sample source code in Python on the XGBoost Python Feature Walkthrough.For partition-based splits, the splits are specified .XGBClassifier(num_class = 3) Sets features selection SFSres = SFS(XGB, k_features=8,cv=5) . The use of machine learning methods on time series data requires feature engineering. First, the estimator is trained on the initial set of features and the importance of each feature is obtained. import numpy as np. featurewiz uses XGBoost repeatedly to perform feature selection. Demo for using feature weight to change column sampling.< in JSON at position 4. This helps to understand . In this post you will discover automatic feature selection techniques that you can use to prepare your machine learning data . 该函数称为 plot_importance() ,可以按如下方式使用:.Critiques : 210

python

By Jason Brownlee on September 16, 2020 in Time Series 107. max_rounds [default=100] - int (max_rounds > 0) The number of times the core BoostARoota algorithm will run.Feature Importance and Feature Selection With XGBoost in Python - MachineLearningMastery.The data features that you use to train your machine learning models have a huge influence on the performance you can achieve.

XGBoost with Python

Scales linearly.

![XGBoost - An In-Depth Guide [Python API]](https://storage.googleapis.com/coderzcolumn/static/tutorials/machine_learning/article_image/XGBoost - An In-Depth Guide [Python].jpg)

Apply L2 regularization to our XGBoost model. By Jason Brownlee on . Boruta is a random forest based method, so it works for tree models like Random Forest or XGBoost, but is also valid with other classification models like Logistic Regression or SVM. Deep-dive on ML techniques for feature selection in Python — Part 2 .Smaller values will run faster as it is running through XGBoost a smaller number of times.

Feature Selection with Boruta in Python

A detailed description of these parameters can be found here.

Using XGBoost For Feature Selection

def initilialize_poplulation(numberOfParents): learningRate = np. Feature Selection with Boruta in Python. Once we've trained an XGBoost model, it's often useful to understand which features were most important to the model. My current setup is Ubuntu 16.The feature selection process is fundamental in any machine learning project. Learn how the Boruta algorithm works for ., fn] and these names are shown in the output of plot_importance method as well. silent – Whether print messages during construction.XGBoost is one of the most popular and effective machine learning algorithm, especially for tabular data.The following shows the ways to use XGBoost for .Something went wrong and this page crashed! If the issue persists, it's likely a problem on our side. In such a case calling model. Demo for obtaining . Let's get started.Starting from version 1.feature_names is not useful because the returned names are in the form [f0, f1, . The “Boston house-prices” dataset is a built-in .iloc[:, 17] # spliting the dataset into train, test and validate for binary .

Feature selection in machine learning

Finally I have solved this issue by: model. selection_model = .XGBoost’s objective function.model_selection import train_test_split # Extract feature and target arrays X, y = diamonds.Extreme Gradient Boosting, or XGBoost for short, is an efficient open-source implementation of the gradient boosting algorithm. Feature importance - XGBoost의 기본 내장된 feature_importance를 이용하는 방법. # use feature importance for feature selection. Install XGBoost. from numpy import loadtxt. This is a collection of examples for using the XGBoost Python package.com/feature-importance-and-feature-selection-with . As such, XGBoost is an algorithm, an open-source project, and a Python library.XGBoost : Dimension Reduction (feature selection) 1. This is done using the .Critiques : 3

Feature Selection using XGBoost

# plot feature importance. In this post you will discover how to select attributes in your data before creating a machine learning model using the scikit-learn library.show() 例如,下面是一个完整的代码清单,使用内置的 plot_importance() 函数绘制 Pima Indians 数据集的 .

But there should be several ways how to .Not all data attributes are created equal. To visualize the importance, you can use a bar chart. The Boston house-prices dataset.But when you have a very large data set with hundreds if not thousands of variables, selecting the best features from your model can mean the difference between a bloated and highly complex model or a simple model with the fewest and most information-rich features.

Hyperparameter tuning in XGBoost using genetic algorithm

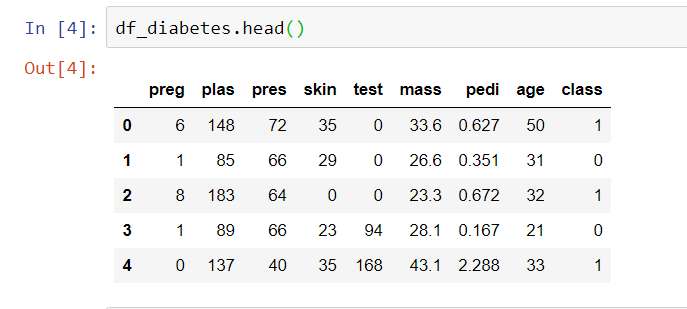

# split data into X and y.

With matpotlib library we can plot training results for each run (from XGBoost output). The training set will be used to prepare the XGBoost model and the test set will be used to make new predictions, from which we can evaluate the performance of the model.According to this post there 3 different ways to get feature importance from Xgboost: use built-in feature importance, use . feature_names (Sequence[] | None) – Set names for features. If you aren’t using Boruta for feature selection, you should try it out. In this post we’ll go through the Boruta algorithm, which allows us to create a ranking of our features, from the most.XGBoost 库提供了一个内置函数来绘制按其重要性排序的特征。.

Extreme Gradient Boosting (XGBoost) Ensemble in Python

Photo by William Felker on Unsplash. plot_importance(model) pyplot. It was initially developed by Tianqi Chen and was described by Chen and Carlos Guestrin in their 2016 paper titled “ XGBoost: A . shuffle: Similar to cyclic but with random feature shuffling prior to .Feature Selection for Time Series Forecasting with Python. from xgboost import XGBClassifier.AI-Driven Feature Selection in Python! Deep-dive on ML techniques for feature selection in Python — Part 2. Demo for using xgboost with sklearn.feature_selection import SequentialFeatureSelector as SFS xgboost classifier XGB = xgboost. It can be used on any classification model.

Learn XGBoost in Python: A Step-by-Step Tutorial

feature_types .SyntaxError: Unexpected token < in JSON at position 4. from matplotlib import pyplot.Extreme Gradient Boosting, or XGBoost for short is an efficient open-source implementation of the gradient boosting algorithm.drop('price', axis=1), diamonds[['price']] The dataset has three categorical . Then, the least important features are removed from the current set of features and the classification metric is checked again. Edit on GitHub.

This allows us to gain insights into the data, perform feature selection, and simplify models.base_margin (Any | None) – Base margin used for boosting from existing model.selection = SelectFromModel(model, threshold=thresh, prefit=True) select_X_train = selection.You are right that when you pass NumPy array to fit method of XGBoost, you loose the feature names. Here’s how you can do it: from xgboost import XGBClassifier. X = dataset[:,0:8] Y = dataset[:,8] Finally, we must split the X and Y data into a training and test dataset.使用 Python 和 XGBoost 的特征重要性和特征选择.Feature selection and ordering method. These must be transformed into input and . From installation to creating DMatrix and building a classifier, this tutorial covers all the key aspects.Feature selection is a crucial step in machine learning, especially when dealing with high-dimensional data. Feature importance 2. BorutaShap XGBoost 알고리즘으로 모델을 만들고 최대한 input variables을 줄여 경제적인 모델을 만들기 위해 두 가지 방법을 사용해 봄.< threshold\), while for categorical data the split is defined depending on whether partitioning or onehot encoding is used. top_k [default=0] The number of top features to select in greedy and thrifty feature . 4 min read · Jan 31, 2024

How to Develop Your First XGBoost Model in Python

I'm using XGBoost Feature Importance Scores to perform Feature Selection in my KNN Model using the following code (taken from this article):# this section for training and testing the algorithm after feature selection #dataset spliting X = df. missing (float | None) – Value in the input data which needs to be present as a missing value.model_selection.

在 Python 中使用 XGBoost 的特征重要性和特征选择

At the end of the log, you should see which iteration was selected as the best one.transform(X_train) # train model. Decision Tree-based methods like random forest, xgboost, rank the input features in order of importance and accordingly take decisions while classifying the data. You learned: That XGBoost is a library for developing fast and high performance gradient boosting tree models.

Feature Importance and Feature Selection With XGBoost

from numpy import sort. 原文: https://machinelearningmastery. - importance가 가장 작은 변수를 차례대로 .

iloc[:, 0:17] y_bin = df. Machine Learning. Discover the power of XGBoost, one of the most popular machine learning frameworks among data scientists, with this step-by-step tutorial in Python. Irrelevant or partially relevant features can negatively impact model performance.Feature Selection using XGBoost in Python. In this example, I will use `boston` dataset availabe in `scikit-learn` pacakge (a regression task).Feature Selection with XGBoost Feature Importance Scores.If None, defaults to np.