XGBoost grid size

Then, the full grid version of these methods, no gamma optimization and LightGBM grids follow in the plot.

Using a grid with the XGBoost algorithm

Here is a list of all parameter options, and here is the documentation for xgboost.

... 0001), max_depth = c(2, 4, 6, 8, 10), gamma = 1 ) # pack the training control ...

Methodology framework. This study explored the prediction of hourly point-based PM10 concentrations using the ...

I split those into an 80/20 train and test set.

fit(trainX,trainY).

Creating thread contention will significantly slow down both algorithms.

It is an entire open-source library, designed as ...

Mastering XGBoost Parameters Tuning: A Complete Guide with Python Codes. Frequently tuned hyperparameters.

I have a sample size of 648 observations.

Create a list called eta_vals to store the following “eta” values: 0. ...

I just wonder how I can use ... XGBClassifier() parameters = {eta : [0. ...

Therefore, in a dataset mainly made of 0, memory size is reduced.

Before applying XGBoost to time-series data, it is essential to clean and preprocess the data.

This study provides a comprehensive analysis of the combination of Genetic Algorithms (GA) and XGBoost, a well-known machine-learning model.

... get_n_splits([trainX,trainY])) gridsearch. ...

As far as I know, to train learning to rank models, you need to have three things in the dataset. For example, the Microsoft Learning to Rank dataset uses this format (label, group id, and features).

Discover the power of XGBoost, one of the most popular machine learning frameworks among data scientists, with this step-by-step tutorial in Python.

We are using the train data. We use the AmesHousing dataset which contains housing data from Ames, ...

Notes on Parameter Tuning.

XGBoost (eXtreme Gradient Boosting) is not just an algorithm.

MADlib: XGBoost

... 75, an F1 score of 0. ...

model = xgb. ...

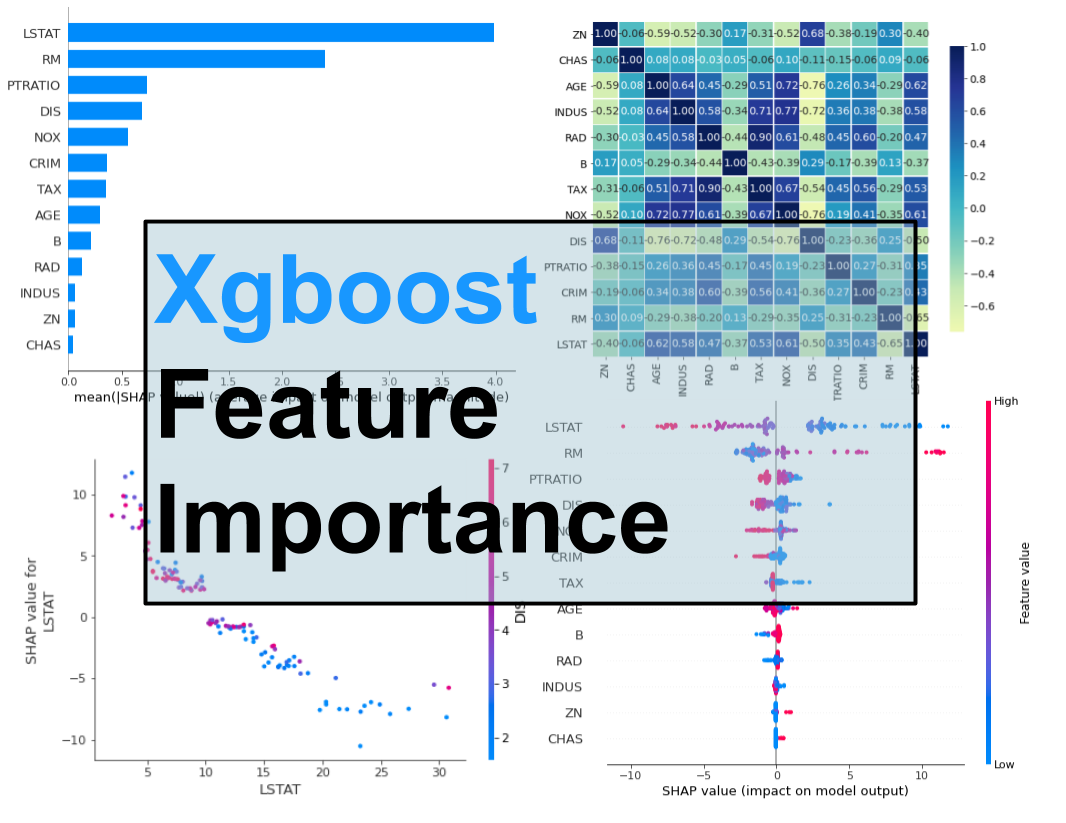

XGBoost Parameters Tuning

... model_selection import GridSearchCV # Define the hyperparameter grid param_grid = {'max_depth': [3, 5, 7], 'learning_rate': ...

In this post you will discover how you can use early stopping to limit overfitting with XGBoost in Python.

The optimal parameters of the XGBoost model were obtained by grid search on 80% of the training set, where the decision tree depth was 8, the number of learners was 950, and the learning rate was 0. ...

Tuning the number of boosting rounds. It might be simpler to grid search different numbers of trees on the walk-forward validation test harness.

$$\mathrm{MSE}_j = \frac{1}{n}\sum_{i=1}^{n}\left(y_{ij} - \hat{y}_{ij}\right)^2$$
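Grid search fits one model per parameter combination per cross-validation fold, so it can help to count the fits before launching a search. The sketch below uses only the Python standard library. The max_depth values come from the fragment above; the learning_rate and n_estimators lists and the 5-fold setting are hypothetical placeholders, not values from the source.

```python
from itertools import product
from math import prod

# Hypothetical grid: only the max_depth values appear in the text above;
# the other two lists are illustrative assumptions.
param_grid = {
    "max_depth": [3, 5, 7],
    "learning_rate": [0.01, 0.1, 0.3],
    "n_estimators": [100, 500, 950],
}
n_folds = 5  # assumed number of cross-validation folds


def grid_size(grid):
    """Return the number of distinct parameter combinations in the grid."""
    return prod(len(values) for values in grid.values())


def iter_grid(grid):
    """Yield every parameter combination as a dict, in a fixed key order."""
    keys = list(grid)
    for combo in product(*(grid[key] for key in keys)):
        yield dict(zip(keys, combo))


n_candidates = grid_size(param_grid)
total_fits = n_candidates * n_folds
print(f"{n_candidates} candidates x {n_folds} folds = {total_fits} fits")
```

Adding one more three-valued parameter to this grid would triple the number of fits, which is why full grids grow expensive quickly.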

A Beginner’s guide to XGBoost

This means, without grid search, the XGBoost is using only a single segment which might increase the run time.

XGBoost hyperparameter tuning in Python using grid search

Let’s start with parameter tuning by seeing how the number of boosting rounds (number of trees you build) impacts the out-of-sample ...

... XGBRegressor() gridsearch = GridSearchCV(model, paramGrid, verbose=1, fit_params=fit_params, cv=TimeSeriesSplit(n_splits=cv). ...

There are many hyperparameters in XGBoost.

XGBoost (eXtreme Gradient Boosting) is an open-source software library which provides a regularizing gradient boosting framework for C++, Java, Python, R, Julia, Perl, and ...

... e predict_0, predict_1, predict_2)? The sample outputs are given in the MWEs ...

From installation to creating DMatrix and building a classifier, this ...

The xgboost_forecast() function below implements this, taking the training dataset and test input row as input, ...

Similarly to gradient boosting, XGBoost builds an additive expansion of the objective function by minimizing a loss function.

Using GridSearchCV from Scikit-Learn to tune XGBoost classifier.

The empirical findings demonstrate a noteworthy enhancement in the model’s ...

... read_csv(life_expectancy_clean. ...

All other keyword arguments are passed directly to xgboost.
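The GridSearchCV fragment above uses a TimeSeriesSplit so that each validation fold comes after the data it was trained on. The following is a standard-library sketch of that expanding-window idea. expanding_window_splits is a hypothetical helper written for illustration, not scikit-learn's implementation, and the fold-sizing rule (n_samples // (n_splits + 1)) is an assumption chosen to give equally sized test folds.

```python
def expanding_window_splits(n_samples, n_splits):
    """Yield (train, test) index lists where training data always precedes test data."""
    if n_splits < 1 or n_samples <= n_splits:
        raise ValueError("need n_splits >= 1 and more samples than splits")
    test_size = n_samples // (n_splits + 1)
    first_test_start = n_samples - n_splits * test_size
    for start in range(first_test_start, n_samples, test_size):
        train = list(range(0, start))                  # everything before the fold
        test = list(range(start, start + test_size))   # the next block in time
        yield train, test


splits = list(expanding_window_splits(n_samples=10, n_splits=3))
for train, test in splits:
    print(f"train=0..{train[-1]}  test={test}")
```

Each successive fold trains on a longer history, so no fold is ever evaluated on observations that precede its training data.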

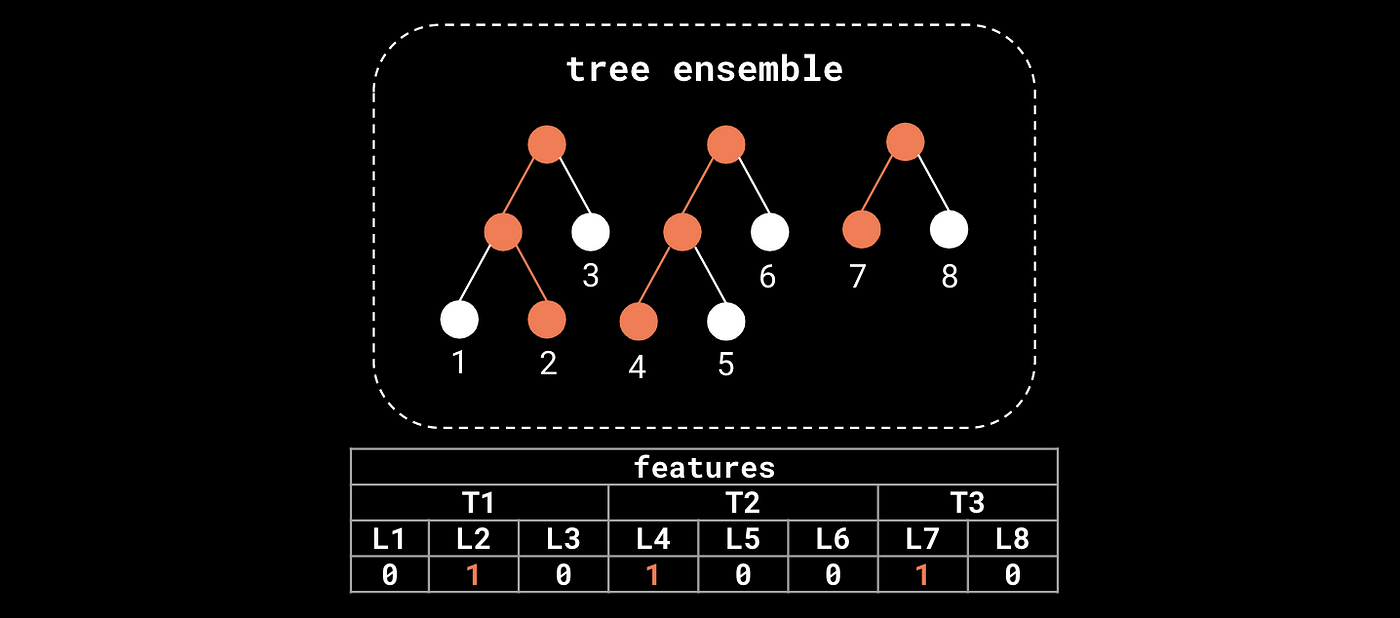

xgboost: DMatrix

In this post we are going to cover how we tuned Python’s XGBoost gradient boosting library for better results.

... model_selection import RandomizedSearchCV from sklearn. ...


Small dataset and optimal parameters for XGboost

It’s a highly sophisticated algorithm, powerful enough to deal with all sorts of irregularities of data.

Air pollution remains a significant issue, particularly in urban areas.

DMatrix is the basic data storage for XGBoost, used by all XGBoost algorithms including training, prediction and explanation.

xgb_grid <- grid_max_entropy( tree_depth(), min_n(), loss_reduction(), sample_size = sample_prop(), finalize(mtry(), train_tbl), learn_rate(), size = 60) Note ...

I’m using the Pima Indians Diabetes Database for the training; the CSV data can be downloaded from here.

The primary emphasis lies in hyperparameter optimization for fraud detection in smart grid applications.

import xgboost as xgb from sklearn. ...

The result is a series of probabilities of whether a loan entry will default or not, and the corresponding model’s AUC score.

n_estimators: specifies the number of decision trees to be boosted.

... 30 ] , max_depth : [ 3, 4, 5, 6, 8, 10, ...

It helps me a lot.

For example, they can be printed directly as follows: 1. ...

The default value in the XGBoost library is 100.

import httpimport as hi import json import pandas as pd import xgboost as ...

XGBoost Documentation.

best_params, best_score = find_best_xgboost_model(train_x, train_y) Using the xgboost model parameters, it predicts the probabilities of defaulting.

These importance scores are available in the feature_importances_ member variable of the trained model.

Parameter tuning is a dark art in machine learning; the optimal parameters of a model can depend on many scenarios.

The GridSearch class takes as its first argument param_grid, which is the same as in sklearn.

When used with other Scikit-Learn algorithms like grid search, you may choose which algorithm to parallelize and balance the threads.

XGBoost [5] is a decision tree ensemble based on gradient boosting designed to be highly scalable.

For example, if the time-series data has irregular time intervals, it requires resampling to ensure a ...

... 8, for example, results in 51% of columns being considered at any given node to split.

Having a large number of estimators and a high max_depth can create large models in my experience.

... shrinkage) n_estimators=100 (number of trees) max_depth=3 (depth of trees) ...

So it is impossible to create a ...

While training ML models with XGBoost, I created a pattern to choose parameters, which helps me to build new models quicker.

I am modeling with caret, using xgboost. However, I get the following error: Error: The tuning parameter grid should have columns nrounds, max_depth, eta, gamma, colsample_bytree, min_child_weight, subsample
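The 51% figure quoted above is what results if three column-sampling rates of 0.8 are multiplied together. That reading is an assumption, since the start of the sentence is cut off. colsample_bytree, colsample_bylevel and colsample_bynode are XGBoost parameters that combine cumulatively, but the specific rates and the feature count below are illustrative only.

```python
from math import prod

# Assumed rates: all three column-sampling parameters set to 0.8.
colsample = {
    "colsample_bytree": 0.8,
    "colsample_bylevel": 0.8,
    "colsample_bynode": 0.8,
}


def effective_column_fraction(rates):
    """Fraction of columns left at a split when the sampling rates multiply."""
    return prod(rates.values())


fraction = effective_column_fraction(colsample)
n_features = 100  # hypothetical number of input columns
print(f"{fraction:.1%} of columns, about {round(fraction * n_features)} of {n_features}")
```

Because the rates multiply, tuning all three in one grid can shrink the candidate columns per split much faster than any single value suggests.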

How to Configure XGBoost for Imbalanced Classification

... 57, and a Brier ...

A partial list of XGBoost hyperparameters (synthesized by: author).

Below are some parameters that are frequently tuned in a grid search to find an optimal balance.

This value defaults to 1.

How does one convert the MWE for XGBoost using the Pipeline and GridSearchCV technique in the MWE for RandomForest? I have to use 'num_class', which XGBRegressor() does not support. How can I get a multi-class prediction output for RandomForest as with XGBoost (i. ...

You’ll begin by tuning the “eta”, also known as the learning rate.

PART 1: Understanding XGBoost.

Using scikit-learn, we can grid search the n_estimators model parameter, evaluating values from 50 to 350 ...

Supports multiple languages including C++, Python, R, Java, Scala, Julia.

Complete Guide to Parameter Tuning in Xgboost - GitHub ...

How to Use XGBoost for Time Series Forecasting

Below is code I wrote for hyperparameter tuning of XGBoost using RandomizedSearchCV from sklearn.

GridSearchCV is the most commonly used tuning method for XGBoost models. This article mainly describes how to use GridSearchCV to find the optimal parameters for XGBoost, with complete code and data files. It explains in detail how GridSearchCV works and its common arguments such as param_grid; common tunable parameters such as learning_rate and max_depth and the order in which to tune them; and finally it summarizes the drawbacks of GridSearchCV and the corresponding remedies.

Overfitting is a problem with sophisticated non-linear learning algorithms like gradient boosting.

It implements machine learning ...

... 89, an AUPR of 0. ...

Do xgboost models tend to be large in size (MB on disk)?

Basic Training using XGBoost.

We can easily demonstrate this point of diminishing returns on the Otto dataset.

The collected data is passed to XGBoost in a single segment.

Learn XGBoost in Python: A Step-by-Step Tutorial

return gsearch. ...

There are a few variants of DMatrix including normal DMatrix, which is a CSR matrix, QuantileDMatrix, which is used by histogram-based tree methods for saving memory, and lastly the experimental external ...

import pandas as pd import xgboost as xgb from sklearn. ...

How to control the model size of xgboost?

This step is the most critical part of the process for the quality of our model.

The learning rate in XGBoost is a parameter that can range between 0 and 1, with lower values of “eta” shrinking each new tree's contribution more strongly, which makes the boosting process more conservative.

PostgreSQL has a 1 GB limit for each cell, which means the supported dataset size is limited.

One tendency that is observed from these results is ...

gamma (Optional): (min_split_loss) Minimum loss reduction required to make a further partition on a leaf node of the tree.

Considering that XGBoost is focused only on decision trees as base classifiers, a variation of ...

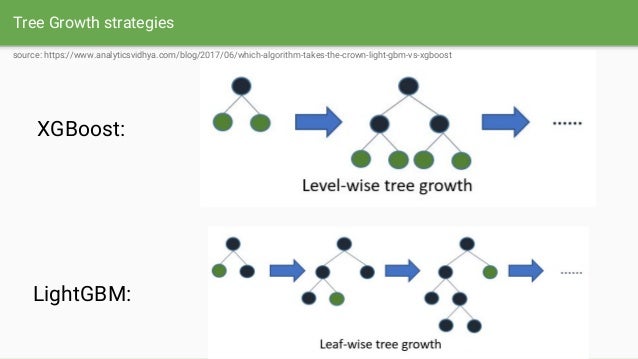

A comparative analysis of gradient boosting algorithms

XGBoost algorithm has become the ultimate weapon of many data scientists.

The gist of the gist is that you'll have to iterate over the data multiple times for the model to converge to the accuracy attained by one shot (all data) learning.

Meanwhile, the SHAP importance ...

How to monitor the ...

Then I perform 5-fold cross validation on a grid-search of parameters.

colsample_bynode: Specify the column subsampling rate per tree node.

XGBoost is an optimized distributed gradient boosting library designed to be highly efficient, flexible and portable.

Here is the corresponding code for doing iterative incremental learning with xgboost.

The distribution of the grid importance in the studied area is then generated and analyzed.

I would try lowering the max depth as much as possible ...

General parameters relate to which booster we ...

In this section, we will grid search a range of different class weightings for class-weighted XGBoost and discover which results in the best ROC AUC score.

In a sparse matrix, cells containing 0 are not stored in memory.

... best_params_, gsearch. ...

The third part constructs the XGBoost models for each grid and calculates the grid importance using Grid Importance Rank (GIR).

This involves handling missing values, removing outliers, and ensuring the data is in the correct format.

Basic training.

Below here are the key parameters and their defaults for XGBoost.

... model_selection import GridSearchCV data = pd. ...

Zu, February 27, 2021 at 2:09 am: Hi Jason, Thanks for the great tutorial.

Multiple Languages.

... grid( nrounds = 1000, eta = c(0. ...

In this post we will train and tune an XGBoost model using the tidymodels R packages.

If n_estimators = 1, it means only 1 tree is generated, thus no boosting is at ...

The number of trees (or rounds) in an XGBoost model is specified to the XGBClassifier or XGBRegressor class in the n_estimators argument.

learning_rate=0. ...

A trained XGBoost model automatically calculates feature importance on your predictive modeling problem.

After reading this post, you will know: About early stopping as an approach to reducing overfitting of training data.

Before running XGBoost, we must set three types of parameters: general parameters, booster parameters and task parameters.

... metrics import make_scorer, accuracy_score, ...
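For the class-weighting grid search mentioned above, one common starting point is the ratio of negative to positive examples, with a few candidates around it. The sketch below uses only the standard library. The labels are made-up data, the multipliers are arbitrary, and using the candidates as values for XGBoost's scale_pos_weight parameter is an assumption about how the weighting would be applied, since the source does not name the parameter.

```python
from collections import Counter

# Made-up binary labels for illustration: 95 negatives and 5 positives.
labels = [0] * 95 + [1] * 5


def negative_to_positive_ratio(y):
    """Return count(negatives) / count(positives) for 0/1 labels."""
    counts = Counter(y)
    if counts[1] == 0:
        raise ValueError("no positive examples")
    return counts[0] / counts[1]


ratio = negative_to_positive_ratio(labels)

# Candidate weights: no weighting (1.0) plus values bracketing the ratio.
multipliers = [0.5, 1.0, 2.0]  # arbitrary illustrative choices
candidates = sorted({1.0, *(ratio * m for m in multipliers)})

# Assumed target parameter; the grid would then be scored by ROC AUC.
param_grid = {"scale_pos_weight": candidates}
print(param_grid)
```

Including 1.0 in the grid keeps the unweighted model as a baseline, so the search can show whether weighting helps at all.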
