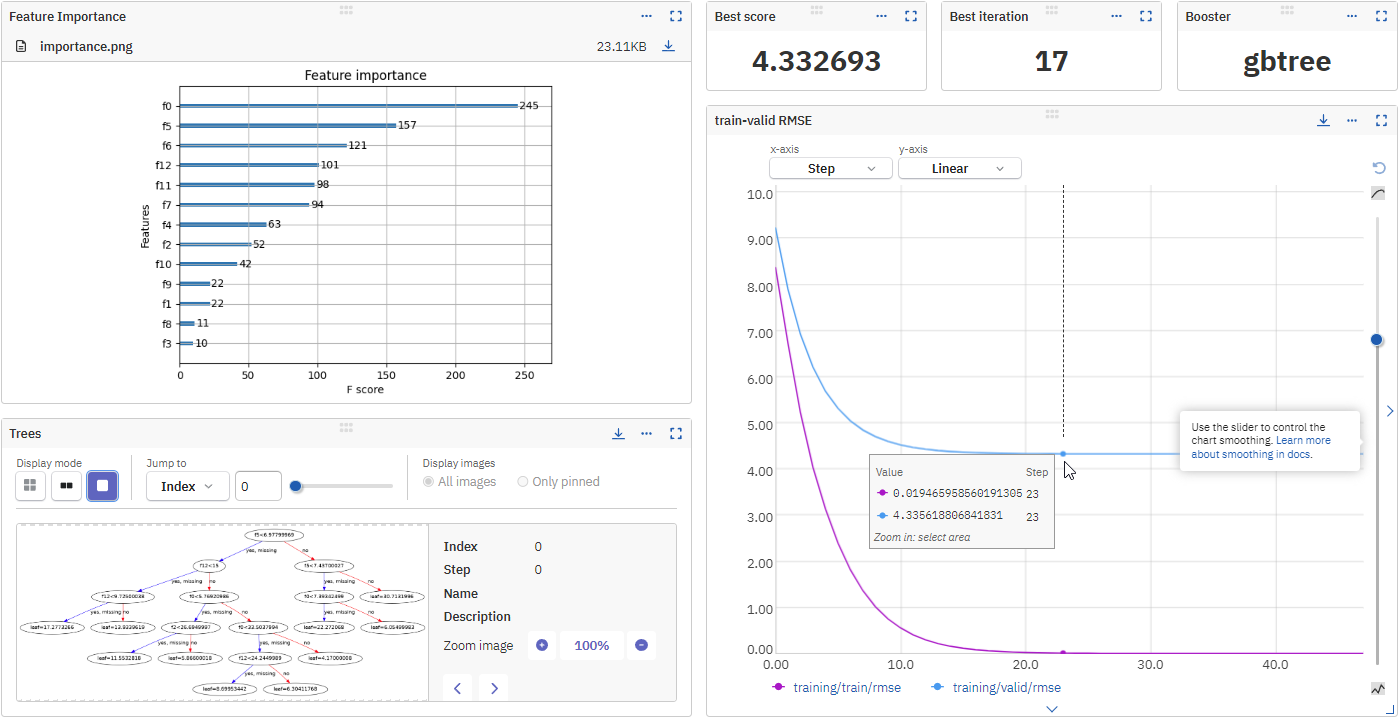

XGBoost plot importance

A common question is whether there is a way to pull the names of the most important features out of a model passed to plot_importance (for example, an XGBRegressor) and save them in a pandas data frame. XGBoost is among the most popular algorithms in the world of data science, and the XGBoost library provides a built-in function that plots features ordered by their importance. The notes below show how to plot feature importance in Python as calculated by the XGBoost model. Computing variable importance (VI) and communicating it through variable importance plots (VIPs) is a fundamental component of interpretable machine learning (IML). Gain is the most relevant attribute for interpreting the relative importance of each feature.

How To Generate Feature Importance Plots Using XGBoost

I know how to plot the importances and how to get them, but I'm looking for a way to save the most important features in a data frame. To install XGBoost, follow the instructions in the Installation Guide.

Feature importance and feature selection are two important aspects of machine learning, especially for complex models like XGBoost. We can extract feature importances directly from a trained XGBoost model using feature_importances_. The "average" is defined based on the importance type. Calling plot_importance on model.get_booster() plots the number of occurrences in splits.

With from xgboost import plot_importance, the default plot labels the vertical axis f0 through f29. These symbols correspond to the 30 features in the dataset, which raises the question of how to replace them with the actual feature names. If you're using the scikit-learn wrapper, you'll need to access the underlying XGBoost Booster and set the feature names on it, instead of on the scikit-learn model. Another reported fix, tested and found to work, is to pass a feature map through booster.get_score(importance_type=importance_type, fmap=fmap).

In the R xgboost package, the importance plotting function returns a data.table with the n_top features sorted by importance.
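As a rough sketch of the question above (saving the most important features), the scores can be sorted and truncated before being handed to pandas. The `scores` dictionary is made-up illustration data standing in for what `get_score()` returns on a trained booster, and `top_features` and `to_frame` are hypothetical helper names, not part of XGBoost.

```python
def top_features(scores, n=10):
    """Return the n highest-scoring (feature, score) pairs, best first."""
    ranked = sorted(scores.items(), key=lambda kv: kv[1], reverse=True)
    return ranked[:n]


def to_frame(pairs):
    """Turn (feature, score) pairs into a pandas DataFrame (requires pandas)."""
    import pandas as pd  # imported lazily so top_features works without pandas
    return pd.DataFrame(pairs, columns=["feature", "importance"])


# Made-up scores, standing in for the dict returned by
# model.get_booster().get_score(importance_type="gain") on a fitted model.
scores = {"age": 12.5, "bmi": 30.1, "glucose": 48.7, "insulin": 3.2}

best = top_features(scores, n=2)
print(best)
```

With pandas installed, `to_frame(best)` would give a two-column data frame that can be saved with the usual pandas writers.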

Interpretable Machine Learning with XGBoost

XGBoost is short for Extreme Gradient Boosting. Individual trees can be drawn with xgb.plot_tree(bst, num_trees=2).

In a call such as plot_importance(model, height=0.5, ax=ax, max_num_features=64), choosing gain or cover as the importance_type makes the values printed in the figure very long. One way to shorten them is to convert them directly to integers (not recommended, but worth trying: the plot itself shows why).
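One possible alternative to integer conversion, sketched here under assumptions, is to round the scores before plotting. The `raw` values are made up, and `round_scores` is a hypothetical helper rather than an XGBoost function. Since plot_importance is documented as accepting a dict of scores, the rounded dict could be passed in place of the model.

```python
def round_scores(scores, ndigits=2):
    """Round every importance score so the labels on the bars stay short."""
    return {name: round(value, ndigits) for name, value in scores.items()}


# Made-up gain scores with long decimal tails.
raw = {"f0": 153.2839111, "f1": 87.50012345, "f2": 4.99999}

short = round_scores(raw)
print(short)
```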

How to get feature importance in xgboost?

Data preparation.

Low-cost and efficient prediction hardware for tabular data

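Plotting individual decision trees can provide insight into the gradient boosting process for a given dataset. A rendered plot that is too large may be cut off on the right side.

To change the size of the figure produced by xgboost.plot_importance, the following steps can be used: set the figure size and adjust the padding between and around the subplots; load the data from a csv file; get the x and y data from the loaded dataset; then get the xgboost model instance.

First, the xgboost variable importance graph is displayed with xgb.plot_importance(clf).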

I want to plot XGBoost variable importance while keeping the variable names!!

Returns: feature_importances_, an array of shape [n_features], except for a multi-class linear model, which returns an array with shape (n_features, n_classes). In xgboost 0.81, XGBRegressor.feature_importances_ is the equivalent of get_score(importance_type='gain').

plot_importance is the function commonly used to plot feature importance. The contribution measure behind it is weight: 'weight' is the number of times a feature is used to split the data across all trees.

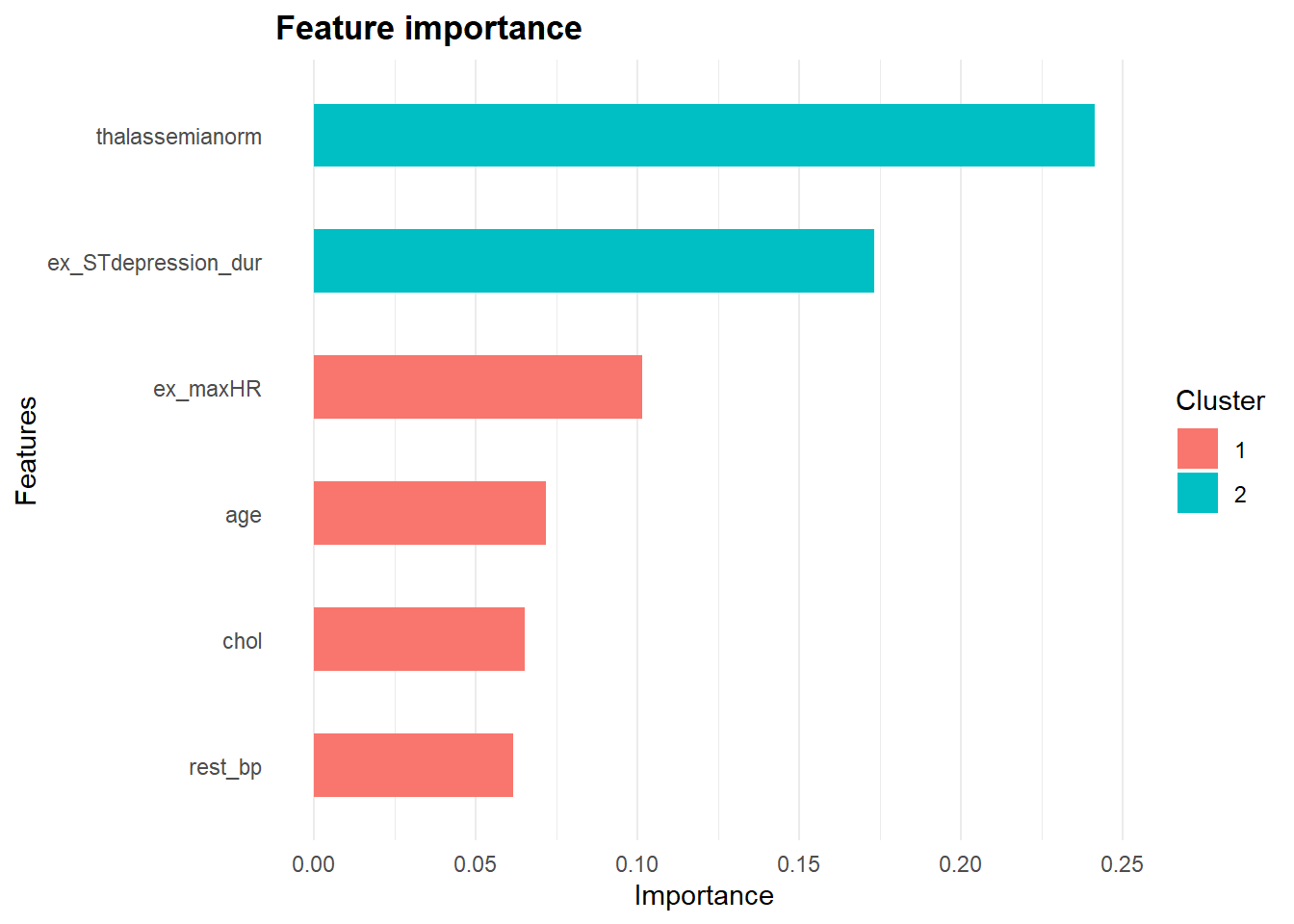

So what is feature importance, after all?

In the xgboost implementation, the get_score method of the Booster class outputs feature importance, and its importance_type parameter supports three ways of computing it. With importance_type = gain, feature importance is based on the loss reduction obtained when the feature is used as a split attribute. Check the argument importance_type. For background, see "The Multiple faces of 'Feature importance' in XGBoost" by Amjad Abu-Rmileh (Towards Data Science).

plot_importance plots importance based on fitted trees. Its grid parameter (bool) turns the axes grids on or off. After setting plt.style.use('ggplot'), xgb.plot_importance(clf), where clf is a fitted model, produces a plot like the image above, but frankly it is hard to tell which bar is which feature. To change the f0, f1, f2, f3, f4, f5 labels that appear in a plot_importance figure into the corresponding feature field names, one write-up edits the plotting code inside the xgboost package. The remaining steps of the figure-size recipe are to fit the model on the x and y data, print the model, and call plot_importance.

The preparation is the same as before, so the explanation is omitted, but XGBoost has a built-in algorithm for handling missing values. There is no need to drop or impute them: data with missing values can be fed to the model as is.

In the R function xgb.importance, if the trees argument is set to NULL, all trees of the model are parsed. To change the title of the graph, for example, add + ggtitle("A GRAPH NAME") to the returned graph.

This tutorial explains how to generate feature importance plots from XGBoost using tree-based feature importance and permutation importance. During this tutorial you will build and evaluate a model to predict arrival delay for flights in and out of NYC in 2013. Note that some explainers use a clustering structure during the explanation process. XGBoost gained popularity in data science after its success on Kaggle.
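The f0, f1, ... labels mentioned above can also be translated after the fact, without touching the library. The following is a minimal sketch, assuming the score dictionary uses the default `f<index>` keys. `rename_features`, the scores, and the column names are illustrative assumptions, not part of XGBoost.

```python
def rename_features(scores, feature_names):
    """Map default keys such as 'f3' to feature_names[3]; keep other keys as-is."""
    renamed = {}
    for key, value in scores.items():
        if key.startswith("f") and key[1:].isdigit():
            index = int(key[1:])
            if index < len(feature_names):
                key = feature_names[index]
        renamed[key] = value
    return renamed


# Made-up split counts keyed by default feature labels.
scores = {"f0": 12, "f2": 7, "f1": 3}
# Hypothetical column names, in the order the model saw them.
names = ["pregnancies", "glucose", "blood_pressure"]

named = rename_features(scores, names)
print(named)
```

The renamed dictionary keeps the scores untouched, so it can be sorted, tabulated, or plotted with readable labels.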

XGBoost plot

Like many data scientists, I now count XGBoost as part of my toolkit. It implements machine learning algorithms under the Gradient Boosting framework. To verify your installation, run import xgboost in Python.

The plot_importance function is defined in the library's plotting module. It gives an attractively simple bar chart representing the importance of each feature in our dataset. Among its parameters, grid defaults to True (on), and importance_type (str) defaults to "weight". The figure size can be changed afterwards with fig.set_size_inches(w, h); matplotlib expects the width first.

In the above example, if feature1 occurred in 2 splits, 1 split and 3 splits in tree1, tree2 and tree3 respectively, then the weight for feature1 is 2 + 1 + 3 = 6. Method get_score returns other importance scores as well. The scikit-learn attribute feature_importances_ now returns gains by default. To get the importance dictionary by gain, call get_booster() on the model and then get_score(importance_type='gain') on the booster. We found that the ordering of variable importance differs greatly across the three options XGBoost provides!

In the R package, the plotting function represents previously calculated feature importance as a bar graph. Its trees argument (only for the gbtree booster) is an integer vector of tree indices that should be included in the importance calculation.
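The weight arithmetic above can be checked with a few lines of plain Python. In this sketch the counts for `feature1` come from the example in the text, while `feature2` and its counts are made up so that a share of the total (often called frequency) can be shown as well.

```python
# Number of splits per tree (tree1, tree2, tree3) for each feature.
# feature1 follows the example in the text; feature2 is made up.
splits_per_tree = {
    "feature1": [2, 1, 3],
    "feature2": [1, 0, 3],
}

# weight: total number of splits that use the feature, across all trees.
weight = {name: sum(counts) for name, counts in splits_per_tree.items()}

# frequency: the feature's weight as a share of all features' weights.
total = sum(weight.values())
frequency = {name: w / total for name, w in weight.items()}

print(weight)
print(frequency)
```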

The function is called plot_importance() and can be called directly on a fitted model, as in plot_importance(model) followed by pyplot.show().

In this tutorial, you will learn how to use XGBoost in Python to perform feature importance analysis and feature selection on a real-world dataset. XGBoost is an optimized distributed gradient boosting library designed to be highly efficient, flexible and portable. As science and technology advance, the black box of machine learning is slowly being opened, and XGBoost provides a plot_importance function for plotting feature importance. It represents previously calculated feature importance as a bar graph. In this tutorial you will also discover how you can plot individual decision trees from a trained gradient boosting model using XGBoost in Python.

Let's fit the model: xgb_reg = xgb.XGBRegressor().fit(X_train_scaled, y_train). The importance plot then comes from plot_importance(model) followed by pyplot.show().

Parameters: booster (Booster, XGBModel or dict): a Booster or XGBModel instance, or a dict taken by Booster.get_fscore().

With importance_type = weight (the default), feature importance is the number of times the feature is used as a split attribute across all trees. The frequency for feature1 is calculated as its percentage weight over the weights of all features. For instance, if the importance type is "total_gain", then the score is the sum of loss change for each split from all trees. The plots compared here are the result of running xgboost.plot_importance with both importance_type = "cover" and importance_type = "gain".

XGBoost for Python: plot importance. I want to save this figure with a proper size so that I can use it in a pdf. It looks like plot_importance returns an Axes object. A related question is titled "XGBoost Plot Importance F-Score Values >100". Another concerns to_graphviz(bst, num_trees=2): it does return a plot, but the plot is too big to see in full.
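To make the difference between the importance types concrete, here is a small sketch that derives weight, total_gain, and gain from a list of split records. It takes gain to be the average loss change per split, which is an assumption based on the remark that the "average" depends on the importance type. The split list is made up, and this is not how XGBoost stores its trees internally.

```python
from collections import defaultdict

# One (feature, loss_change) record per split, pooled over all trees.
# The numbers are made up for illustration.
splits = [("f0", 4.0), ("f1", 1.5), ("f0", 2.0), ("f1", 0.5), ("f0", 3.0)]

total_gain = defaultdict(float)  # sum of loss change over the feature's splits
weight = defaultdict(int)        # number of splits that use the feature

for feature, loss_change in splits:
    total_gain[feature] += loss_change
    weight[feature] += 1

# gain: average loss change per split for each feature.
gain = {feature: total_gain[feature] / weight[feature] for feature in total_gain}

print(dict(weight))
print(dict(total_gain))
print(gain)
```

A feature can rank high by weight and low by gain (many small splits) or the reverse, which is why the orderings produced by the different importance types often disagree.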

Variable Importance Plots—An Introduction to the vip Package

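In R, xgb.plot.importance uses base R graphics. In Python, the ax parameter of plot_importance is the target axes instance. If None, new figure and axes will be created.

Until the end of 2018, the xgboost default was weight, so take care when using older information or source code. Looking at how each score is actually calculated, the quantities being measured differ greatly depending on the method, so even though they are all called feature importance, they cannot be treated as the same thing.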

How is XGBoost feature importance implemented?

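On the other hand, the comparative results for led are more prominent as tiny classifier is about 75 times smaller and consumes lower power as well as three times .

Restricting the importance calculation to selected trees could be useful, e.g., in multiclass classification to get feature importances for each class separately.

XGBoost: outputting feature importance and selecting features. 1. How does the gradient boosting algorithm calculate feature importance? A benefit of using gradient boosting is that, once the boosted trees have been built, it is relatively straightforward to obtain an importance score for each attribute. Generally speaking, the importance score measures how valuable a feature was in the construction of the boosted decision trees within the model.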

Feature Importance With XGBoost in Python

After calling plot_importance(model, importance_type='gain'), I am not able to change the size of this plot. One approach is to create the axes first with fig, ax = plt.subplots(figsize=(25, 15)) and pass them in: plot_importance(model, height=0.5, ax=ax, max_num_features=64).

The bar plot sorts each cluster and sub-cluster feature importance values in that cluster in an attempt to put the most important features at the top.

[Solution] Successfully solving the XGBoost plot

In this piece, I am going to explain how to.

Interpretable XGBoost machine learning

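A separate write-up covers how to use XGBClassifier and plot_importance from the XGBoost module to rank features by importance. The LightGBM algorithm also provides a plot_importance() method for viewing feature importance, and that write-up uses LightGBM as the example for visualizing it.

In R, the xgb.plot.importance function creates a barplot (when plot=TRUE) and silently returns a processed data.table, while the xgb.ggplot.importance plot uses the ggplot backend.

For the Python plot, I want something similar to figsize. The ax parameter (matplotlib Axes, default None) is the target axes instance. Importances and trees can be drawn with xgb.plot_importance(bst) and xgb.plot_tree.

In this paper, we focus on global methods for quantifying the importance of features in an ML model; that is, methods that help us understand the global contribution each feature has to a model's predictions.

The data has shape (331, 10): 10 features to learn from and plug into the regression formula. Let's get started. For example, a complete code listing can use the built-in plot_importance() function to plot feature importance for the Pima Indians dataset, ending with a call to show().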