Deep Learning: GRU (Gated Recurrent Unit)

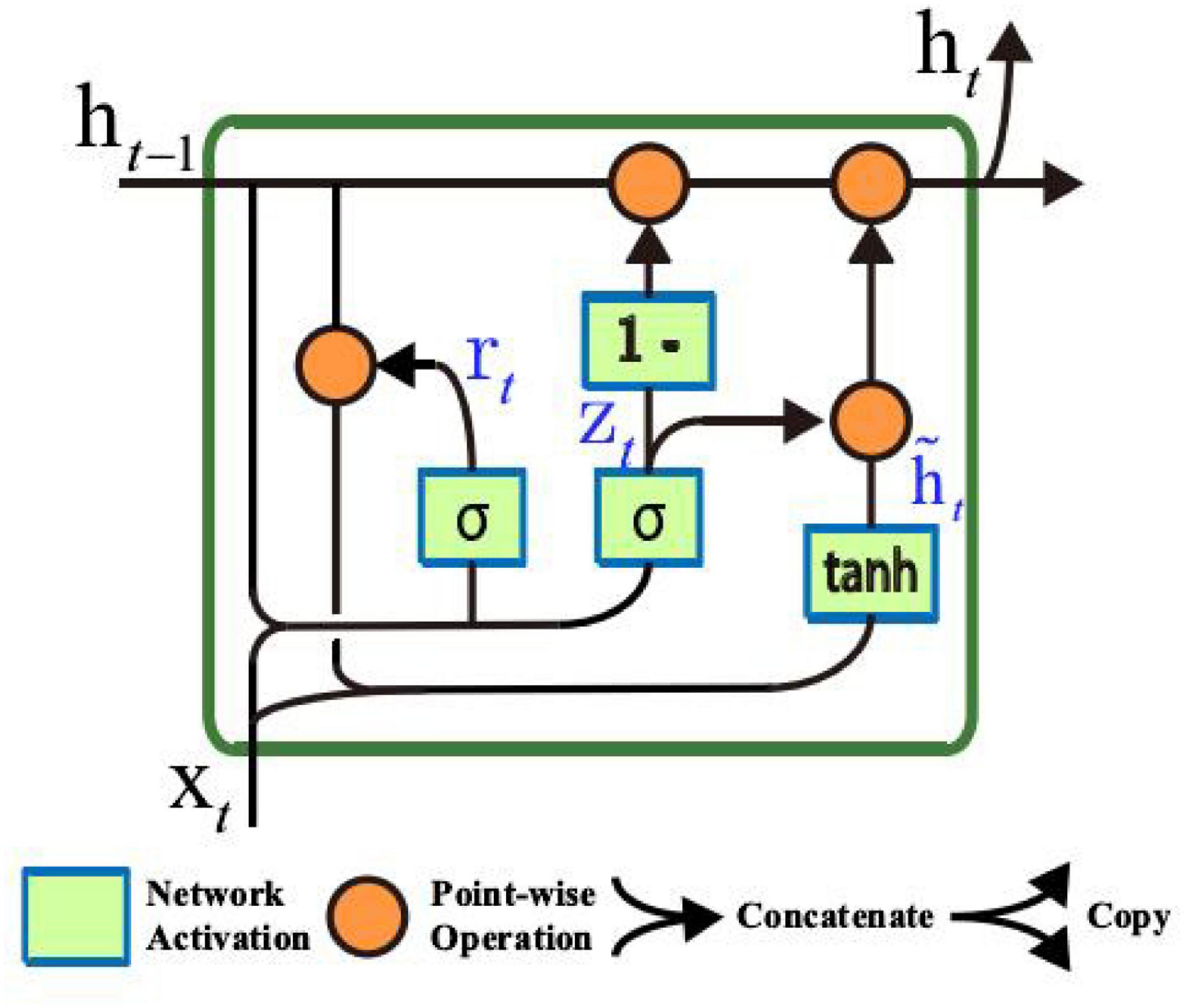

Image source: Learning Phrase Representations … (Cho et al., 2014).

GRU stands for Gated Recurrent Unit, a type of recurrent neural network (RNN) architecture similar to the LSTM (Long Short-Term Memory). This network was introduced by Cho et al. in 2014. Like the LSTM, it was designed to tackle the vanishing/exploding gradient problem of standard RNNs. Because the GRU was inspired by the LSTM, its structure is very similar, so a solid understanding of the LSTM helps. In the GRU, the LSTM's forget and input gates are merged into a single update gate, and the output gate is dropped, with a reset gate introduced in its place (explained in detail below).

Deep learning is a subfield of machine learning.

Posted by Yujian Tang, January 2, 2022 (updated September 6, 2022). In December 2021, we went over How to Build a Recurrent Neural Network from Scratch, How to Build a Neural Network from Scratch in Python 3, and How to Build a Neural …

A GRU is similar to an LSTM, but it has only two gates, a reset gate and an update gate, and notably lacks an output gate. The gated recurrent unit (GRU) operation allows a network to learn dependencies between time steps in time series and sequence data. In PyTorch's `torch.nn.GRU`, the trailing constructor arguments default to `dropout=0.0`, `bidirectional=False`, `device=None`, and `dtype=None`.
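
For reference, the two gates and the state update are often written as below. This is a sketch in the column-vector notation used by Dive into Deep Learning; the symbol names ($\mathbf{W}_{xr}$, $\mathbf{b}_z$, and so on) are chosen here for illustration, $\sigma$ is the logistic sigmoid and $\odot$ is element-wise multiplication. Conventions differ between sources: some write $\mathbf{h}_t = (1 - \mathbf{z}_t) \odot \mathbf{h}_{t-1} + \mathbf{z}_t \odot \tilde{\mathbf{h}}_t$, swapping the roles of $\mathbf{z}_t$ and $1 - \mathbf{z}_t$, and PyTorch applies the reset gate after the recurrent matrix product rather than before it.

$$
\begin{aligned}
\mathbf{r}_t &= \sigma\left(\mathbf{W}_{xr}\mathbf{x}_t + \mathbf{W}_{hr}\mathbf{h}_{t-1} + \mathbf{b}_r\right) && \text{(reset gate)} \\
\mathbf{z}_t &= \sigma\left(\mathbf{W}_{xz}\mathbf{x}_t + \mathbf{W}_{hz}\mathbf{h}_{t-1} + \mathbf{b}_z\right) && \text{(update gate)} \\
\tilde{\mathbf{h}}_t &= \tanh\left(\mathbf{W}_{xh}\mathbf{x}_t + \mathbf{W}_{hh}\left(\mathbf{r}_t \odot \mathbf{h}_{t-1}\right) + \mathbf{b}_h\right) && \text{(candidate state)} \\
\mathbf{h}_t &= \mathbf{z}_t \odot \mathbf{h}_{t-1} + \left(1 - \mathbf{z}_t\right) \odot \tilde{\mathbf{h}}_t && \text{(new hidden state)}
\end{aligned}
$$

There is no output gate: $\mathbf{h}_t$ is both the unit's output and the state carried to the next time step.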

Gated Recurrent Unit Networks

In MATLAB, the `gru` function applies the deep learning GRU operation to `dlarray` data; to apply a GRU operation within a `dlnetwork` object, use `gruLayer` instead. In one reported application, the data were preprocessed with mutual information and locally weighted scatterplot smoothing (LOWESS) to improve sample quality and reduce noise.
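
MATLAB code is not reproduced here. As a language-neutral illustration of what applying the GRU operation to a sequence means, here is a minimal plain-Python sketch (an assumption, not MATLAB's implementation) with input size and hidden size both 1, toy parameter values, hypothetical parameter names, and the gate equations in the Dive into Deep Learning convention, h_t = z·h_{t−1} + (1 − z)·h̃_t. Starting from an initial state `h0`, it returns the hidden state after every time step, which is how the dependency between time steps is carried forward.

```python
import math


def sigmoid(a: float) -> float:
    """Logistic sigmoid, used for both gates."""
    return 1.0 / (1.0 + math.exp(-a))


def gru_sequence(xs, h0, p):
    """Run a GRU with input size 1 and hidden size 1 over a sequence.

    p holds illustrative scalar parameters (hypothetical names):
    w_xr, w_hr, b_r for the reset gate, w_xz, w_hz, b_z for the update
    gate, and w_xh, w_hh, b_h for the candidate state.
    Returns the hidden state after every time step.
    """
    h = h0
    outputs = []
    for x in xs:
        r = sigmoid(p["w_xr"] * x + p["w_hr"] * h + p["b_r"])  # reset gate
        z = sigmoid(p["w_xz"] * x + p["w_hz"] * h + p["b_z"])  # update gate
        h_tilde = math.tanh(p["w_xh"] * x + p["w_hh"] * (r * h) + p["b_h"])
        h = z * h + (1.0 - z) * h_tilde  # keep share z of the old state
        outputs.append(h)
    return outputs


# Toy parameters: every weight and bias set to 0.5 (not trained values).
params = {name: 0.5 for name in
          ["w_xr", "w_hr", "b_r", "w_xz", "w_hz", "b_z", "w_xh", "w_hh", "b_h"]}
states = gru_sequence([1.0, 0.0, -1.0], h0=0.0, p=params)
print(len(states), states[-1])
```

Each state depends on the previous one through both gates, so the last element summarizes the whole sequence.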

BiGRU Explained

A variant of LSTMs is the GRU (Gated Recurrent Unit), developed by Cho et al.

GRU Explained

Human activity recognition (HAR) is a challenging problem in several fields, such as medical diagnosis. The promise of adding state to neural networks is that they will be able to explicitly learn and exploit context in sequence prediction problems.

To understand the GRU network model quickly: the GRU (Gated Recurrent Unit) cell, proposed by K. Cho (조경현) et al. in a 2014 paper, can be described as a simplified version of the LSTM cell. Notably, it was proposed by a Korean researcher, Dr. Kyunghyun Cho. Compared with the LSTM:
1) There is no separate cell state; the hidden state also takes over the role of the cell state.
2) The LSTM's forget gate and input gate are combined into a single gate called the update gate.

Similar to the LSTM, the GRU addresses the short-term memory problem of standard RNNs. I'm Michael, and I'm a machine learning engineer in the AI voice assistant space.

In Keras, based on available runtime hardware and constraints, the GRU layer chooses between different implementations (cuDNN-based or backend-native) to maximize performance. PyTorch lists several conditions under which a persistent algorithm can be selected to improve performance; they include 1) cuDNN is enabled, 2) the input data is on the GPU, and 3) the input data has dtype `torch.float16`.

In MATLAB, `layer = gruLayer(numHiddenUnits)` creates a GRU layer and sets the `NumHiddenUnits` property; `layer = gruLayer(numHiddenUnits,Name,Value)` additionally sets the OutputMode, Activations, State, Parameters and Initialization, Learning Rate and Regularization, and Name properties using one or more name-value pair arguments.

In a bidirectional GRU, one GRU takes the input in the forward direction and the other in the backward direction.

Music generation is an application of machine learning that has attracted significant attention in recent years. Currently, deep learning methods outperform traditional machine learning … In this paper, a gated recurrent unit (GRU) … 5 concentrations with incomplete original data.
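
The two differences listed above can be seen in a small, dependency-free sketch of a single GRU step. It assumes plain Python lists for vectors and matrices, hypothetical parameter names (`W_xr`, `W_hr`, `b_r`, and so on), and a hypothetical constant-valued `make_params` helper in place of a real initializer; it is meant to show the data flow, not to be efficient.

```python
import math
from typing import Dict, List

Vector = List[float]
Matrix = List[List[float]]


def matvec(m: Matrix, v: Vector) -> Vector:
    """Matrix (list of rows) times vector."""
    return [sum(w * x for w, x in zip(row, v)) for row in m]


def vadd(*vs: Vector) -> Vector:
    """Element-wise sum of equal-length vectors."""
    return [sum(items) for items in zip(*vs)]


def vsigmoid(v: Vector) -> Vector:
    return [1.0 / (1.0 + math.exp(-a)) for a in v]


def vtanh(v: Vector) -> Vector:
    return [math.tanh(a) for a in v]


def gru_cell(x: Vector, h_prev: Vector, p: Dict[str, list]) -> Vector:
    """One GRU time step. Returns only the new hidden state: unlike an LSTM
    cell, there is no separate cell state to carry along."""
    r = vsigmoid(vadd(matvec(p["W_xr"], x), matvec(p["W_hr"], h_prev), p["b_r"]))
    z = vsigmoid(vadd(matvec(p["W_xz"], x), matvec(p["W_hz"], h_prev), p["b_z"]))
    reset_h = [ri * hi for ri, hi in zip(r, h_prev)]
    h_tilde = vtanh(vadd(matvec(p["W_xh"], x), matvec(p["W_hh"], reset_h), p["b_h"]))
    # The single update gate z does the work of the LSTM's forget gate (z)
    # and input gate (1 - z).
    return [zi * hi + (1.0 - zi) * ci for zi, hi, ci in zip(z, h_prev, h_tilde)]


def make_params(input_size: int, hidden_size: int, value: float = 0.1) -> Dict[str, list]:
    """Constant-valued toy parameters (hypothetical helper, not a real initializer)."""
    def mat(rows: int, cols: int) -> Matrix:
        return [[value] * cols for _ in range(rows)]

    p: Dict[str, list] = {}
    for g in ("r", "z", "h"):  # reset gate, update gate, candidate state
        p[f"W_x{g}"] = mat(hidden_size, input_size)
        p[f"W_h{g}"] = mat(hidden_size, hidden_size)
        p[f"b_{g}"] = [0.0] * hidden_size
    return p


params = make_params(input_size=3, hidden_size=2)
h = gru_cell([1.0, 0.5, -0.5], [0.0, 0.0], params)
print(h)  # a single 2-element state vector
```

The function returns one vector per step; an LSTM step would return both a hidden state and a cell state.
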

Deep Dive into Gated Recurrent Units (GRU): Understanding the

Recent advances in the accuracy of deep learning have contributed to solving HAR problems.

The gated recurrent unit (GRU) (Cho et al., 2014) offered a streamlined version of the LSTM memory cell that often achieves comparable performance but with the advantage of being faster to compute (Chung et al., 2014). GRUs are a toned-down, simplified version of Long Short-Term Memory (LSTM) units: the LSTM's cell state C(t) and hidden state h(t) are merged into a single vector h(t). The key difference between a vanilla RNN and a GRU is that the GRU supports gating of the hidden state, meaning it has learned mechanisms that decide when the hidden state should be updated and when it should be reset.

In this post, I will first walk you through the theory of RNNs, GRUs, and LSTMs, and then show you how to implement and use them in code. The GRU class has many arguments, including the hyperparameters I explained above.

RNNs (recurrent neural networks, réseaux de neurones récurrents in French) are neural networks that, until around 2017/2018, were predominantly used for natural language processing problems. h_ini, the initial hidden state, is a parameter you must choose (for example, the …

In this video, you learn about the gated recurrent unit, a modification to the RNN hidden layer that makes it much …

Multivariate time series forecasting is a critical problem in many real-world scenarios. The RF-CNN-GRU model employs the RF to fill in missing values in the data and subsequently applies … Published GRU applications include "A GRU deep learning system against attacks in software defined networks" (Journal of Network and Computer Applications, Volume 177, 1 March …, via ScienceDirect) and "A novel graph-based hybrid deep learning of cumulative GRU and deeper GCN for recognition of abnormal gait patterns using wearable sensors."
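
To make the reset mechanism concrete, the sketch below (a single unit with arbitrary, hypothetical weights rather than trained ones) computes the candidate state with the reset gate fully open (r = 1) and fully closed (r = 0). With r = 0 the candidate depends only on the current input, so the previous hidden state is effectively reset.

```python
import math


def candidate_state(x: float, h_prev: float, r: float,
                    w_xh: float = 1.0, w_hh: float = 2.0, b_h: float = 0.0) -> float:
    """Candidate state for a single unit: tanh(w_xh*x + w_hh*(r*h_prev) + b_h).
    The default weights are arbitrary illustrative constants."""
    return math.tanh(w_xh * x + w_hh * (r * h_prev) + b_h)


x = 0.5
use_past = candidate_state(x, h_prev=0.9, r=1.0)   # reset gate open: history used
ignore_a = candidate_state(x, h_prev=0.9, r=0.0)   # reset gate closed ...
ignore_b = candidate_state(x, h_prev=-0.7, r=0.0)  # ... so h_prev no longer matters
print(use_past, ignore_a, ignore_b)
```

In a trained GRU, r is computed from the current input and previous state at every step, so the network itself decides when to reset.
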
There are already many posts on these topics. In this article, I will try to give a fairly simple and understandable explanation of one really fascinating type of neural network.



Gated Recurrent Unit (GRU) is a type of recurrent neural network (RNN) that was introduced by Cho et al. in 2014. Expertise in deep learning involves designing architectures to complete particular tasks. One study suggested a hybrid deep learning model using a 2D CNN, a GRU, and the manta ray foraging algorithm to forecast the performance degradation of proton exchange membrane fuel cells (PEMFC). NeuralForecast was made to train multiple deep learning models at the same time, which is why we need to pass a list of models, even if it has only one model.

[Deep Learning] GRU (Gated Recurrent Unit)

Image source: Rana R (2016). In the LSTM, the forget and input gates were independent of each other, but in the GRU their total is fixed at 1, so the unit takes in new information in exactly the proportion that it forgets old information.
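
The "total fixed at 1" idea can be checked numerically. This is a minimal sketch assuming a single unit and hand-picked gate values (in a real network the gates are computed from the input and the previous state); the LSTM-style function is included only for contrast.

```python
def gru_update(h_prev: float, h_tilde: float, z: float) -> float:
    """GRU-style update for one unit: the weights z and 1 - z always sum to 1,
    so whatever share of the old state is not kept comes from the candidate."""
    return z * h_prev + (1.0 - z) * h_tilde


def lstm_cell_update(c_prev: float, c_tilde: float, f: float, i: float) -> float:
    """LSTM-style cell update for comparison: forget gate f and input gate i
    are computed independently, so f + i need not equal 1."""
    return f * c_prev + i * c_tilde


print(gru_update(0.5, -0.25, z=0.75))              # 0.3125
print(lstm_cell_update(0.5, -0.25, f=1.0, i=1.0))  # 0.25
```

With z = 0.75 the GRU keeps 75% of the old value and writes 25% of the candidate; the LSTM version can keep all of the old value and add all of the new one at the same time.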

Understanding GRU Networks

The GRU (gated recurrent unit) is a recurrent neural network that enables long-term memory. A standard RNN learns by backpropagating gradients, but it has been pointed out that as the number of time steps t grows, those gradients can vanish or explode. A Bidirectional GRU, or BiGRU, is a sequence processing model that consists of two GRUs. The GRU structure is simpler than that of the LSTM in the sense that fewer parameters are involved.

Deep learning (also called deep structured learning or hierarchical learning) is a subfield of artificial intelligence that uses neural networks to solve complex tasks through architectures composed of several non-linear transformations. It reduces a complex function into a graph of functional modules. Thus, it is necessary to implement deep learning algorithms that have high performance and greater accuracy. Their generalization performance was significantly lower than that of the non-Euclidean …

Keywords: Deep learning, Machine Learning, Convolutional Neural Networks (CNN), Recurrent Neural Networks (RNN), Long Short-Term Memory (LSTM), Gated Recurrent Unit (GRU), Generative Models, Autoencoder (AE), Generative adversarial network (GAN), Deep Reinforcement Learning (DRL), Deep …
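
The parameter claim can be made concrete by counting weights. This is a sketch assuming each gate or candidate block has an input matrix, a recurrent matrix, and one bias vector; pass `bias_vectors=2` for layouts such as PyTorch's `torch.nn.GRU`, which stores separate input and recurrent biases. The function names are hypothetical. A GRU has three such blocks and an LSTM four, so for the same sizes a GRU layer has three quarters of the LSTM's parameters, and a BiGRU doubles the GRU count because it holds two GRUs.

```python
def recurrent_param_count(num_blocks: int, input_size: int, hidden_size: int,
                          bias_vectors: int = 1, bidirectional: bool = False) -> int:
    """Parameters of one recurrent layer whose gate/candidate blocks each use an
    input matrix (hidden x input), a recurrent matrix (hidden x hidden) and
    `bias_vectors` bias vectors of length hidden."""
    per_block = (hidden_size * input_size
                 + hidden_size * hidden_size
                 + bias_vectors * hidden_size)
    directions = 2 if bidirectional else 1
    return directions * num_blocks * per_block


def gru_params(input_size: int, hidden_size: int, **kw) -> int:
    # reset gate, update gate, candidate state
    return recurrent_param_count(3, input_size, hidden_size, **kw)


def lstm_params(input_size: int, hidden_size: int, **kw) -> int:
    # input gate, forget gate, output gate, candidate cell state
    return recurrent_param_count(4, input_size, hidden_size, **kw)


print(gru_params(10, 20))                      # 1860
print(lstm_params(10, 20))                     # 2480
print(gru_params(10, 20, bidirectional=True))  # 3720 (BiGRU: two GRUs)
print(gru_params(10, 20, bias_vectors=2))      # 1920
```
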

Gated recurrent unit

Recent advances in deep learning have significantly enhanced the ability to tackle such problems.

A Tour of Recurrent Neural Network Algorithms for Deep Learning

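A GRU is a recurrent neural network with only two gates, a reset gate and an update gate; the update gate takes over the combined role of the LSTM's input and forget gates. A single GRU processes the sequence in one direction; a bidirectional variant (BiGRU) combines two GRUs.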


In PyTorch, the full constructor signature is `torch.nn.GRU(input_size, hidden_size, num_layers=1, bias=True, batch_first=False, dropout=0.0, bidirectional=False, device=None, dtype=None)`.

Understanding Gated Recurrent Unit (GRU) in Deep Learning

Unveiling the Power of Gated Recurrent Units

Gated Recurrent Units (GRU)

The documented conditions under which PyTorch can select a persistent algorithm for `torch.nn.GRU` to improve performance include `torch.float16` input data, a V100 GPU, and input data that is not in `PackedSequence` format.
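
When wiring `torch.nn.GRU` into a model, it helps to know what shapes come back. The helper below is hypothetical and does not call PyTorch; it only encodes the shape rules for `output` and `h_n` of a batched input as stated in the `torch.nn.GRU` documentation (worth re-checking against the version you use), including the rule that `batch_first` affects the input and output tensors but not the hidden state.

```python
from typing import Tuple


def gru_output_shapes(seq_len: int, batch: int, hidden_size: int,
                      num_layers: int = 1, bidirectional: bool = False,
                      batch_first: bool = False) -> Tuple[Tuple[int, ...], Tuple[int, ...]]:
    """Shapes of (output, h_n) for a batched input to a torch.nn.GRU-like module.
    Hypothetical helper: it restates documented shapes and does not call PyTorch."""
    d = 2 if bidirectional else 1
    if batch_first:
        output = (batch, seq_len, d * hidden_size)
    else:
        output = (seq_len, batch, d * hidden_size)
    # batch_first does not apply to the hidden state
    h_n = (d * num_layers, batch, hidden_size)
    return output, h_n


print(gru_output_shapes(seq_len=5, batch=3, hidden_size=16))
print(gru_output_shapes(5, 3, 16, num_layers=2, bidirectional=True, batch_first=True))
```
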

But what exactly is deep learning? It is an artificial neural network … A simple definition: deep learning is a type of artificial intelligence derived from machine learning.

In MATLAB, `Y = gru(X,H0,weights,recurrentWeights,bias)` applies a gated recurrent unit (GRU) operation to input `X`, using the initial hidden state `H0` and the parameters `weights`, `recurrentWeights`, and `bias`.

In this study, we generated musical notes using three deep learning models: Long Short-Term Memory (LSTM), Bidirectional LSTM (BiLSTM), and Gated Recurrent Unit (GRU). We designed and evaluated an assumption-free, deep learning-based methodology for animal health monitoring, specifically for the early detection of respiratory disease in growing pigs based on environmental sensor data.

You've seen how a basic RNN works.

Table of contents: Introduction to Gated Recurrent Units (GRUs); Why We Need GRUs: Importance in Machine Learning (Specific Challenges Addressed by GRUs; Real …).

Gated Recurrent Unit (GRU) for Emotion Classification from …

Understanding Basic architecture of LSTM, GRU diagrammatically

The GRU is a type of RNN framework that applies a gating mechanism; it was inspired by the LSTM and has a simpler structure. LSTMs and GRUs are widely used in state-of-the-art deep learning models. For example, when an observation is important, the model learns to keep the hidden state from that observation unchanged. First, we create a list with the models we want to train, in this case only the GRU.
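
The "keep the hidden state of an important observation" behaviour follows from the update rule h_t = z·h_{t−1} + (1 − z)·h̃_t. In the sketch below the update gate is held at a fixed value purely for illustration (a trained GRU computes it at each step from the input and the previous state), and the candidate values are arbitrary.

```python
def run_update_only(h0: float, candidates: list, z: float) -> float:
    """Apply h = z*h + (1 - z)*h_tilde repeatedly with a fixed update gate z.
    A trained GRU computes z from each input; fixing it is a simplification."""
    h = h0
    for h_tilde in candidates:
        h = z * h + (1.0 - z) * h_tilde
    return h


noise = [-0.5] * 10  # ten later, less important candidate states
print(run_update_only(0.7, noise, z=1.0))  # 0.7: the important state is kept exactly
print(run_update_only(0.7, noise, z=0.0))  # -0.5: overwritten by the latest candidate
print(run_update_only(0.7, noise, z=0.9))  # somewhere in between
```

With z close to 1 the state decays only slowly, which is how a GRU can carry information across many time steps.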